Stable Diffusion General FAQ

Welcome

This FAQ will collect quick answers to commonly asked questions. It has been split off from the main sdgguide to make it easier to add/edit new topics that answer very specific questions or issues.


By design it is forever WIP.

- General questions

- It's 2025, I came back after a year, what do?

- It's 2026, I came back after a year, what do?

- What models are currently popular?

- What does VPRED and EPS mean?

- What resolution/ratio should I use with SDXL based models?

- How can I add text to a t-shirt so it flows with the fabric with an image editor?

- Where is the current trashcollects_lora?

- What is a Catbox?

- Where can I get current Embeddings / Textual Inversions?

- Local video gens? what?

- Where do I get a current danbooru & e621 combined tag autocomplete csv?

- Can I extract tags from images that have no metadata?

- What is eating my VRAM?

- Generation technical issues

- Prompt questions

- Why are there always additional chars in the backgrounds even though I specify (solo)?

- What are the brackets [], parentheses () and other {}+ stuff for?

- How do I use the BREAK statement? Can I define separate characters with it?

- How can I gen ...

- Why are my snow leopard gens always in winter?

- Why do a lot of carrots appear in my gen when I use the carrot \(artist\) tag?

- My characters always have hair (as in hair styles), how can I get rid of it?

- How do I get rid of these garbled signatures?

- What artist and character tags does noobaiXL understand?

- UI questions

- What CLIP SKIP do I need to use?

- Which VAE do I need?

- How can I add (hidden) options to my UI? Quicksettings (Forge/reForge)

- How to use the "send to workflow" feature for ComfyUI?

- When I interrupt generation on (re)Forge it takes a long time to finally stop, why?

- How can I use the same model, lora, etc directories for (re)Forge, ComfyUI, InvokeAI?

- Why does img2img/inpaint in (re)Forge do far fewer steps than I specify?

- How can I use (re)Forge from my phone/tablet?

- Where can I configure RescaleCFG in Forge?

- (re)Forge does not work with my RTX 5000 Blackwell GPU (CUDA no kernel image is available)

- How can I increase the size of the finished gen in the browser?

- A1111/Forge first run "No module named pkg_resources" error when installing CLIP

General questions

It's 2025, I came back after a year, what do?

It's 2026, I came back after a year, what do?

If you are still using the original AUTOMATIC1111 WebUI, it's recommended to switch to (re)Forge or ComfyUI, as A1111 is pretty outdated and rarely updated anymore. Grab an updated, NVIDIA Blackwell compatible portable version of reForge and follow the main quickstart guide:

General advice (especially when you were using SD1.5)

- The meta is shifting away from PonyDiffusionXL based models towards noobaiXL based models

- PonyDiffusion (PDXLv6), Illustrious and noobaiXL are all based on SDXL technology. As such, LoRAs trained on one might work on the others, but that needs to be tested individually

- noobaiXL is a finetune of IllustriousXL v0.1. Some noobaiXL models are still listed as "Illustrious" on civitai, so check the descriptions if it is merged with noobaiXL or not

- Illustrious itself was trained on all of danbooru, so it has some basic furry knowledge too, but noobaiXL adds all of e621. As such, noobaiXL models understand danbooru and e621 tags, while Illustrious understands only danbooru tags.

- noobaiXL EPS v1.1 and VPRED v1.0 training data contains "everything" on e621 until about the middle of 2024. Anything added later, or only present in a small number of posts, will not be known to any model based on it.

- Current models don't require massive amounts of (neg) tags to work, keep your prompts lean.

- Textual Inversions/Embeddings/Hypernetworks are not commonly used anymore and old SD1.5 based ones won't work with SDXL anyway

- SD1.5 LoRAs don't work with SDXL either and are hidden by the WebUI. Pony (PDXL) based LoRAs may or may not work with Illustrious/noobAI based models, but many of those are being retrained, see here

- PDXL score tags don't work on IllustriousXL/noobaiXL based models, you must/can use different quality tags, see here

- These models support artist tags (again), see here

- All SDXL based models need a higher basegen resolution than SD1.5, see here

What models are currently popular?

While Pony Diffusion XL (PDXL) still has some use cases, the meta has shifted to IllustriousXL or rather noobaiXL based models, as these provide better body coherence, updated knowledge of popular characters/series/concepts and support uncensored use of artist tags for unique style mixes

A list of currently popular sdg models including download links, recommendations and sampler comparisons can be found here:

An introductory guide on how to prompt and use noobaiXL based models can be found here:

What does VPRED and EPS mean?

Most PDXL based models were/are of the EPS type, which works well in pretty much any circumstance. As noobaiXL also exists as a VPRED (V_PREDICTION) version besides the EPS version, it has recently become more popular to create VPRED merge models from it. VPRED models are technically slightly different from EPS models and must be supported by the UI you are using. The good news is that popular UIs like (re)Forge and ComfyUI support VPRED out of the box, without the need for manual adjustments (see RescaleCFG), as long as you update them regularly.

Current noobaiXL based VPRED models do not need a yaml file to work.

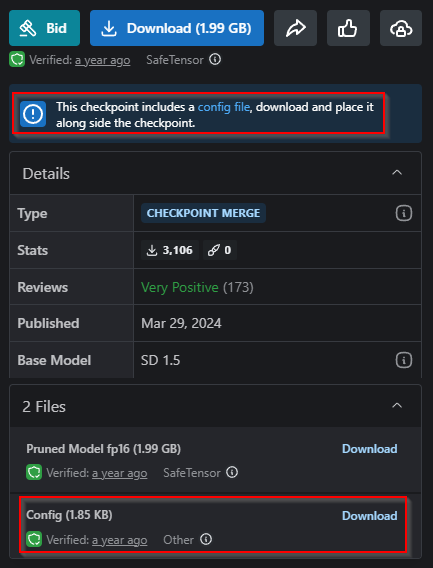

If you want to use an older SD1.5 based VPRED model, the civitai page will usually mention the additional "config file", which you can download by expanding the files section on the right hand side. Place it in the same folder as the safetensors model file!

What resolution/ratio should I use with SDXL based models?

https://rentry.org/sdgguide#resolution-width-height

How can I add text to a t-shirt so it flows with the fabric with an image editor?

In general there are three common approaches to blending in text that you have added in an image editor:

- Just blend it in photoshop/GIMP/Krita. Make it slightly opaque, fade in the edges, change blending mode etc. to make it not stick out so badly.

- Low denoise inpaint with schizoprompting in the positives like english text "MEGAMILK", with whatever you want the text to read in the quotes.

- Tile resample controlnet at 100% weight and high denoise with the same schizoprompting. May or may not work well; it depends a lot on contrast.

If you want to add text to a surface that is not flat, e.g. a wrinkled t-shirt, you can try this approach in Photoshop:

- Save a copy of the base gen with the cleaned shirt (gibberish etc. removed) as a separate .psd

- Apply gaussian blur at 2 strength to the copy, save

- Add text or logos or whatever, adjust to fit the shirt, set the blending mode to multiply if light colored shirt, screen or linear dodge if dark

- Filter > Distort > Displace, values at 10-15

- Use the blurred separate file as the displacement map

- Make a copy of the blurred gen, clip it to the text, set blending to linear dodge (add) and opacity to 50%

- Clip a levels adjustment to the copy of the blurred gen, fuck around with levels until it looks decent

- Maybe add a layer mask to the text/logo and fade out the edges if I feel like it

- Done

Another anon recommended using Inkscape:

I find Inkscape to be a better tool for complex text tasks, or even simple ones as I find Krita's text tool very bad.

Where is the current trashcollects_lora?

What is a Catbox?

Catbox is a free image and file sharing service

URL: https://catbox.moe/

Uploading images to 4chan or imgbox strips the generation metadata from the image. Uploading them to catbox allows sharing this information with other people. The file size limit is 200 MB; if you have a larger file to share you can use https://litterbox.catbox.moe/ to upload a file up to 2 GB in size, which is stored for up to three days (you MUST set the expiration limit BEFORE starting the upload).

Catboxanon maintains a 4chanX extension script that lets you upload images to catbox and 4chan simultaneously, and view the metadata of these catboxed images in the browser

https://gist.github.com/catboxanon/ca46eb79ce55e3216aecab49d5c7a3fb

Follow the instructions on the gist page to install it.

Lately catbox.moe has been very unstable and offline for extended periods of time, so a possible alternative is:

https://pomf.lain.la/

Where can I get current Embeddings / Textual Inversions?

These are rarely used nowadays and the few that exist are of dubious use. It's recommended to just ignore them for any SDXL based model.

Local video gens? what?

Local video gen has seen some drastic improvements over the last few months, with different models being released for different use cases. All of them are trained mostly on realistic human inputs, with some anime or 3dcg sprinkled in, so by default they only work moderately well for toony stuff. If you want to do idle anims or simple porn loops, the right merge or lora stack can do quite well, but complex animations often lead to body horror or everything turning into DreamWorks 3dcg chars.

The most well supported model currently is Wan 2.2 with a lot of loras, merges and quants available on Civitai and Huggingface. Forge Classic Neo does support Wan, but it's recommended to use ComfyUI for video gen as it is on the cutting edge of supporting new models and features.

Check the /ldg/ guide on how to set up Wan 2.2

https://rentry.org/wan22ldgguide

Where do I get a current danbooru & e621 combined tag autocomplete csv?

DBRE6_combi_tags_2024_12_01.csv @ https://files.catbox.moe/fscc1k.csv

Can I extract tags from images that have no metadata?

Short answer: No. If the metadata has been fully removed from the image, which can happen e.g. by editing it in photoshop, there is no feasible way to get the original metadata back.
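Before giving up, you can check whether a PNG still carries its text metadata at all. The sketch below is a minimal stdlib-only reader for PNG text chunks, assuming (as is typical) that A1111-style UIs store the generation info in a chunk named "parameters" and ComfyUI in chunks named "prompt" and "workflow". The function name is hypothetical, it skips compressed zTXt chunks, and it cannot see StealthPNG data, which lives in the pixels rather than in a chunk.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data: bytes) -> dict[str, str]:
    """Return {keyword: text} for all tEXt and iTXt chunks in a PNG file's bytes."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    texts: dict[str, str] = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, body, 4-byte CRC
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        pos += 12 + length
        if chunk_type == b"tEXt":
            # keyword NUL text, both Latin-1
            key, _, value = body.partition(b"\x00")
            texts[key.decode("latin-1")] = value.decode("latin-1")
        elif chunk_type == b"iTXt":
            # keyword NUL, compression flag, method, language NUL, translated keyword NUL, UTF-8 text
            key, _, rest = body.partition(b"\x00")
            compressed = rest[0] == 1
            _language, _, rest = rest[2:].partition(b"\x00")
            _translated, _, value = rest.partition(b"\x00")
            if compressed:
                value = zlib.decompress(value)
            texts[key.decode("latin-1")] = value.decode("utf-8")
        elif chunk_type == b"IEND":
            break
    return texts
```

Usage: open the file in binary mode, pass its bytes in, and look for the "parameters" key. An empty result means the text chunks are gone, which is the "fully removed" case described above.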

There are two more options:

If it is a PNG file, there is a chance that the original creator has saved the metadata in the PNG alpha channel with an extension called StealthPNG. For (re)Forge, install the extension https://github.com/neggles/sd-webui-stealth-pnginfo to be able to read it in the UI's PNG Info tab

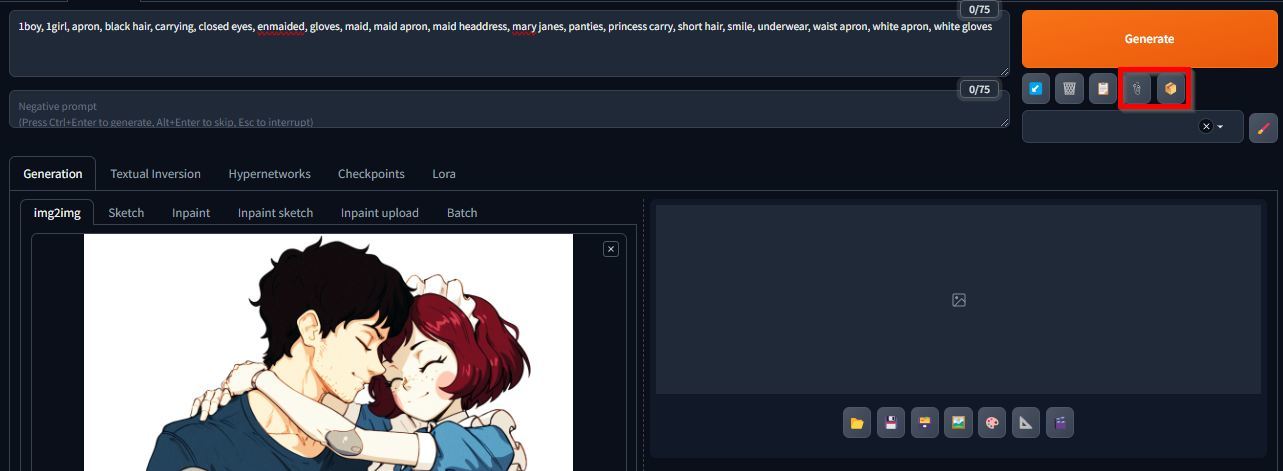

Finally, you can ask the AI to describe the image for you. (re)Forge has two built-in tools hidden in the img2img tab with which you can ask CLIP or the deepBooru model to describe the image that you have loaded

CLIP will give you a natural language description, while deepBooru will use danbooru tags. Both tools are very limited in functionality and tailored for realistic or anime images

If you are using ComfyUI there is a recommended custom node which will describe the image with e621 tags

https://github.com/loopyd/ComfyUI-FD-Tagger

An unverified tip from another anon:

If you want something similar for (re)Forge that uses e621 style tags:

https://mega.nz/folder/UBxDgIyL#K9NJtrWTcvEQtoTl508KiA

Download "E621 Tagger extenstion.7z" and unpack it to your (re)Forge root folder. Do not use both the E621Tagger v15 and E621Tagger v16 extensions; delete one of them!

Another option, which never uses e621 style tags but has a lot of models to choose from. Its output will read like usual SDXL prompts with some natural language: https://github.com/pharmapsychotic/clip-interrogator-ext

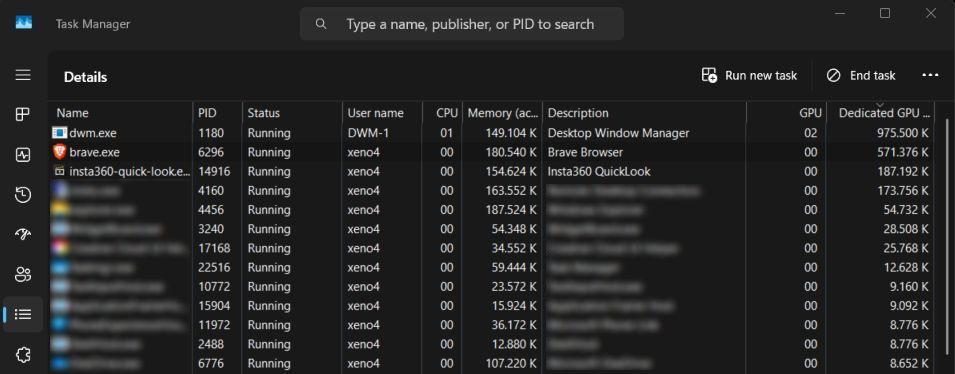

What is eating my VRAM?

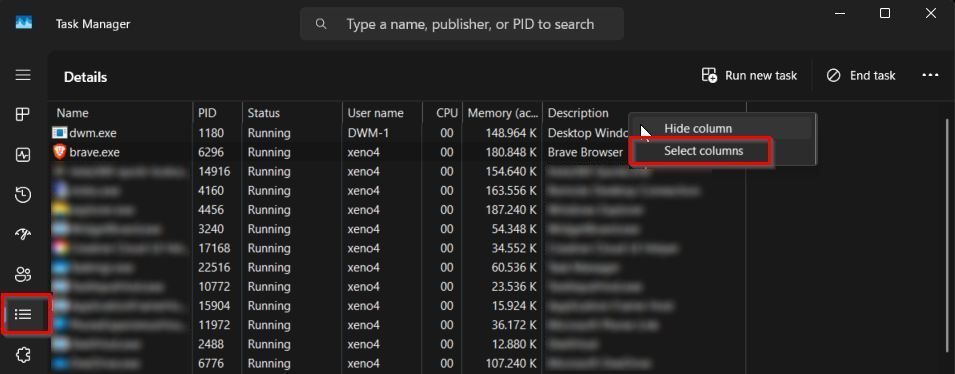

If you are low on VRAM you can check with Windows Task Manager which other applications are using how much of it.

- Open Task Manager, click on the "Details" Tab or Symbol, then right click on any of the column headers, select "Select Columns"

- Scroll down and check "GPU" and "Dedicated GPU memory"

- Sort by the "Dedicated GPU memory" column


Generation technical issues

My gens are fried, help!

| Image | Reason |
|---|---|
| (image) | Image fries at the last generation step: wrong VAE. See here |
| (image) | Image randomly turns into noise: see here, or you may have set a negative weight on a tag without enabling the NegPip extension. |
| (image) | The model you are using is a VPRED model, but either you are missing the corresponding yaml file or your UI cannot handle VPRED models properly. Update your UI, or check if you can enable support manually; you are looking for an option called "ZSNR" or "Zero Terminal SNR". |

I get strange localized artifacts in my gens

If you see artifacts like these but the overall image is fine, it might be caused by an incompatible model & lora combination. Try lowering the lora weight; if that does not solve the issue, the two likely can't be used together.

How can I prevent inpaint halos?

noobaiXL is inherently prone to inpaint halos. Here are some tips:

- Use a non-ancestral sampler just for inpainting

- Layer the inpainted image onto the original in an image editor and cut or smudge the halo away (example needed)

- Increasing gamma before inpainting dark images:

One thing you can do is put the first upscale pass into a photo editor and increase the gamma by, say, 1.4, then once you're done hit it with the reciprocal 0.7143, as the halos won't appear as often on the gamma adjusted image. Further, after you erase halos back down to the base upscale, you can go back to the photo editor and adjust the black level up by 1-3 to eliminate halos that are just on the cusp of visibility on black and that might be overlapping with the subject's body or clothing.

Yet one more thing you can do is open up the soft inpainting tab, and move the difference contrast from 2 down to 1.5 or even 1. This may result in gens doing weird overlay layer effects but it's one of the largest differences I've had with the soft inpainting setting enabled.

One thing I forgot to mention: adjusting the gamma might have the effect of causing your subsequent inpaints to darken the image again, thus returning it to base gamma would end up with the subjects becoming darker than the base gen.

Still, I've found that 1.3-1.4 is a good value to start with, and haven't really come across too many problems involving turning subjects darker because of it.

And, when erasing down to the base later, use a big brush with 0% hardness. There will still be a bit of a glow from the halo, but the hard edge will be removed this way, making it much less noticeable.

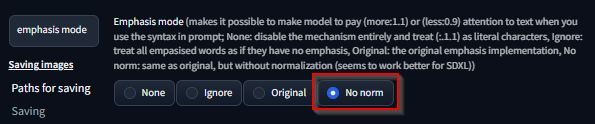
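The gamma trick above works because the two adjustments are reciprocals (1 / 1.4 ≈ 0.7143). Here is a minimal sketch on single 8-bit channel values, assuming your editor defines gamma as out = in^(1/gamma) like common Levels-style sliders (if yours uses the opposite convention, swap the two values). It also shows why the round trip is not perfectly lossless: each pass rounds back to whole 8-bit values. The function name is hypothetical.

```python
def apply_gamma(value: int, gamma: float) -> int:
    """Levels-style gamma on one 8-bit channel value; gamma > 1 brightens midtones."""
    normalized = value / 255
    return round(255 * normalized ** (1 / gamma))


BRIGHTEN = 1.4
RESTORE = 1 / BRIGHTEN  # ~0.7143, the reciprocal quoted above

for v in (0, 32, 64, 128, 255):
    up = apply_gamma(v, BRIGHTEN)
    back = apply_gamma(up, RESTORE)
    print(f"{v:3d} -> {up:3d} -> {back:3d}")
```

Pure black and pure white stay put, dark midtones get lifted the most, and after restoring, each value lands within one step of where it started.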

Sometimes I get just black or corrupted images when using (re)Forge

A common cause of this is the "emphasis mode" setting; try setting it to "No norm":

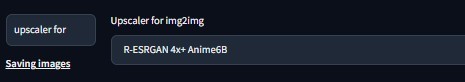

What is the best upscaler for hiresfix or img2img?

Anything but latent; any other current upscaler will be fine. Here is a comparison:

https://rentry.org/sdgguide#upscaler

While you can easily choose the upscaler for hiresfix in the UI, there is an option hidden in the settings menu for img2img as well:


Prompt questions

Why are there always additional chars in the backgrounds even though I specify (solo)?

These are lovingly called noob gremlins and are an issue with most current models. Tags alone cannot always get rid of them. Either edit them out with an image editor, or try the following suggestions:

- add weight (solo:1.3)

- for custom chars, specify "anthro <species>", e.g. "anthro fox" instead of just "fox"

- add "feral <species>" in the neg prompt

- empty surroundings in your gens will increase the risk of gremlins appearing, try to tag some objects fitting your scene to gen instead

What are the brackets [], parentheses () and other {}+ stuff for?

These are used by the (re)Forge advanced prompt syntax that is inherited from A1111, see here:

{} is used by NovelAI and ComfyUI (for tag randomization), while + and - are used by InvokeAI for weighting.
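As a rough illustration of the A1111-style weighting that (re)Forge inherited: each pair of round brackets multiplies a tag's weight by 1.1, each pair of square brackets divides it by 1.1, and (tag:1.3) multiplies it by the given number. The sketch below computes the resulting weight for one bracketed tag; the function name is hypothetical, and it ignores escaped brackets like \( \), prompt editing and everything else the real parser handles.

```python
import re

# Matches "tag:1.3" style explicit weights inside round brackets
EXPLICIT = re.compile(r"(.+):\s*(-?\d*\.?\d+)")


def tag_weight(token: str) -> tuple[str, float]:
    """Return (tag, weight) for one token such as "((fox))", "[fox]" or "(fox:1.3)"."""
    weight = 1.0
    # Peel off one bracket layer at a time, applying its multiplier
    while len(token) >= 2 and (token[0], token[-1]) in {("(", ")"), ("[", "]")}:
        inner = token[1:-1]
        if token[0] == "[":
            weight /= 1.1                        # [tag] lowers emphasis
        else:
            match = EXPLICIT.fullmatch(inner)
            if match:
                inner = match.group(1)
                weight *= float(match.group(2))  # (tag:1.3) uses the given number
            else:
                weight *= 1.1                    # (tag) raises emphasis
        token = inner
    return token, weight


for t in ("fox", "(fox)", "((fox))", "[fox]", "(fox:1.3)", "((fox:1.3))", "(carrot:0)"):
    print(t, "->", tag_weight(t))
```

Because nested brackets multiply, ((fox:1.3)) ends up at 1.1 × 1.3 = 1.43 rather than 1.3, which is easy to overlook when copying prompts.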

How do I use the BREAK statement? Can I define separate characters with it?

No!

The SDXL text encoder CLIP "consumes" your tags in blocks of ~75 tokens at a time. If your prompt contains more tags/tokens, they get split into multiple blocks automatically. The BREAK statement forces the creation of a new block, filling the current one with empty tokens. While it's a common myth that "the first block is more important than the next one", in reality the block order has a minimal effect on your average gen. Roughly speaking, the model uses all tokens from all blocks during the whole generation process, so forcing a new block manually cannot be used to reliably group your tags together, e.g. to fully define a custom character. In fact, as you are sending empty tokens to the model, you weigh down your whole prompt a bit each time, which usually has little effect as well.

Here is an empirical test with 64 side-by-side comparisons to verify this.
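To make the block mechanics concrete, here is a heavily simplified sketch of how a prompt is split into ~75-token blocks and what BREAK does. It assumes every word is exactly one token (real CLIP tokenization splits text into sub-word tokens, and A1111-style UIs also try not to split a block in the middle of a tag); all names are hypothetical.

```python
BLOCK_SIZE = 75   # usable tokens per CLIP block (77 including start/end tokens)
PAD = "<pad>"     # stands in for the empty padding tokens


def split_into_blocks(tokens: list[str]) -> list[list[str]]:
    """Split tokens into fixed-size blocks; "BREAK" pads the current block and starts a new one."""
    blocks: list[list[str]] = [[]]
    for token in tokens:
        if token == "BREAK":
            blocks[-1] += [PAD] * (BLOCK_SIZE - len(blocks[-1]))
            blocks.append([])
            continue
        if len(blocks[-1]) == BLOCK_SIZE:  # block full, continue in a new one
            blocks.append([])
        blocks[-1].append(token)
    blocks[-1] += [PAD] * (BLOCK_SIZE - len(blocks[-1]))  # pad the final block
    return blocks


prompt = "anthro fox , solo , forest BREAK red scarf".split()
for i, block in enumerate(split_into_blocks(prompt)):
    real = [t for t in block if t != PAD]
    print(f"block {i}: {len(real)} real tokens, {block.count(PAD)} padding")
```

In this toy prompt the BREAK turns one mostly empty block into two, so 69 extra padding tokens get sent along with the real ones.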

In current models the BREAK statement has very limited edge-case uses and it's recommended to not use it, with one exception:

The BREAK statement is used by the Regional Prompter extension to separate the regions, so if you find an example prompt that contains BREAK it might have been created with the help of that extension.

How can I gen ...

... gryphons

I've found it pretty easy to go with (avian species, quadruped, feral, furry) and have it pop out a gryphon of the chosen species. (folded wings) lets one not deal with having large wing surfaces to inpaint as well.

... coherent text with text2img

You can't with the current popular models. Some text that is common in the training data, like "PLAP" or "sigh", can be produced semi-reliably, and things like "!" or "?" kinda work as well, but you cannot define complete sentences; the result will only be garbled text. It's best not to prompt for text or "speaking" and to add it afterwards with an image editor.

... a character model sheet with different views?

Simply using "model_sheet, turnaround, front view, side view" is enough to get a decent result. The best outcome is achieved without quality tags; in that case roughly 3 out of 5 gens succeed. If you touch up the image in Photoshop by aligning the key body parts to the same level, it can be used as a reference for 3D modeling.

... male characters if the bias is toward female?

Prompt editing the faceless_male tag for the first 30% of the generation process:

[faceless male::0.3] is extremely useful if you wanna swap the gender bias of an image when the bias is slanted towards human female
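How many steps the tag actually stays active depends on your step count. The sketch below assumes the A1111 prompt editing rules that (re)Forge inherited: a value below 1 is a fraction of the total steps, a value of 1 or more is an absolute step number, and [tag::when] keeps the tag up to and including that step. The function name is hypothetical, and exact off-by-one behavior may differ between UIs and versions.

```python
def prompt_edit_cutoff(when: float, steps: int) -> int:
    """Last step (1-based) on which the first part of [before:after:when] is used."""
    if when < 1:
        when *= steps                  # fraction of the total steps
    return min(steps, int(when))       # absolute step number, capped at the step count


for steps in (20, 30):
    cutoff = prompt_edit_cutoff(0.3, steps)
    print(f"[faceless male::0.3] at {steps} steps: active for steps 1-{cutoff}")
```

So at 30 steps the tag only shapes roughly the first 9 steps, where the overall composition and body types get decided, and then drops out before it can put faceless males into the final details.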

Why are my snow leopard gens always in winter?

Why do a lot of carrots appear in my gen when I use the carrot \(artist\) tag?

Some artist tags, concepts or characters can lead to tag bleed, meaning if their tag contains words that are themselves tags known to the model, the AI may want to fulfil your wish for more carrots in your image.

Examples:

- snow leopard: high chance of snow or winter

- carrot \(artist\): high chance of additional carrots lying around

- worm's-eye view: high chance of worms appearing in the image

A workaround is to deemphasize the word in the tag. This gives the AI the hint that you e.g. don't want any actual carrots, while it can still interpret the full tag as the artist tag. It is not totally reliable and depends on many factors, but it has worked well most of the time.

Example solutions:

- (snow:0) leopard,

- (carrot:0) \(artist\),

- (worm:0)'s-eye view,

See https://rentry.org/sdgguide#weighting-attentionemphasis for more information about tag weighting.

My characters always have hair (as in hair styles), how can I get rid of it?

The more anime influence or artist preference there is in your setup, the more it will default to generating characters with human hair styles. The common fix is to put "bald" into your prompt, enforcing it with "hair" in the negative prompt if required (though that can have other side effects). Bald characters tend to have very smooth heads, which you can counter with "cheek tuft" and optionally "head tuft".

Lion example (lions generate with manes 90% of the time); the image contains metadata.

How do I get rid of these garbled signatures?

There is no 100% reliable way to get rid of them with prompting. While you can put "signature, patreon, patreon logo" in the negatives, signatures are heavily trained into the noobaiXL models, so they will still appear, or reappear on the upscale.

You can try to remove them with masked inpaint prompting alone, but the much easier way is to edit them out in an image editor and then inpaint that area. If you use (re)Forge and don't automatically upscale everything, you can just load the rawgen in an image editor, paint over the signature with similar colors, and then use the sparkle button to do the hiresfix. Here is a short video using Krita to do so.

What artist and character tags does noobaiXL understand?

Check the Laxhar HF for the csv files:

https://huggingface.co/datasets/Laxhar/noob-wiki/tree/main


UI questions

What CLIP SKIP do I need to use?

With up-to-date UIs you don't need to set clip skip manually. Tests have shown that current UIs set it automatically for SDXL based models, so setting it manually may either be ignored by the UI or lead to corrupt images.

ComfyUI sets clip skip to -2 by default for all SDXL models

https://github.com/comfyanonymous/ComfyUI/blob/b4d3652d88927a341f22a35252471562f1f25f1b/comfy/sdxl_clip.py#L45

If you use the clip skip node to overwrite this setting, you will receive broken images.

A reForge comparison shows that clip skip settings up to 2 are ignored when loading an SDXL model, but setting it higher will fry your gens.

Which VAE do I need?

99% of current models come with a built-in VAE so there is rarely a need to download a separate one for normal operations. Leave it on "Automatic" in the webUIs and connect the VAE from the model load node in Comfy.

If for a specific reason you must use a separate VAE you can use either the standard SDXL one:

https://huggingface.co/stabilityai/sdxl-vae/resolve/main/sdxl_vae.safetensors?download=true

or if you run into VRAM limits you can try this recommended one:

https://civitai.com/models/140686

Still using SD1.5 based models? This one seems to be recommended (DO NOT USE FOR SDXL!):

https://civitai.com/models/276082

Put the downloaded safetensors file in your "<webui>\models\VAE" folder and refresh the UI.

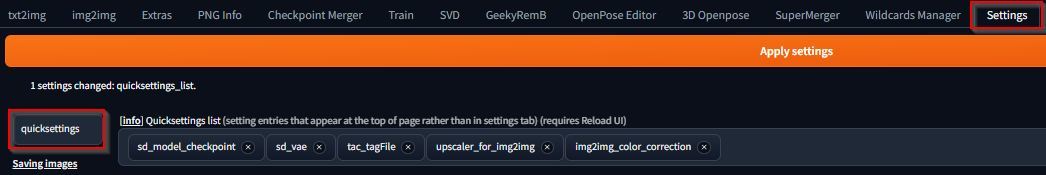

How can I add (hidden) options to my UI? Quicksettings (Forge/reForge)

In the UI tab "Settings" > "User Interface", you can add various settings that you want quick access to or even some that are otherwise hidden to the top of the UI. Click on the "[Info]" link to view a list of all available options.

How to use the "send to workflow" feature for ComfyUI?

Q: I have several different workflows saved for things like inpainting, upscaling, and ControlNet, but the context menu only ever shows "send to current workflow". Is there some other place to save workflows that I'm missing?

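You have to edit the pysssss.json file in ComfyUI\custom_nodes\comfyui-custom-scripts and add

"workflows": { "directory": "path/to/workflows/folder" }

The default path for saved workflows is "ComfyUI\user\default\workflows". Inside the JSON file, either write the path with forward slashes or double every backslash (e.g. "path\\to\\workflows\\folder"), as single backslashes are not valid JSON, and separate the new entry from the existing ones with a comma.

Restart ComfyUI and it will work.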
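If you'd rather not hand-edit the JSON, a small Python script can add the entry for you, and the json module takes care of escaping Windows backslashes. A minimal sketch, assuming pysssss.json is a single JSON object as described above; the function names are hypothetical, so back up the file before trying it.

```python
import json
from pathlib import Path


def add_workflows_dir(config_text: str, workflows_dir: str) -> str:
    """Return the config JSON with "workflows": {"directory": ...} set."""
    config = json.loads(config_text) if config_text.strip() else {}
    config["workflows"] = {"directory": workflows_dir}
    return json.dumps(config, indent=4)  # backslashes are escaped automatically


def update_config_file(config_file: Path, workflows_dir: str) -> None:
    """Rewrite pysssss.json in place (make a backup first)."""
    text = config_file.read_text(encoding="utf-8") if config_file.exists() else ""
    config_file.write_text(add_workflows_dir(text, workflows_dir), encoding="utf-8")
```

For example, call update_config_file(Path(r"ComfyUI\custom_nodes\comfyui-custom-scripts\pysssss.json"), r"path\to\workflows\folder") with the paths adjusted to your install, then restart ComfyUI.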

When I interrupt generation on (re)Forge it takes a long time to finally stop, why?

On interrupt the image is still VAE-decoded by default which can take some time depending on various factors. Enable this option to skip the decode step

How can I use the same model, lora, etc directories for (re)Forge, ComfyUI, InvokeAI?

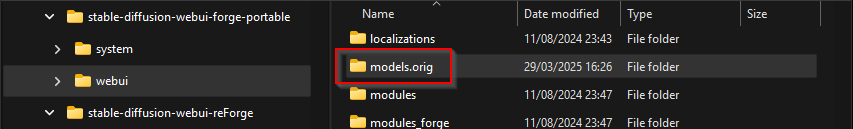

If you have multiple instances of reForge and/or Forge installed, you can use a symlink (technically a directory junction) to link all "models" directories together so all files in these folders exist only once on your hard drive. Be careful: any change to this folder in any location will be reflected everywhere, as technically it's just one directory that is linked to multiple locations!

For this example we assume that you have a reForge installation in "C:\SD\stable-diffusion-webui-reForge" and want to use this as your base.

- Step 1: rename the "models" folder in your secondary installation

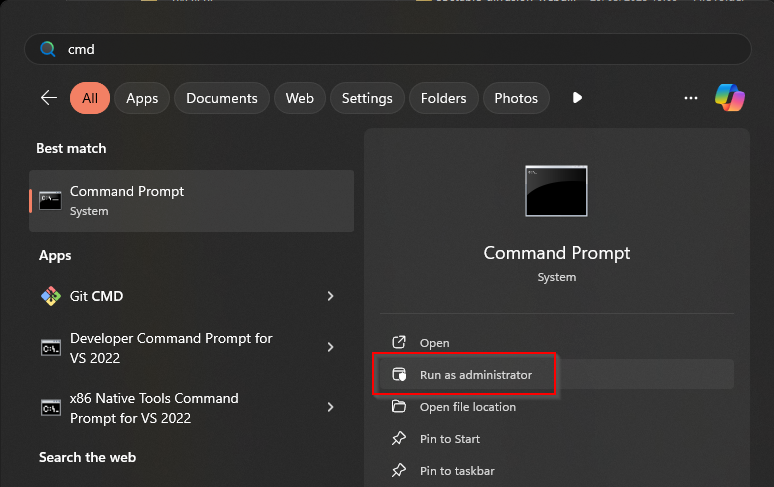

- Step 2: open a command prompt as administrator

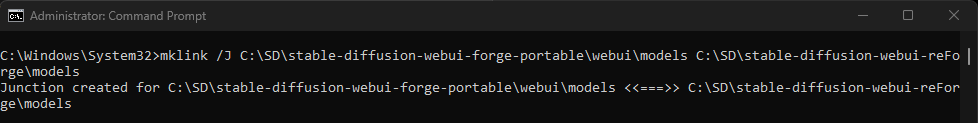

- Step 3: Type the following command, change paths according to your setup

mklink /J C:\SD\stable-diffusion-webui-forge-portable\webui\models C:\SD\stable-diffusion-webui-reForge\models

The first path is the new link that will be created; the second path is the existing master directory.

- Step 4: You will see that the directory is again visible but has a special icon in explorer

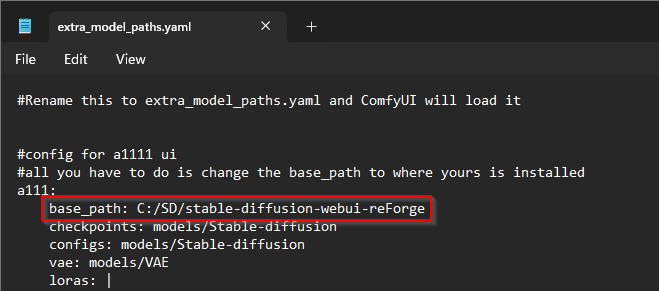

If you want to add ComfyUI to that master directory as well DO NOT use the mklink approach as comfyUI updates tend to remove the symlinks. Instead go to your comfyUI folder (inside the portable folder if you are using that) and look for a file called "extra_model_paths.yaml.example". Rename that file to just "extra_model_paths.yaml" and edit it with a text editor.

By default the first section "a111" is already active, so you only have to change "base_path" to the directory that contains your master installation. Make sure to replace backslashes \ with forward slashes /.
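If you copy the path from Explorer, Python's pathlib can do the slash conversion for you. A minimal sketch; the function name is hypothetical, and the example path is the reForge master install assumed earlier in this section.

```python
from pathlib import PureWindowsPath


def yaml_base_path(windows_path: str) -> str:
    """Turn a path copied from Explorer into a forward-slash base_path line."""
    return "base_path: " + PureWindowsPath(windows_path).as_posix()


print(yaml_base_path(r"C:\SD\stable-diffusion-webui-reForge"))
```

This prints base_path: C:/SD/stable-diffusion-webui-reForge, which you can paste over the existing base_path line (keep the indentation the file already uses).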

For adding InvokeAI, you can specify the "load in place" option when adding models to prevent the UI from copying them to its own directory.

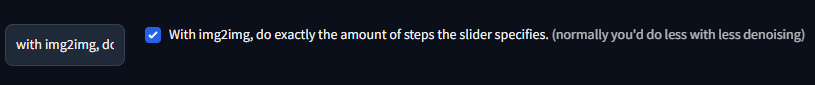

Why does img2img/inpaint in (re)Forge do far fewer steps than I specify?

By default, the number of steps actually done is the number you configure in the GUI multiplied by the denoise value. So if you set it to 20 steps and denoise is 0.3, it will only do 6 steps.

You can change this behaviour with the following setting
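The arithmetic behind this, as a sketch modeled on how A1111-derived UIs compute img2img steps (simplified; the function name and the fixed_steps flag are hypothetical stand-ins for the setting mentioned above): by default only part of the step schedule is run, while with the setting enabled the schedule is stretched so that roughly the requested number of steps actually runs.

```python
def img2img_steps(steps: int, denoise: float, fixed_steps: bool = False) -> tuple[int, int]:
    """Return (schedule_length, steps_actually_run) for img2img/inpaint."""
    denoise = min(denoise, 0.999)           # capped just below 1.0
    if fixed_steps:
        return int(steps / denoise), steps  # stretch the schedule, run ~steps
    return steps, int(denoise * steps)      # default: only the tail of the schedule runs


print(img2img_steps(20, 0.3))                    # default behavior
print(img2img_steps(20, 0.3, fixed_steps=True))  # with the setting enabled
```

With the setting enabled, low denoise values make each img2img pass noticeably slower, since you now pay for the full step count every time.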

How can I use (re)Forge from my phone/tablet?

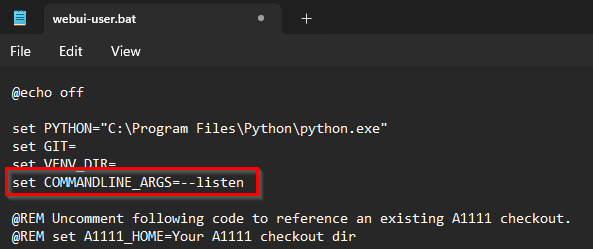

If you want to connect to the UI from inside your home network you must shut it down and edit your "webui-user.bat" to add the "--listen" parameter to the commandline args:

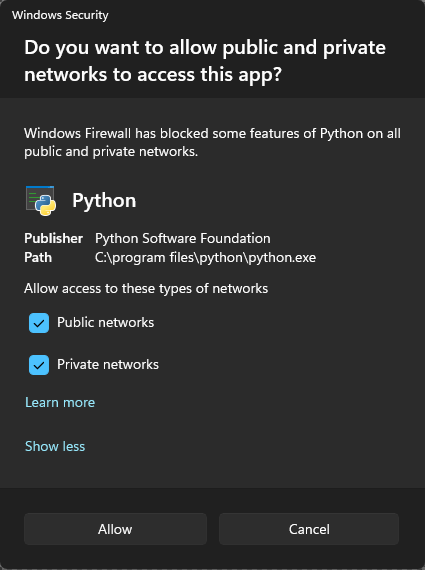

Start the webUI again; you will likely get a popup from Windows Firewall that you need to acknowledge to open the necessary ports for python.exe

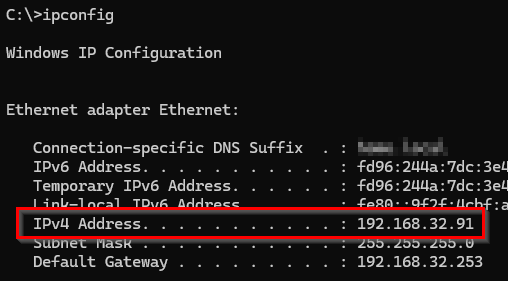

Open a command prompt and execute "ipconfig" to find the IPv4 address of your computer:

Browse to the webUI on your mobile device with "http://<your.ip.address.here>:7860"

At this point you could open the access on your router from the Internet to your genning machine. But this is a certified stupid idea, as it will allow anyone to find this unprotected access and abuse your webUI to their heart's content. If you really need access via the Internet, look into VPN or cloud-based redirect solutions like Teamviewer, but this is a complex topic and outside the scope of this guide.
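If you prefer to let Python find the address, here is a best-effort sketch. It assumes the default port 7860 from above and uses a common trick: connecting a UDP socket sends no packets but makes the OS pick the outgoing network interface. The function names are hypothetical; ipconfig remains the reliable check if the result looks wrong (VPN adapters, for example, can confuse it).

```python
import socket


def lan_ipv4() -> str:
    """Best-effort guess of this PC's LAN IPv4 address; falls back to localhost."""
    try:
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
            # UDP connect() only selects a route; 192.0.2.1 is a reserved documentation address
            sock.connect(("192.0.2.1", 80))
            return sock.getsockname()[0]
    except OSError:
        return "127.0.0.1"


def webui_url(ip: str, port: int = 7860) -> str:
    return f"http://{ip}:{port}"


print("Open on your phone/tablet:", webui_url(lan_ipv4()))
```

If it prints 127.0.0.1, no network route was found and the address is only reachable from the PC itself.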

Where can I configure RescaleCFG in Forge?

It seems there is currently no working simple CFGRescale plugin available, so you have to use the "LatentModifier Integrated" built-in extension. Set it to Enabled and use this slider to configure the same values you would set on reForge or Comfy:

I tested it side-by-side with "RescaleCFG for reForge" and the resulting images are the same.

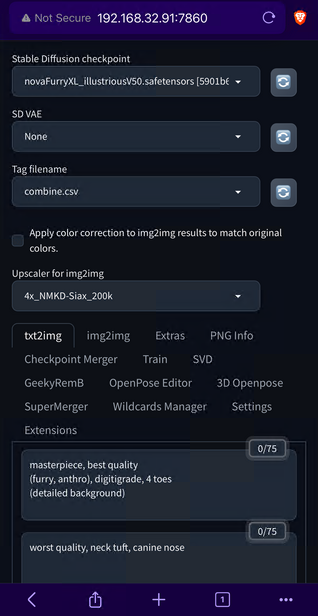

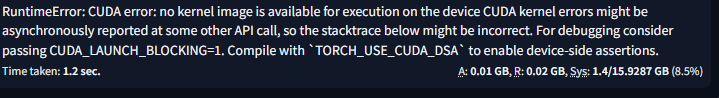

(re)Forge does not work with my RTX 5000 Blackwell GPU (CUDA no kernel image is available)

If you switched to a new RTX 5000 series GPU but are still using an older version of a webUI, it likely still has a Pytorch version that is incompatible with these cards, as Pytorch does not get updated by the standard update scripts. You need to update your installation to at least Pytorch version 2.7.0.


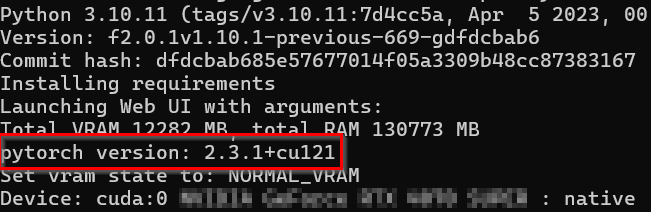

The popular UIs show the installed Pytorch version during startup:

Make a backup first! Depending on how old your previous UI install is, updating to current Pytorch may also update a lot of dependencies which may break stuff!
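To check the installed version without starting the UI, you can run a short script with the UI's own Python (the venv or portable Python described below). A minimal sketch: the helper names are hypothetical, and the note that Blackwell cards report compute capability 12.0 (sm_120) is an assumption worth double-checking for your card.

```python
import re


def version_at_least(version: str, minimum: tuple[int, int, int] = (2, 7, 0)) -> bool:
    """Compare a version string like "2.7.0+cu128" against a minimum (major, minor, patch)."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    parts = tuple(int(group or 0) for group in match.groups())
    return parts >= minimum


def report_torch() -> None:
    """Print what matters for RTX 5000 support; needs torch, so run it with the UI's Python."""
    import torch

    print("Pytorch", torch.__version__, "| CUDA build", torch.version.cuda)
    print("at least 2.7.0:", version_at_least(str(torch.__version__)))
    if torch.cuda.is_available():
        print("GPU:", torch.cuda.get_device_name(0), torch.cuda.get_device_capability(0))
        print("sm_120 kernels included:", "sm_120" in torch.cuda.get_arch_list())
```

Save it as a .py file, add a report_torch() call at the end, and run it with python.exe from the activated venv or portable environment.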

If you want to do a fresh install anyway here is a collection of popular webui portable packages that are updated to latest version of the UI and Pytorch:

ComfyUI portable

If you are using specifically this UI you can run update\update_comfyui_and_python_dependencies.bat which will update the UI and all Python modules to the latest version, no need to do anything manually.

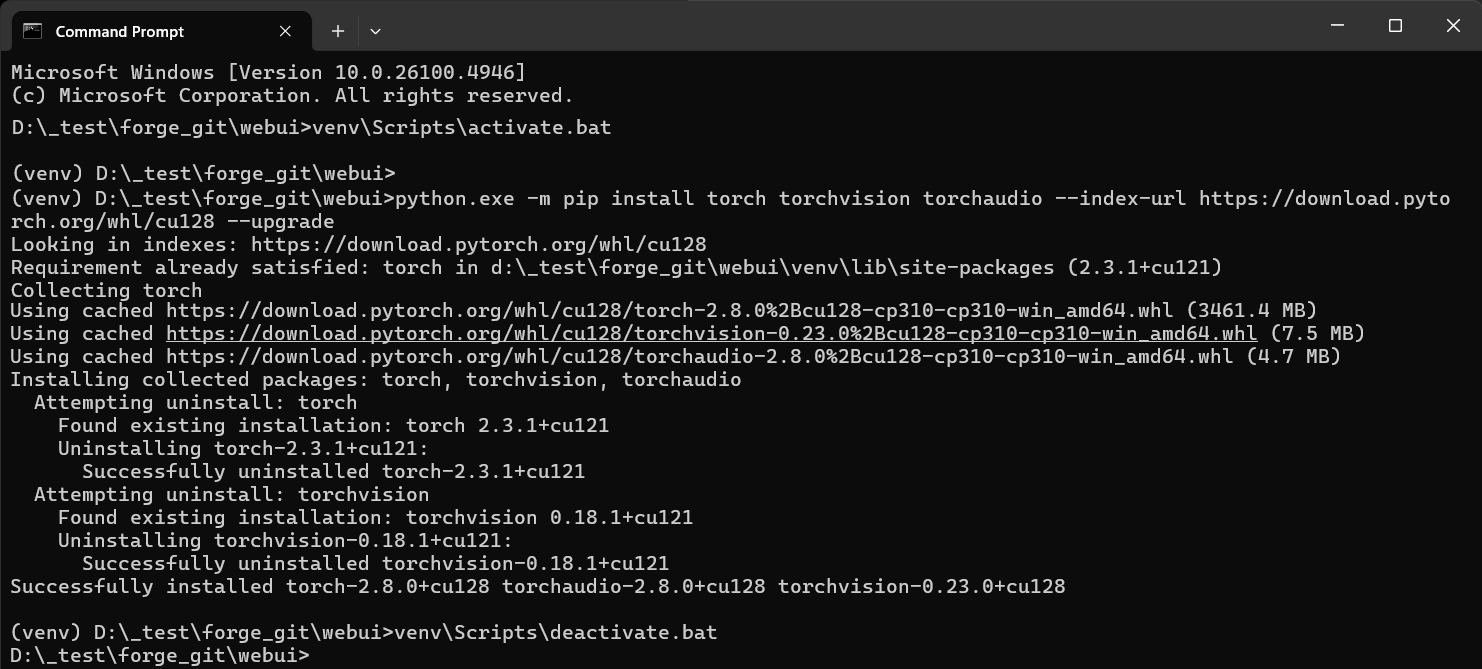

Forge/reForge/other webUIs installed via git clone

If you used the traditional way to install your UI via cloning the github repository, you will have a setup that includes a venv where all the Python requirements, including Pytorch, are installed. To update Pytorch you first need to activate this venv, otherwise it will not work.

- open a cmd.exe (not powershell!) and go to your webui folder (in this example D:\_test\forge_git\webui)

- run venv\Scripts\activate.bat. Your command prompt should change to start with (venv)

- run python.exe -m pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu128 --upgrade

  This will download the current Pytorch version that is built against CUDA 12.8. There are other builds, but this one is the commonly used one.

- once the installation is successful, run venv\Scripts\deactivate.bat

Now you can start your webui normally and it should display the new Pytorch version during startup.

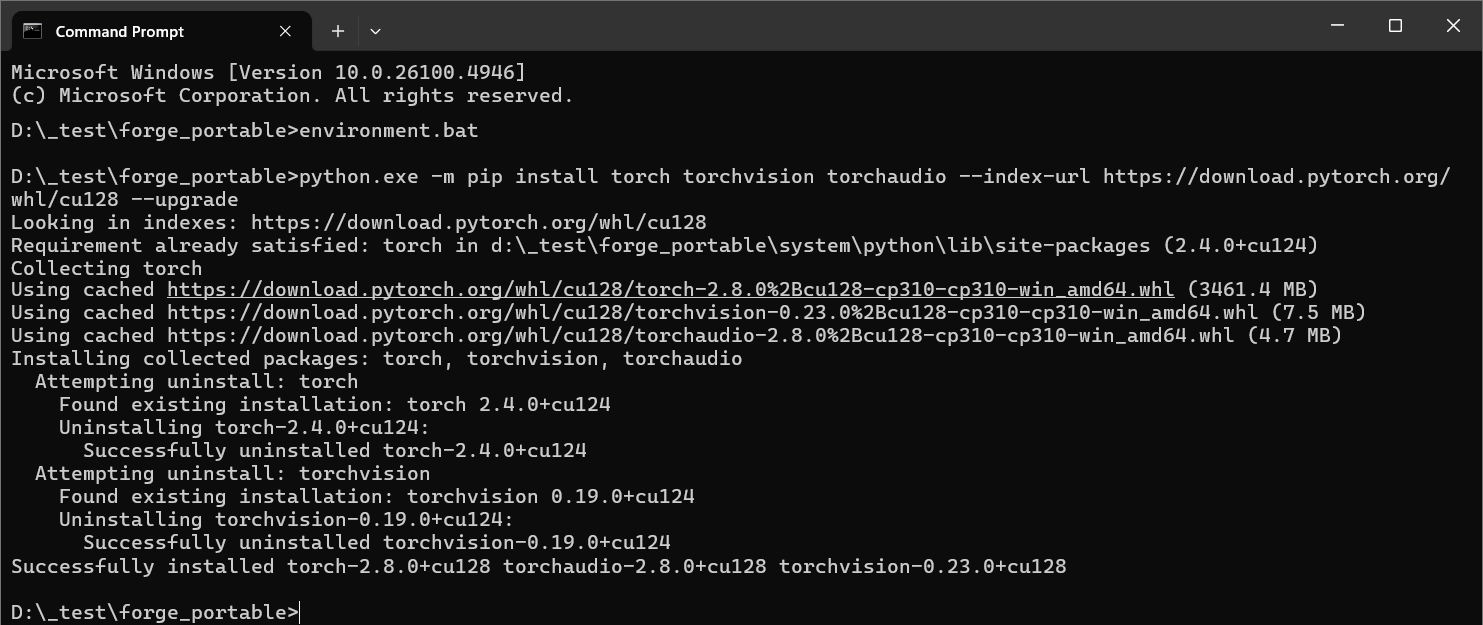

Forge/reForge/other webUIs portable version

The portable versions don't use a venv but have their own dedicated portable Python that is independent of your system's Python, which is usually activated by running a special bat file like environment.bat first.

- open a cmd.exe (not powershell!) and go to the root directory of your portable webui install (in this example D:\_test\forge_portable)

- run environment.bat (or similar). This may or may not give you any output.

- run python.exe -m pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu128 --upgrade

  This will download the current Pytorch version that is built against CUDA 12.8. There are other builds, but this one is the commonly used one.

Now you can start your webui normally and it should display the new Pytorch version during startup.

How can I increase the size of the finished gen in the browser?

Settings -> User Interface -> Gallery. Enter a value in "Gallery height", e.g. "1152px"

https://github.com/AUTOMATIC1111/stable-diffusion-webui/discussions/5297

A1111/Forge first run "No module named pkg_resources" error when installing CLIP

Currently there can be an issue installing CLIP on a fresh A1111/Forge (and maybe other webui forks as well) during the first run. To fix this you need to upgrade setuptools and install CLIP without build isolation. This should only happen if you have installed the webui the classic way (python and git clone).


- open a cmd.exe (not powershell!) and go to the scripts folder in your webui's venv folder (in this example C:\SD\stable-diffusion-webui\venv\scripts)

- run activate.bat. Your command prompt should change to start with (venv)

- run pip install --force-reinstall setuptools==80.10.2

  This will install a newer version of the Python setuptools that are required.

- run pip install https://codeload.github.com/openai/CLIP/zip/d50d76daa670286dd6cacf3bcd80b5e4823fc8e1 --no-build-isolation

  This will install CLIP without build isolation, preventing the build error.

- once the installation is successful, run deactivate.bat to close the venv.

Now you can run webui-user.bat again to install the rest of the requirements.
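If webui-user.bat still fails, you can re-activate the venv and ask its Python whether the modules can be found at all. A minimal sketch; the helper name is hypothetical, and "clip" is assumed to be the import name of the OpenAI CLIP package installed above.

```python
import importlib.util


def missing_modules(names: tuple[str, ...] = ("pkg_resources", "clip")) -> list[str]:
    """Return the names that the current Python cannot find (without importing them)."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules()
print("all found" if not missing else f"still missing: {', '.join(missing)}")
```

If pkg_resources shows up as missing, the setuptools step did not land in this venv; if only clip is missing, repeat the CLIP install step.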


Xeno443