Archived; see post >>73245729

Have a good one /sdg/

/trash/ MEGA-Megacollection (WIP)

- Splitting the Rentry

- Character Rentry

- PonyDiffusion6 XL artist findings

- Models

- Galleries

- PonyXL LoRAs made by /h/

- LORAs from the Discord

- Assorted Random Stuff

- Artist comparisons

- Different LORA sliders - what do they mean?

- What about samplers?

- Script for comparing models

- Wildcards

- OpenPose Model

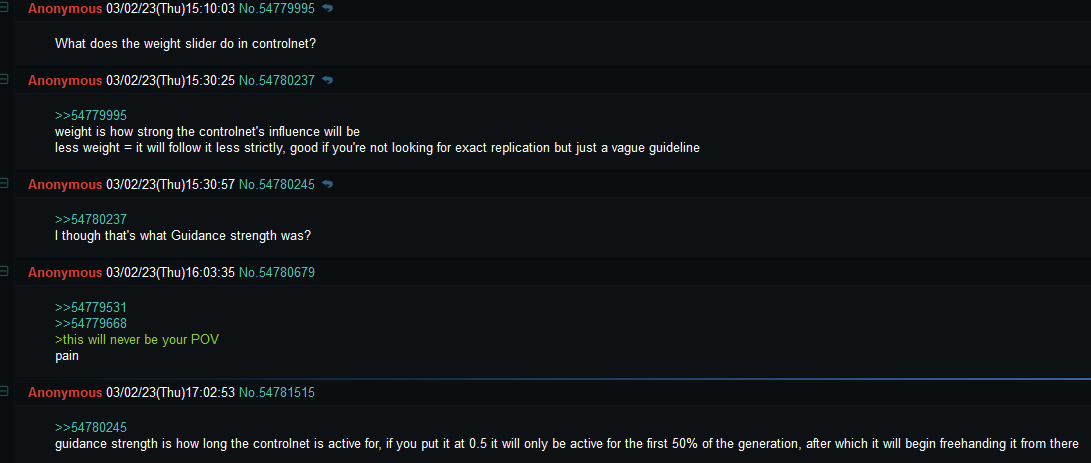

- "What does ControlNet weight and guidance mean?"

- Img2Img examples

- E621 Tagger Model for use in WD Tagger

- Upscaler Model Database

- LoCon/LoHA Training Script / DAdaptation Guide

- Script for building a prompt from a lora's metadata tags

- Example workflows

- Tutorials by fluffscaler

- CDTuner ComfyUI Custom Nodes

- ComfyUI Buffer Nodes

- Sillytavern Character Sprites

- Easyfluff V11.2 HLL LoRA (Weebify your gens)

- NAI Furry v3 random fake artist tags

Splitting the Rentry

We did it guys

I have moved the main LoRA section off to a new rentry:

https://rentry.org/trashcollects_loras

Character Rentry

Huge, separate rentry maintained by another anon; I think most of the LoRAs in it are his own. While I mostly just collect links, that anon curates example images to go along with his LoRAs.

Comes with an edit code to make changes to the rentry, so if you have LoRAs you want to share in the thread, or have some example images for LoRAs posted here on collects, feel free to add new entries to the two rentries.

Part 1: https://rentry.org/c6nt3cnh

Part 2: https://rentry.org/5kdhna5w

PonyDiffusion6 XL artist findings

https://lite.framacalc.org/4ttgzvd0rx-a6jf

Putting it here for better visibility and until I figure out where else to put it.

The author of PonyDiffusion V6 obfuscated artist names before training. As it turns out, they seem to have been replaced with random three-letter combinations.

/h/ has been spending some time trying out various combinations and documenting their findings in the spreadsheet above. If you try some combinations and recognize the artist behind them, feel free to contribute.
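If you want to run your own sweep, here's a minimal sketch that lists every three-letter tag between two bounds, inclusive, so you can paste the result into something like an X/Y/Z plot. It assumes the tags are plain lowercase a-z triples, like the ranges in the compilations below (26^3 = 17,576 combinations in total). `tags_between` is a hypothetical helper, not something /h/ used.

```python
import itertools
import string


def tags_between(start: str, end: str) -> list[str]:
    """All lowercase three-letter tags from start to end, inclusive (hypothetical helper)."""
    every_tag = ("".join(t) for t in itertools.product(string.ascii_lowercase, repeat=3))
    # Same-length lowercase strings compare alphabetically, so a plain range check works.
    return [t for t in every_tag if start <= t <= end]


if __name__ == "__main__":
    tags = tags_between("aaa", "bzm")
    print(len(tags), "tags")
    print(", ".join(tags))
```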

Use the base PonyDiffusion V6 XL checkpoint, not DPO, autismmix or similar. Those will most likely carry similar influences, but for the sake of comparison you should stick to the base model.

DDL: https://civitai.com/api/download/models/290640?type=Model&format=SafeTensor&size=pruned&fp=fp16

Mirror: https://pixeldrain.com/u/UPddM9ez

If you haven't used an SDXL model before, you will need a separate VAE for it:

VAE: https://civitai.com/api/download/models/290640?type=VAE&format=SafeTensor

Mirror: https://pixeldrain.com/u/kzu1x1u8

Also, remember to change Clip Skip to 2.

I'm just gonna link y'all furries all the compilations so far so you can have an easier time looking:

aaa to bzm:

https://files.catbox.moe/c0rl1r.jpg

cad to eum:

https://files.catbox.moe/ewr0s0.jpg

evg to hns:

https://files.catbox.moe/tda4ir.jpg

hpb to jki:

https://files.catbox.moe/44a2rc.jpg

jkv to lek:

https://files.catbox.moe/tj2aeq.jpg

lgu to mkb:

https://files.catbox.moe/p7qqaz.jpg

mkg to nyj:

https://files.catbox.moe/365n8h.jpg

nyp to pyb:

https://files.catbox.moe/64zi6v.jpg

Less consistent:

aav to frw:

https://files.catbox.moe/1z4efd.jpg

fsp to klm:

https://files.catbox.moe/jnjwqi.jpg

kmq to ojn:

https://files.catbox.moe/6qpxyi.jpg

oka to rrg:

https://files.catbox.moe/hvy7re.jpg

Models

This list of models has been added to over the course of more than a year now. Therefore, most models at the top of this list are old. Start at the bottom of the "Models" section, or better yet, check out the current list of recommended models over in /trash/sdg/, then check back and look them up via CTRL+F.

Base SD 1.5

.ckpt: https://pixeldrain.com/u/HQBAmpyD

Easter e17

.ckpt: https://mega.nz/file/Bi5TnJjT#Iex8PkoZVdBd3x58J52ewYLjo-jn9xusnKAhyuNtU-0

.safetensors: https://pixeldrain.com/u/PJUjEzAB

Yiffymix (yiffy e18 and Zeipher F111)

Yiffymix: https://civitai.com/api/download/models/4053?type=Model&format=SafeTensor&size=full&fp=fp16

Yiffymix recommended vae: https://civitai.com/api/download/models/4053?type=VAE&format=Other

Yiffymix 2 (based on fluffyrock-576-704-832-lion-low-lr-e16-offset-noise-e1) (Use Clip Skip 1)

Yiffymix 2: https://civitai.com/api/download/models/40968?type=Model&format=SafeTensor&size=pruned&fp=fp32

YiffyMix2 Species/Artist Grid List [FluffyRock tags]: https://mega.nz/folder/UBxDgIyL#K9NJtrWTcvEQtoTl508KiA/folder/YNhymCLY

YiffAnything

Merge of Yiffy and Anything. Posts from the archives indicate that the hash of the file below differs from the expected one (see the explanation below). Either way, you can download the file below or merge it yourself: select Yiffy as model A, AnythingV3 as B and the leaked NovelAI anime model as C, set the multiplier to 1 and the interpolation method to Add difference.

https://pixeldrain.com/u/QxV5FMjc
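For the curious, "Add difference" computes A + (B - C) * multiplier for every weight. The intuition, assuming AnythingV3 was itself built on top of the NAI model, is that B - C isolates what Anything added, and that difference gets put on top of Yiffy. Minimal sketch below; real checkpoints hold tensors, so plain lists of floats stand in for them here, and `add_difference` is a made-up name.

```python
def add_difference(a, b, c, multiplier=1.0):
    """Return A + (B - C) * multiplier per key (lists of floats stand in for tensors).

    Keys missing from B or C are copied from A unchanged (an assumption for this sketch).
    """
    merged = {}
    for key, a_vals in a.items():
        if key in b and key in c:
            merged[key] = [av + (bv - cv) * multiplier
                           for av, bv, cv in zip(a_vals, b[key], c[key])]
        else:
            merged[key] = list(a_vals)
    return merged


if __name__ == "__main__":
    yiffy = {"w": [1.0, 2.0]}
    anything = {"w": [3.0, 5.0]}
    nai = {"w": [2.0, 4.0]}
    print(add_difference(yiffy, anything, nai))  # {'w': [2.0, 3.0]}
```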

Explanation as stated by anon:

>Reports stated that the hash is different from what is expected

automatic1111 changed the hashes a couple of weeks ago. Ever since they were introduced, they had been 8 characters long; they're now 10 characters long, and are simply the first 10 characters of the file's SHA-256 hash.

every old link you'll find mentioning a hash will show a different hash in the webui now
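To check a file against one of the new 10-character hashes, a minimal sketch that computes the SHA-256 of the whole file and keeps the first 10 hex characters, as described above. `short_hash` is a made-up name, and this does not reproduce the old 8-character hashes.

```python
import hashlib
import sys


def short_hash(path: str, length: int = 10, chunk_size: int = 1 << 20) -> str:
    """First `length` hex characters of the file's SHA-256 (hypothetical helper)."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in 1 MiB chunks so multi-GB checkpoints don't have to fit in RAM.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()[:length]


if __name__ == "__main__":
    for model_path in sys.argv[1:]:
        print(short_hash(model_path), model_path)
```

Run it as `python short_hash.py yiffanything.ckpt` (placeholder filename) and compare the output with the hash the webui shows.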

7th_furry tests (seem to be merges of the 7th layer models)

7th furry testA: https://huggingface.co/syaimu/7th_furry/resolve/main/7th_furry_testA.ckpt

testB: https://huggingface.co/syaimu/7th_furry/resolve/main/7th_furry_testB.ckpt

testC: https://huggingface.co/syaimu/7th_furry/resolve/main/7th_furry_testC.ckpt

NovelAI Leak + VAE

https://pixeldrain.com/u/rWQ9wQmk

NAI Hypernetworks

https://pixeldrain.com/u/BRh8qfJM

Lawlas's Yiffymix 1 and 2

2 has been merged with AOM3 and other anime models, hence the described need for high weighting of furry. I personally prefer 1, but try both and see what you like more.

The mentioned embeddings are on huggingface. Easy_negatives is on CivitAI, but shouldn't need an account.

Version 1

Version 2

Tips

Known problem(s):

Credits:

Here are models used as far as I can remember:

I apologize for not keeping a record of the models I used. Without their amazing work, this model wouldn't have even existed. Kudos to every creator on this site!

AbyssOrangeMix2 (for those without a Huggingface account)

AbyssOrangeMix2_sfw.safetensors

AbyssOrangeMix2_nsfw.safetensors

AbyssOrangeMix2_hard.safetensors

AOM VAE (rename it the same as the AOM model you use)

Frankenmodels (Yttreia's Merges)

https://drive.google.com/drive/folders/1kQrMDo2AtzcfAycGhI79M2YnPUHebu6M

Explanation:

The filename is the recipe. Minus symbols are averaged, plus symbols are added.

Tried avoiding any models that need special VAEs.

Uh, no real comments otherwise, my Twitter is https://twitter.com/Yttreia

Gay621 v0.5

https://civitai.com/api/download/models/12262?type=Model&format=PickleTensor&size=full&fp=fp16

Based64 Mix

https://pixeldrain.com/u/khSK5FBj

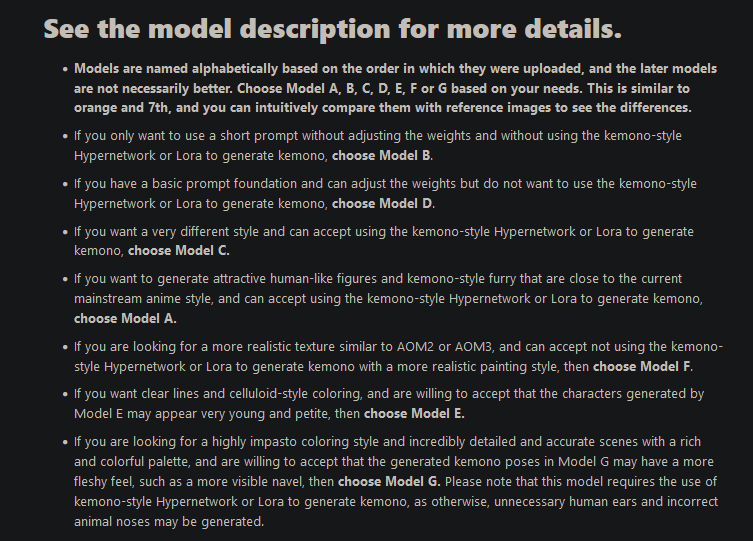

Crosskemono (CivitAI, links last updated: 03/28/2023)

Crosskemono 2 (with added E621 Tag support)

If you make some cool gens with these, feel free to post them over on the Crosskemono CivitAI page and leave a rating - the author machine-translated his way onto /trash/ to ask for feedback and examples, and is bummed out he barely gets any feedback about what people think about the model over on CivitAI.

Crosskemono 3

https://www.seaart.ai/models/detail/ac3c26ac1ff19c18dc840f3b8e162c25

https://pixeldrain.com/u/3YXax5DP

PC 98 Model

https://mega.nz/file/uJkQBbKL#qVI95nOJkkMAjPQXBsvPZA9bTSaF5gOv0IA0XCjdE2E

Low-Poly

https://mega.nz/file/PAcABRrS#tFCWwWyyatquNvrzLIUqPkrpYJhsS9nEjpY0mv4SNKM

Direct DL link from CivitAI for r1 e20: https://civitai.com/api/download/models/80182?type=Model&format=SafeTensor&size=pruned&fp=fp16

Separate Fluffusion rentry maintained by the model author (?): https://rentry.org/fluffusion

Below are links to the Prototype r10 e7 model; you probably won't need it, but it's here for posterity.

Fluff_Proto Merges

0.7(revAnimated_v11) + 0.3(fluff_proto_r10_e7_640x): https://easyupload.io/0eqrwu

Revfluff: https://pixeldrain.com/u/KJ1TKS26

0.8(fluff_proto_r10_e7_640x) + 0.2(revAnimated_v11)

fluff-koto: https://pixeldrain.com/u/mMFsR6Ez (DEAD)

0.75(fluff_proto_r10_e7_640x) + 0.25(kotosmix_v10)

Tism Prism (Sonic characters)

https://archive.org/details/tism-prism-AI

Fluffyrock

What the fuck do all these models MEAN!? (Taken from the Discord on August 27th)

MAIN MODELS (For these, typically download the most recent one):

e6laion (Combination dataset of LAION and e621 images; can do realism rather well. It is currently being trained with vpred, so it will require a YAML file, and for optimal performance it needs CFG rescale too. Uses Lodestone's 3M e621 dataset, almost the entire website.)

fluffyrock-1088-megares-offset-noise-3M-SDXLVAE (Offset-noise version of the model using the 3M dataset, but experimenting with Stable Diffusion XL's VAE. Will produce bad results until more training happens.)

fluffyrock-1088-megares-offset-noise-3M (Offset-noise version of the model using the 3M dataset, without any fancy vpred, terminal SNR, or a different VAE. Fairly reliable, but may not produce results as good as the others.)

fluffyrock-1088-megares-terminal-snr-vpred (Vpred and terminal SNR version of the model using the 3M dataset. Requires a YAML file to work; installing the CFG rescale extension is recommended for optimal results.)

fluffyrock-1088-megares-terminal-snr (Terminal SNR version of the model using the 3M dataset. Fairly plug and play, but some epochs behind the others.)

fluffyrock-NoPE (Experimental model that tries to remove Stable Diffusion's 75-token limit by removing positional encoding. Uses vpred, so it will require a YAML file; again, use it with CFG rescale for optimal performance.)

OUTDATED MODELS:

csv-dump

fluffyrock-1088-megares

fluffyrock-2.1-832-multires-offset-noise

fluffyrock-2.1-832-multires

fluffyrock-832-multires-offset-noise

fluffyrock-832-multires

fluffyrock-1088-megares-offset-noise

old-768-model

old-adam-832-model

old-experimental-512-model

old-experimental-640-model

OTHER REPOS:

Polyfur: e6laion but with autocaptions, so it should be better at natural-language prompts. Vpred + terminal SNR; will require a YAML and should use CFG rescale.

Pawfect-alpha: 500k images from FurAffinity. Vpred and terminal SNR, so will require YAML and should use CFG rescale.

Artist comparison: https://files.catbox.moe/rmyw4d.jpg

Repository (GO HERE FOR DOWNLOADS): https://huggingface.co/lodestones/furryrock-model-safetensors

CivitAI page: https://civitai.com/models/92450

Artist study: https://pixeldrain.com/l/caqStmwR

Tag Autocomplete CSV: https://cdn.discordapp.com/attachments/1086767639763898458/1092754564656136192/fluffyrock.csv

Crookedtrees (Full Model)

Use crookedtrees in your prompt

0.3(acidfur_v10) + 0.7(0.5(fluffyrock-576-704-832-960-1088-lion-low-lr-e22-offset-noise-e7) + 0.5(fluffusion_r1_e20_640x_50))
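To see how much of each model ends up in a nested recipe like this, multiply the weights down the tree: acidfur_v10 gets 0.3, and each of the two inner models gets 0.7 * 0.5 = 0.35. A minimal sketch that does this for recipe strings written in this form; it assumes plain weighted-sum merges (so nesting flattens linearly) and model names without parentheses, and it doesn't understand other notations such as "5050(...)". All function names are hypothetical.

```python
import re
from collections import defaultdict

# A weight followed by an opening parenthesis, e.g. "0.3(" or " 0.7 ("
_WEIGHT = re.compile(r"\s*(\d*\.?\d+)\s*\(")
_PLUS = re.compile(r"\s*\+")
_CLOSE = re.compile(r"\s*\)")


def _parse_sum(s, pos, scale, weights):
    """sum := term ('+' term)*; returns the position after the last term."""
    pos = _parse_term(s, pos, scale, weights)
    while True:
        m = _PLUS.match(s, pos)
        if not m:
            return pos
        pos = _parse_term(s, m.end(), scale, weights)


def _parse_term(s, pos, scale, weights):
    """term := WEIGHT '(' (sum | model_name) ')'"""
    m = _WEIGHT.match(s, pos)
    if not m:
        raise ValueError(f"expected a weight like '0.3(' at position {pos}")
    weight = float(m.group(1)) * scale
    pos = m.end()
    if _WEIGHT.match(s, pos):
        # Nested recipe: its inner weights get scaled by this term's weight.
        pos = _parse_sum(s, pos, weight, weights)
    else:
        # Leaf: a model name, which must not itself contain parentheses.
        end = s.index(")", pos)
        weights[s[pos:end].strip()] += weight
        pos = end
    m = _CLOSE.match(s, pos)
    if not m:
        raise ValueError(f"expected ')' at position {pos}")
    return m.end()


def effective_weights(recipe: str) -> dict[str, float]:
    """Flatten a nested weighted-sum recipe into one weight per model."""
    weights = defaultdict(float)
    pos = _parse_sum(recipe, 0, 1.0, weights)
    if recipe[pos:].strip():
        raise ValueError(f"unexpected trailing text: {recipe[pos:]!r}")
    return dict(weights)


if __name__ == "__main__":
    recipe = ("0.3(acidfur_v10) + 0.7(0.5(fluffyrock-576-704-832-960-1088-lion-low-lr-e22-offset-noise-e7)"
              " + 0.5(fluffusion_r1_e20_640x_50))")
    for name, weight in effective_weights(recipe).items():
        print(f"{weight:.2f}  {name}")
```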

Monstermind (Style)

Use mmind and argon_vile in your prompt, on_back, high-angle_view, etc. Ghost_hands and disembodied_hand are hit or miss.

BB95 Furry Mix

v14.0: This version improves fur and should generate better bodies.

This version has a baked in VAE. You don't need to download the VAE files

V10.0 RELEASED: This version can generate at higher resolutions than v9 with fewer mistakes. More realistic, better fur, better clothes, better NSFW!

This version has a baked in VAE.

Please consider supporting me so I can continue to make more models --> https://www.patreon.com/BB95FurryMix

Don't forget to join the Furry Diffusion discord server --> https://discord.gg/furrydiffusion

Since v3, this model uses e621 tags.

This model is a mix of various furry models.

It's doing well on generating photorealistic male and female anthro, SFW and NSFW.

I HIGHLY recommend using Hires Fix for better results.

Below is an example prompt for v2 through v7.

Positive:

anthro (white wolf), male, adult, muscular, veiny muscles, shorts, tail, (realistic fur, detailed fur texture:1.2), detailed background, outside background, photorealistic, hyperrealistic, ultradetailed,

Negative:

I recommend using boring_e621; you can add bad-hands-v5 if you want.

Settings:

Steps: 30-150

Sampler: DDIM or UniPC or Euler A

CFG scale: 7-14

Size: from 512x512 to 750x750 (only v4/v5/v6/v7)

Denoising strength: 0.6

Clip skip: 1

Hires upscale: 2

Hires steps: 30-150

Hires upscaler: Latent (nearest)

Furtastic V2.0

Description: https://files.catbox.moe/cr137n.png

Put embeddings in \stable-diffusion-webui\embeddings, and use the filenames as a tag in the negative prompt.

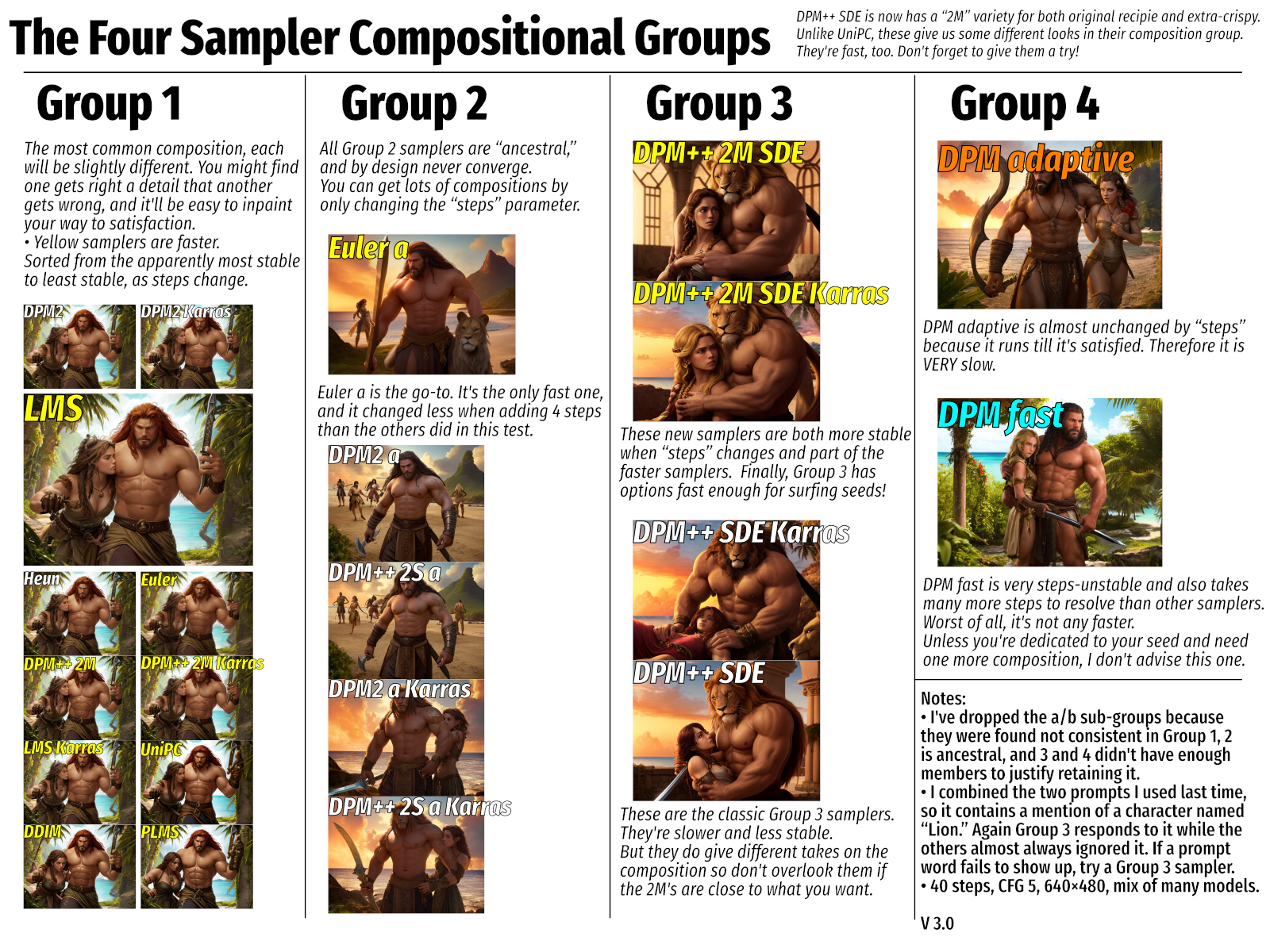

EasyFluff

Description:

A Vpred model, you need both a safetensor and an accompanying yaml file. See here for more info: https://rentry.org/trashfaq#how-do-i-use-vpred-models
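As far as I know, the WebUI only picks up the config when the .yaml sits next to the checkpoint with the same base name (e.g. `easyfluff_v11.2.safetensors` + `easyfluff_v11.2.yaml`; these filenames are just examples). A small sketch that lists checkpoints in a folder with no matching .yaml. Only vpred models need one, so treat the output as a checklist rather than a list of errors; `missing_yamls` is a made-up name.

```python
import sys
from pathlib import Path


def missing_yamls(models_dir) -> list[str]:
    """Checkpoint filenames in models_dir with no same-named .yaml next to them."""
    folder = Path(models_dir)
    checkpoints = sorted(folder.glob("*.safetensors")) + sorted(folder.glob("*.ckpt"))
    # with_suffix swaps only the final extension: "model_v1.2.safetensors" -> "model_v1.2.yaml"
    return [c.name for c in checkpoints if not c.with_suffix(".yaml").exists()]


if __name__ == "__main__":
    folder = sys.argv[1] if len(sys.argv) > 1 else "models/Stable-diffusion"
    for name in missing_yamls(folder):
        print("no yaml:", name)
```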

What are Fun/Funner Editions?

Tweaked the UNET with SuperMerger's adjust option to dial back noise/detail, which can resolve eye sclera bleed in some cases.

Adjusted contrast and color temperature. (Less orange/brown by default)

CLIP should theoretically respond more to natural language. (Don't conflate this with tags not working or having to use natural language. Also it is not magic, so don't expect extremely nuanced prompts to work better.)

FunEdition and FunEditionAlt are earlier versions before adjusting the UNET further to fix color temperature and color bleed. CLIP on these versions may be less predictable as well.

Indigo Furry mix

https://civitai.com/models/34469?modelVersionId=167882

Various different model mixes with varying styles.

All models are baked with VAE, but you can use your own VAE.

Of the many versions uploaded, I will provide direct links to the following recommended models (as of Feb 17th 2024):

Test V-Pred Model:

Hybrid/General Purpose:

v105:

v90:

v75:

v45:

Anime:

v100:

v85:

v70:

v55:

Realistic:

v110:

v95:

v80:

v65:

v50:

FluffyBasedKemonoMegaresE71

Queasyfluff

I'd recommend setting CFG rescale down to around 15-35 and maybe prompt high contrast or vibrant colors.

Higher CFG rescale tends to bleach colors.
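For reference, CFG rescale as described by Lin et al. ("Common Diffusion Noise Schedules and Sample Steps are Flawed") first does normal CFG, then rescales the result so its standard deviation matches the conditional prediction's, and finally blends the two with a factor phi. Below is a minimal sketch on flat lists of floats; the extension's actual code may differ in details, and I'm assuming the 15-35 above corresponds to phi = 0.15-0.35.

```python
import statistics


def cfg_rescale(cond, uncond, cfg_scale, phi):
    """CFG followed by std rescaling, blended by phi (0 = plain CFG, 1 = fully rescaled)."""
    # 1. Plain classifier-free guidance
    guided = [u + cfg_scale * (c - u) for c, u in zip(cond, uncond)]
    # 2. Scale the guided prediction so its std matches the conditional prediction's std
    factor = statistics.pstdev(cond) / statistics.pstdev(guided)
    rescaled = [g * factor for g in guided]
    # 3. Blend the rescaled and plain CFG outputs
    return [phi * r + (1 - phi) * g for r, g in zip(rescaled, guided)]
```

Higher phi pulls the output's spread further toward the conditional prediction's, which is the overexposure from high CFG that the paper is trying to rein in.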

People keep asking what's in the mix so:

QuEasyFluff (regret this name already) is an EasyFluff TrainDifference merge of easyfluff10-prerelease with a custom block-merged non-furry realism model I made some time back, which was made by merging:

HenmixReal_v30

EpicRealism_PureEvolutionV3

LazymixRealAmateur_v10

For added realism, try using Furtastic's negative embeddings (found here).

Queasyfluff V2

What's different about this version?

Fixed colors and CFG rescale issue

Follows directions a bit better

That's about it. It just does 3D and realism better than base EasyFluff, but still does drawn styles great too.

EF10-prerelease based: https://pixeldrain.com/u/sfB3fC58

EF11.2 based: https://pixeldrain.com/u/HLALVhng

Yamls: https://pixeldrain.com/u/ZCg93wph

0.7(Bacchusv31)+0.3(5050(BB95v11+Furtastic2))-pruned.safetensors

BBroFurrymix V4.0

Courtesy of an anon from /b/

Dream Porn (Mix)

That's a custom frankenstein mix I made.

Can't remember exactly whats in there.

r34

dgrademix

dreamlike photoreal

???

https://pixeldrain.com/u/ZiB5vT28

SeaArt Furry XL 1.0

DDL: https://civitai.com/api/download/models/437061?type=Model&format=SafeTensor&size=full&fp=fp16

VAE: https://civitai.com/api/download/models/437061?type=VAE&format=SafeTensor

(The VAE is the "usual" sdxl_vae.safetensors; if you've already used an SDXL model before, you won't need to download it again.)

Prompt Structure:

The model was trained with a specific calibration order: species, artist, image detail, quality hint, image nsfw level. It is recommended to construct prompts following this order for optimal results. For example:

Prompt input: "canid, canine, fox, mammal, red_fox, true_fox, foxgirl83, photonoko, day, digitigrade, fluffy, fluffy_tail, fur, orange_body, orange_fur, orange_tail, solo, sunlight, tail, mid, 2018, digital_media_(artwork), hi_res, masterpiece"
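A tiny sketch that assembles a prompt in the recommended order from tags you've grouped yourself. The category keys are my own labels for the order above (species, artist, image detail, quality hint, NSFW level), not anything the model defines, and `build_prompt` is a made-up name.

```python
# Recommended order from the model card; the keys are hypothetical labels.
CATEGORY_ORDER = ("species", "artist", "detail", "quality", "nsfw")


def build_prompt(tags_by_category: dict[str, list[str]]) -> str:
    """Join tags as comma-separated text, category by category, in CATEGORY_ORDER."""
    unknown = set(tags_by_category) - set(CATEGORY_ORDER)
    if unknown:
        raise ValueError(f"unknown categories: {sorted(unknown)}")
    ordered = []
    for category in CATEGORY_ORDER:
        ordered.extend(tags_by_category.get(category, []))
    return ", ".join(ordered)


if __name__ == "__main__":
    print(build_prompt({
        "quality": ["hi_res", "masterpiece"],
        "artist": ["foxgirl83", "photonoko"],
        "species": ["canid", "canine", "fox", "red_fox"],
        "detail": ["day", "fluffy_tail", "solo"],
    }))
```

The dict order you type doesn't matter; the output always follows CATEGORY_ORDER.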

Species and Character Calibration:

We have provided a series of nouns for main species calibration such as mammals, birds, and have repeatedly trained on specific furry characters. This helps in generating more accurate character images.

Quality Hints:

The model supports various levels of quality hints, from "masterpiece" to "worst quality". Be aware that "masterpiece" and "best quality" may lean towards nsfw content.

Artwork Timing:

To get images in the style of specific periods, you can use time calibrations like "newest", "late", "mid", "early", "oldest". For instance, "newest" can be used for generating images with the most current styles.

Recommended Image Sizes:

For best quality images, it is recommended to generate using one of the following sizes: 1024x1024, 1152x896, 896x1152, etc. These sizes were more frequently used in training, making the model better adapted to them.

| Dimensions | Aspect ratio |
| --- | --- |
| 1024 x 1024 | 1:1 (square) |
| 1152 x 896 | 9:7 |
| 896 x 1152 | 7:9 |
| 1216 x 832 | 19:13 |
| 832 x 1216 | 13:19 |
| 1344 x 768 | 7:4 (horizontal) |
| 768 x 1344 | 4:7 (vertical) |
| 1536 x 640 | 12:5 (horizontal) |
| 640 x 1536 | 5:12 (vertical) |
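All of these sizes come out to roughly one megapixel (1024 x 1024 = 1,048,576 pixels). If you have a target aspect ratio in mind, a small sketch like this picks the closest listed size; comparing ratios on a log scale treats wide and tall images symmetrically. The helper names are hypothetical.

```python
from math import gcd, log

# Sizes from the table above, as (width, height)
BUCKETS = [(1024, 1024), (1152, 896), (896, 1152), (1216, 832), (832, 1216),
           (1344, 768), (768, 1344), (1536, 640), (640, 1536)]


def ratio(width: int, height: int) -> str:
    """Reduce width:height to lowest terms, e.g. 1216x832 -> '19:13'."""
    g = gcd(width, height)
    return f"{width // g}:{height // g}"


def closest_bucket(target_w: float, target_h: float) -> tuple[int, int]:
    """Listed size whose aspect ratio is closest to target_w:target_h (log scale)."""
    target = log(target_w / target_h)
    return min(BUCKETS, key=lambda wh: abs(log(wh[0] / wh[1]) - target))


if __name__ == "__main__":
    for w, h in BUCKETS:
        print(f"{w} x {h}  {ratio(w, h):>5}  {w * h / 1e6:.2f} MP")
    print(closest_bucket(16, 9))  # (1344, 768)
```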

SeaArt + Autismmix Negative LoRA

https://civitai.com/models/421889

SeaArt: https://civitai.com/api/download/models/470089?type=Model&format=SafeTensor

Autismmix: https://civitai.com/api/download/models/475811?type=Model&format=SafeTensor

Inspired by the popular Boring_e621 negative embedding https://civitai.com/models/87781?modelVersionId=94126 , this is a negative LORA trained on thousands of images across years of data from different boorus with 0 favorites, negative scores, and/or bad tags like "low quality." Therefore putting it in the Negative Prompt tells the AI to avoid these things, which results in higher quality and more interesting images.

Pros:

-Generally increases quality which means more details, better depth with more shading and lighting effects, brighter colors and better contrast

Could be Pro or Con depending on what you want:

-Tends to generate more detailed backgrounds

-Tends towards a more detailed or even more realistic look

Cons:

-Many of the training images were low-resolution sketches or MSPaint-style doodles; if you are trying to generate sketches or doodle-style work, putting this in the negatives may be detrimental

-Many of the training images were black and white sketches or otherwise monochrome/grayscale; if you are trying to generate images without color, putting this in the negatives may be detrimental

-Accidentally putting this in the positive prompt instead of the negative prompt reduces the quality of images

Test images were made using a weight of 1 with only this LoRA in the negative prompt. You can adjust the weight of the LoRA to change the impact; however, in my testing the impact of different weights was minimal.

The first version uploaded "boring_SDXL_negative_LORA_SeaArtXL_v1" was trained on the Sea Art XL model (https://civitai.com/models/391781/seaart-furry-xl-10) and is intended to be used with that model. A version for AutismMix SDXL (https://civitai.com/models/288584?modelVersionId=324524) is currently being trained. Please feel free to request if you want a version trained specifically for any other SD XL model.

Compassmix XL Lightning

https://civitai.com/models/498370/compassmix-xl-lightning

DDL: https://civitai.com/api/download/models/553994?type=Model&format=SafeTensor&size=full&fp=fp16

Indigo Furry Mix XL

https://civitai.com/models/579632?modelVersionId=646486

V1.0 DDL: https://civitai.com/api/download/models/646486?type=Model&format=SafeTensor&size=pruned&fp=fp16

This is a (test) XL model based on Pony XL with a few modifications; it can react to some artist tags thanks to SeaArt Furry XL being mixed in. It is mainly for anime bara kemono content, but should be fine for all content.

For v1.0:

Using 'score' tags or 'zPDXL' embeddings is optional: with score tags it generates kemono-style (Japanese furry) images, without them it generates western-style images (the common ones on e621).

Prompt length can significantly affect the style and the effect of score tags.

Can react to some artist tags.

Optional style tags: 'by mj5', 'by niji5', 'by niji6'.

Sometimes output images can be too yellow with score tags. (especially with short prompts)

Shixmix_QueasyIndigo SD1.5

https://pixeldrain.com/u/Es8VrAyD -model

https://pixeldrain.com/u/v8gi82uy -yaml

Galleries

FluffAnon's Generations

Yttreia's Stuff

Quad-Artist combos

250 hand-picked quad-artist combos, out of ~2800, each genned with 6 different scenarios for a total of 1500 raw gens.

Best viewed by resizing your window so that each row has 6 (or a multiple of it) images.

Artist list as a .txt: https://files.catbox.moe/7ky7fb.txt

SeaArt Artist Combination Examples

https://mega.nz/folder/UvZg1ZiR#MXc-Ax86OLTC4WKUgm9mcA

I made a triple roll with SeaArtXL for 363 artists, based on the prompt used by the anon that posted the bunnies in the last thread. All 1089 gens can be found here:

There's a .txt file at the end with the artist list.

As usual it's best viewed by resizing the window so that there are a multiple of three images per row.

PonyXL LoRAs made by /h/

Basically just made a python script to download all the LoRAs in this rentry: https://rentry.org/ponyxl_loras_n_stuff . There's a powershell script in there that also downloads everything, but I'm on Linux which doesn't run that natively. Python is just more accessible in my opinion.

Catboxed them here if you want to add them:

https://files.catbox.moe/ujz8p4.txt

https://files.catbox.moe/c63lpj.py

The txt is just a list of the urls with the artist they correspond to. The .py file reads the .txt and downloads everything in the text file. Anyone can edit the txt file to add or remove LoRAs if you're downloading a large batch.

Strictly speaking, this script works for more than just this rentry. It can basically just download any number of files from a list of URLs.
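The catboxed script isn't reproduced here; below is an independent minimal sketch of the same idea using only the Python standard library. It assumes one entry per line with the URL as the last whitespace-separated token (anything before it is treated as the artist label) and skips blank lines and lines starting with #, so the real .txt format may need a different parser. Files are named after the last part of the URL path, which may not be a useful name for API-style links. Function names are hypothetical.

```python
import sys
import urllib.request
from pathlib import Path
from urllib.parse import urlparse


def parse_list(text: str) -> list[tuple[str, str]]:
    """(label, url) pairs; assumes the URL is the last token on each line."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        *label, url = line.split()
        if url.startswith(("http://", "https://")):
            entries.append((" ".join(label), url))
    return entries


def download_all(entries, out_dir) -> None:
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for label, url in entries:
        name = Path(urlparse(url).path).name or "download"
        target = out / name
        if target.exists():  # lets you re-run the script after an interrupted batch
            print(f"skip {name}")
            continue
        print(f"get {label or name}: {url}")
        urllib.request.urlretrieve(url, target)


if __name__ == "__main__":
    list_file, out_dir = sys.argv[1], sys.argv[2]
    download_all(parse_list(Path(list_file).read_text(encoding="utf-8")), out_dir)
```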

LORAs from the Discord

Various Characters (FinalEclipse's Trash Pile)

BulkedUp

Here is the link to the LoRA, model formula, training dataset, and images of the examples:

Protogens

Link:

Mr. Wolf (The Bad Guys)

Wizzikt

beeg wolf wife generator (Sligarthetiger)

Cervids

Various (Penis Lineup, Kass, Krystal, Loona, Protogen, Puro, Spyro, Toothless)

Puffin's LoRAs

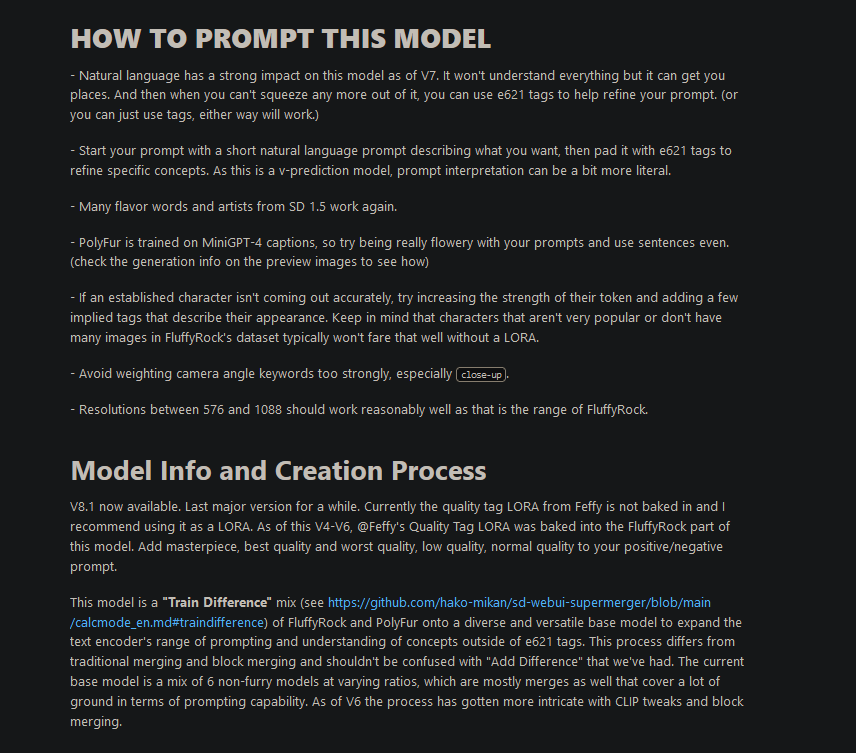

Pic taken 2023/05/16

Looking them over, some of these are likely the same ones posted before ITT, currently filed under "Birds" up above. Gonna leave it up, for posterity's sake.

Some of these are Lycoris files; check out this extension if you encounter problems.

Cynfall's LoRAs

https://mega.nz/folder/DRI0RY4Q#g1IJ7Ch1hM6-sAG7dGkJ7g

Feral on Female

Valstrix's Gathering Hub (Monster Hunter and more)

Slugcats (RainWorld)

From: https://civitai.com/models/94795/slugcats-rainworld-wip

https://civitai.com/api/download/models/101116?type=Model&format=SafeTensor

AnonTK's LoRA Repository (TwoKinds LoRAs)

https://mega.nz/folder/DtFz1IbQ#wZJFX0aYEL4rKwBBmgyaZQ

Tom Fischbach Style LoRA - PDXL

https://mega.nz/folder/oXZHwAIb#LarZqlfkp9Zr45suaZkRZw

Assorted Random Stuff

Artist comparisons

PDV6XL Artist Tag Comparison

Samples made using https://civitai.com/models/317578/pdv6xl-artist-tags

https://mega.nz/folder/YXcEgBCK#Jydfj9qF9IXyCjWhfw8rnQ

Autismmix XL Artist/Hashes Comparison

https://mega.nz/folder/x25QyQhL#MWpbJfSDIBo6dpn9APpGVQ

PDXL Artist/Hashes Comparison

https://mega.nz/folder/ErBSQR7A#MPUWcWy9bA9QEJzn0unM-Q

SeaArt Artist Comparison

https://mega.nz/folder/MrgAiA6J#bt6z2MnMhcWsUVKoSpKuQg

SeaArt Furry XL SDXL Base Artist Comparison:

https://rentry.org/sdg-seaart-artists

Yiffmix v52 Artist Tests: https://mega.nz/folder/JgRG2a7L#rL9o48_vVxere2lwQjShUg

Working artists Easyfluff Comparison V1

I regenned my artist collection word document thing because I figured I could with dynamic prompts.

Also there's more artists now thanks to 4 artist combo anon providing them.

I think this will be the final version for a while, details of further improvements and changes outlined in the PDF.

V1 Males: https://www.mediafire.com/file/g7y2i8u239k9zut/Working_artists_redemption_arc_edition.pdf/file

V1 Females: https://www.mediafire.com/file/mbg5rk9c34h243y/Working_artists_cooties_edition.pdf/file

V1 Base SD Artists: https://www.mediafire.com/file/mnqv47mgji4baa4/Working_artists_real_artists_edition.pdf/file

Working artists Easyfluff Comparison V2 (up to 28725 different e621 based artist previews)

Explanatory Memorandum: https://www.mediafire.com/file/ymxqexl6r3nl68s/Working_artists_explanatory_memorandum.pdf/file

I have generated images using a list of 28725 artist tags from the e621 csv file which came with the dominikdoom's tagcomplete extension.

Because of the strain making these puts on my system, the images have been split into 9 PDF files with 3192 images each. I find this also keeps them relatively light on the client side, resource-wise.

The images have been placed in descending order based on how many images that artist had tagged to them on e621 at the time the csv file was made. The artist prompts used and the amount of tagged images is displayed above each picture. This text is selectable for convenient copy+pasting.

The parameters used for these guides will be placed in the PDFs.

https://www.mediafire.com/folder/3kv4l4l3c6sgq/SD+Artist+Prompt+Resources

(Not embedded due to filesize)

Comparison of Base SD-Artist - Furry artist combos (done on an older furry model, likely YiffAnything)

thebigslick/syuro/anchee/raiji/redrusker/burgerkiss/blushbrush Prompt Matrix comparison (done on a Fluffyrock-Crosskemono 70/30 merge)

Vixen in Swimsuit artist examples (Model: 0.3(acidfur_v10) + 0.7(0.5(fluffyrock-576-704-832-960-1088-lion-low-lr-e22-offset-noise-e7) + 0.5(fluffusion_r1_e20_640x_50)) .safetensors) (DL link can be found above)

Artist examples using Toriel as an example (0.5 (0.7fluffyrock0.3crosskemono) + 0.5 fluffusion)

Big artist comparison

EasyFluff Comparisons

https://rentry.org/easyfluffcomparison/

Easyfluff V10 Prerelease

There are artist comparisons and they are all nice and stuff but I was curious how scalies would turn out.

Here are some of a dragoness, if anyone cares.

I went with detailed scales and background.

Unfortunately, I had FreeU turned on, so it's not going to be perfect for all of you.

They are broken up at a more or less random spot to keep them from getting insanely huge.

I haven't examined them all yet. Just thought I'd share.

https://mega.nz/folder/tHMTkDxZ#ga3iHKb_7AHpgzSH2YDGfg

EasyFluff V11.2 Comparison

0.6(fluffyrock-576-704-832-960-1088-lion-low-lr-e209-terminal-snr-e182) + 0.4(furtasticv20_furtasticv20) Comparison

https://pixeldrain.com/u/xUVfbjdc

https://files.catbox.moe/5ylrek.pdf

Ponydiffusionv6 XL Artist Comparison

Nabbed an anon's artist list from /h/ and put together some grids for PDXL. Gonna do one for anime girls later, and maybe run the EF artist list I got just to see what does and doesn't work.

https://mega.nz/folder/kvwQHLiA#fmI-1cgoCagt3vBEhHudag

Autismmix Artist Comparison

Went ahead and put together an xyz spreadsheet for autismmix of all the artists from a txt file posted some threads ago

https://mega.nz/folder/x25QyQhL#MWpbJfSDIBo6dpn9APpGVQ

CompassMix artist mixes

So far it's just 55 mixes, split between two folders: one with plain triple mixes and one with weighted quadruple mixes.

https://mega.nz/folder/EmB1nR5Q#99x_PAvjw5L5a1FcDzpApg

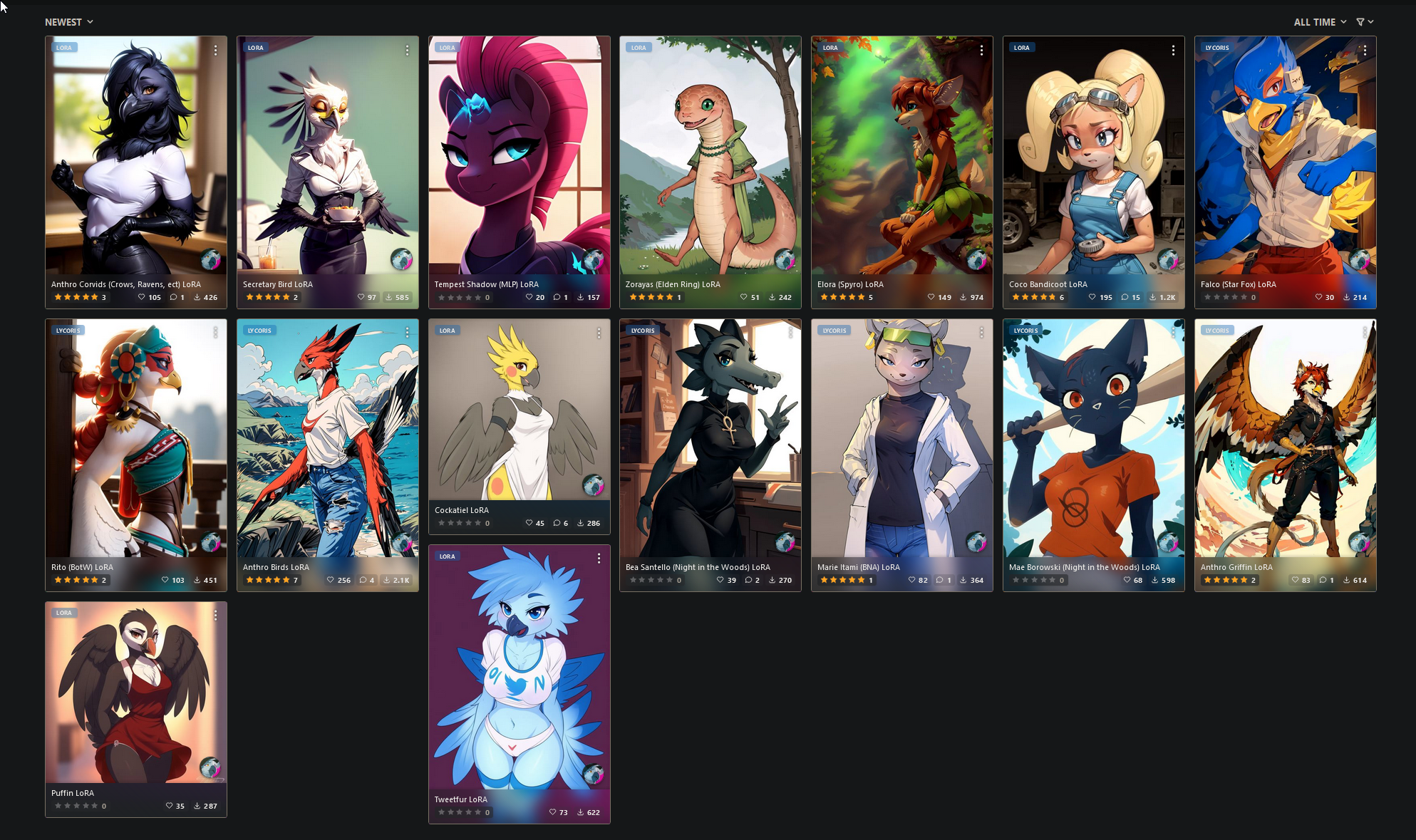

Different LORA sliders - what do they mean?

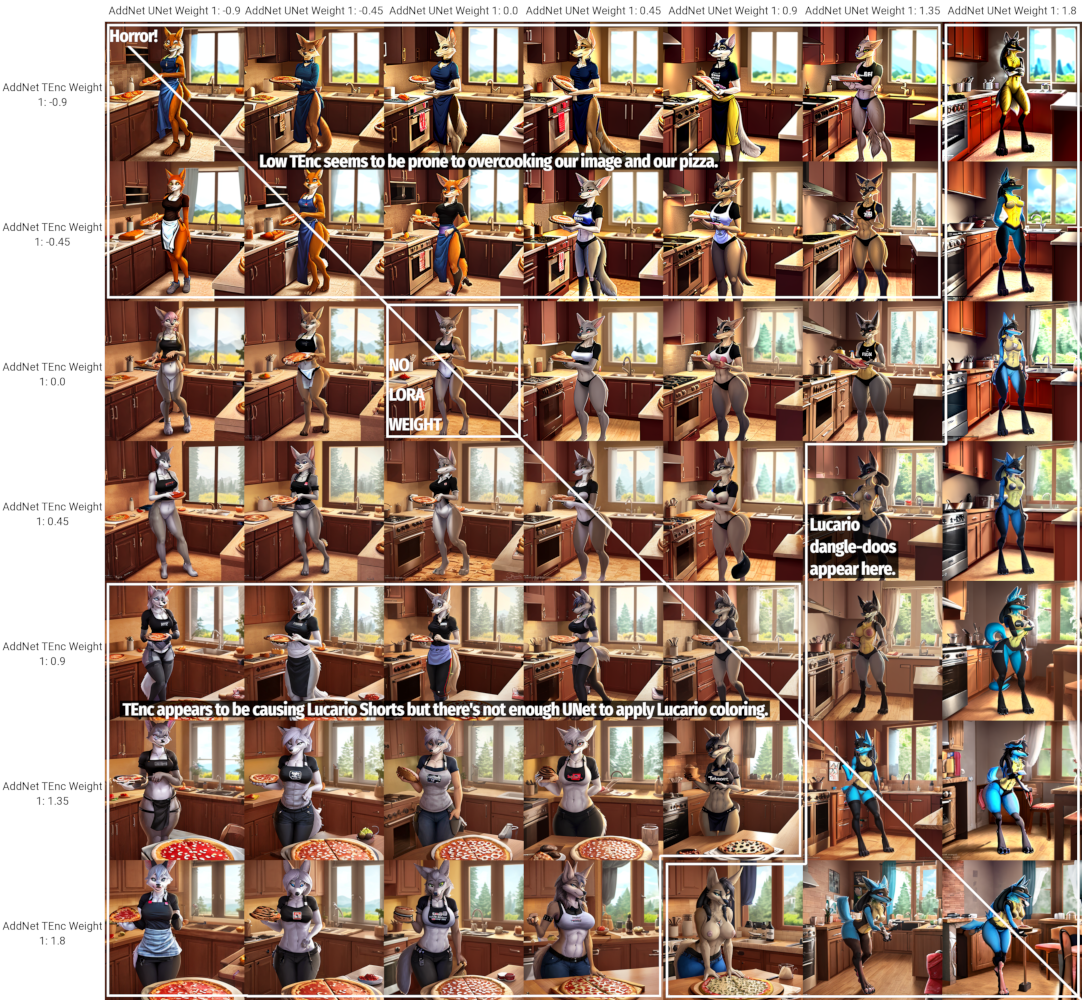

What about samplers?

Script for comparing models

54395615

Okay, thanks? What am I supposed to do with this?

Wildcards

Use either the Wildcard or Dynamic prompts extensions!

List of wildcards: https://rentry.org/NAIwildcards

Dynamic prompts wildcards: https://github.com/adieyal/sd-dynamic-prompts/tree/main/collections

(These work even without the Dynamic Prompts extension if you prefer the older Wildcards one; just grab the .txt files.)
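Both extensions replace a `__name__` token in your prompt with a random line from `name.txt` in their wildcards folder. A minimal sketch of that substitution, just to show what happens under the hood; the function names are hypothetical, and the real extensions support a lot more (subfolders, variant syntax, etc.) than this does.

```python
import random
import re
from pathlib import Path

# __name__, matched non-greedily so "__a__ __b__" stays two separate tokens
WILDCARD = re.compile(r"__([\w-]+?)__")


def load_wildcards(folder) -> dict[str, list[str]]:
    """Map each .txt file's stem to its non-empty lines."""
    return {
        p.stem: [line.strip() for line in p.read_text(encoding="utf-8").splitlines() if line.strip()]
        for p in Path(folder).glob("*.txt")
    }


def expand_wildcards(prompt: str, wildcards: dict[str, list[str]], rng=random) -> str:
    """Replace each __name__ with a random entry; unknown names are left untouched."""
    def pick(match: re.Match) -> str:
        options = wildcards.get(match.group(1))
        return rng.choice(options) if options else match.group(0)

    return WILDCARD.sub(pick, prompt)


if __name__ == "__main__":
    cards = load_wildcards("wildcards")
    print(expand_wildcards("__species__, solo, __pose__", cards, random.Random(42)))
```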

Most modern models were trained on the majority if not all of E621.

You can grab a .csv containing all e621 tags from https://e621.net/db_export/ and filter for Category 1.
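A minimal sketch of that filtering step, assuming the tags export is a gzipped CSV with `id,name,category,post_count` columns (check the header of the file you actually download). Output is sorted by post count, like the lists below. Function names are hypothetical.

```python
import csv
import gzip
import sys

ARTIST_CATEGORY = "1"  # e621's artist tag category


def artists_by_post_count(lines, min_posts: int = 0) -> list[tuple[str, int]]:
    """(name, post_count) for artist tags, most-posted first."""
    artists = [
        (row["name"], int(row["post_count"]))
        for row in csv.DictReader(lines)
        if row["category"] == ARTIST_CATEGORY and int(row["post_count"]) >= min_posts
    ]
    artists.sort(key=lambda item: item[1], reverse=True)
    return artists


def read_export(path: str, min_posts: int = 0) -> list[tuple[str, int]]:
    # "rt" opens the .gz in text mode so csv can read it directly
    with gzip.open(path, "rt", encoding="utf-8", newline="") as f:
        return artists_by_post_count(f, min_posts)


if __name__ == "__main__":
    for name, count in read_export(sys.argv[1], min_posts=50):
        print(name)  # one artist per line, ready to use as a wildcard file
```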

Here is an artist listing of the entirety of e621, sorted by number of posts (dated mid Oct. 2023): https://files.catbox.moe/mjs8jh.txt

All artists from fluffyrock.csv sorted by number of posts: https://files.catbox.moe/vtch6n.txt

Pose tags: https://rentry.org/9y5vwuak

Wildcards collection: https://files.catbox.moe/lwh0fx.7z

Species Wildcards Collection: https://rentry.co/4sy6i33r

Huge Wildcard Collection sorted by artist types, poses, media etc.: https://mega.nz/folder/UBxDgIyL#K9NJtrWTcvEQtoTl508KiA/folder/pJR0mLjb

Pony Diffusion XL V6 Wildcards:

e621: https://files.catbox.moe/icf7ak.txt

Danbooru: https://files.catbox.moe/k2pgw2.txt

PDXL V6 artist tags with at least moderate effect on gens

https://files.catbox.moe/a1srau.zip

e621 Character Wildcard with supporting tags: https://files.catbox.moe/4k91ms.txt

OpenPose Model

"What does ControlNet weight and guidance mean?"

Img2Img examples

https://imgbox.com/g/tdpJerkXh6

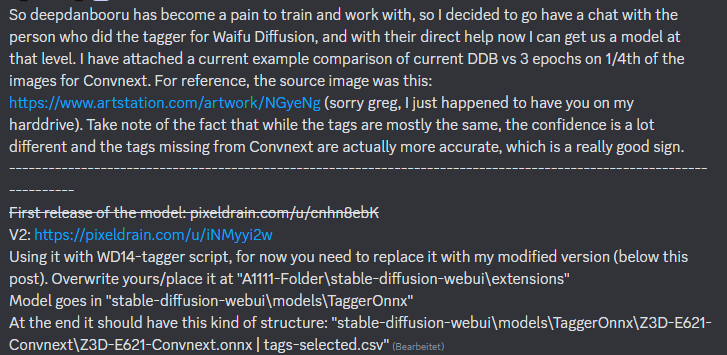

E621 Tagger Model for use in WD Tagger

!!NEW!! Zack3D's tagger model (see below) is quite old by now; Thessalo has made a newer, better model which sadly has not been adapted to WD Tagger and the like just yet.

Link to the model: https://huggingface.co/Thouph/eva02-clip-vit-large-7704/tree/main

Batch inference script for use with Thessalo's Tagger model: https://mega.nz/folder/OoYWzR6L#psN69wnC2ljJ9OQS2FDHoQ/folder/HwgngBxI

Reminder that the prior GitHub repo has been discontinued; delete the extension's folder and install https://github.com/picobyte/stable-diffusion-webui-wd14-tagger instead, which reportedly works even with WebUI 1.6.

The patch below seems to NOT BE NEEDED anymore as of Oct 17th 2023 if you are using the picobyte repo. Download only the Convnext V2 model, and place it as described.

Upscaler Model Database

Recommendations are Lollypop and Remacri. Put them in models/ESRGAN.

https://upscale.wiki/wiki/Model_Database

LoCon/LoHA Training Script / DAdaptation Guide

Script: https://files.catbox.moe/tqjl6o.json

Gallery: https://imgur.com/a/pIsYk1i

https://www.sdcompendium.com

Script for building a prompt from a lora's metadata tags

Place into your WebUI base folder. Run with the following command:

python .\loratags.py .\models\Lora\<YOURLORA>.safetensors

https://pastebin.com/S7XYxZT1
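This isn't the pastebin script, just an independent minimal sketch of the same idea using only the standard library. A .safetensors file starts with an 8-byte little-endian header length followed by a JSON header, whose optional `__metadata__` entry holds string key/value pairs. LoRAs trained with kohya's sd-scripts usually store their training tags there as `ss_tag_frequency`, a JSON string shaped like {dataset folder: {tag: count}}; LoRAs from other trainers may not have it. Function names are hypothetical.

```python
import json
import struct
import sys
from collections import Counter


def read_safetensors_metadata(path: str) -> dict[str, str]:
    """Return the __metadata__ dict from a .safetensors header (empty if absent)."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))  # u64, little-endian
        header = json.loads(f.read(header_len))
    return header.get("__metadata__", {})


def top_tags(metadata: dict[str, str], n: int = 20) -> list[str]:
    """Most frequent training tags, summed across all dataset folders."""
    raw = metadata.get("ss_tag_frequency")
    if not raw:
        return []
    counts = Counter()
    for folder_tags in json.loads(raw).values():
        counts.update(folder_tags)  # adds the per-folder counts together
    return [tag for tag, _ in counts.most_common(n)]


if __name__ == "__main__":
    print(", ".join(top_tags(read_safetensors_metadata(sys.argv[1]))))
```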

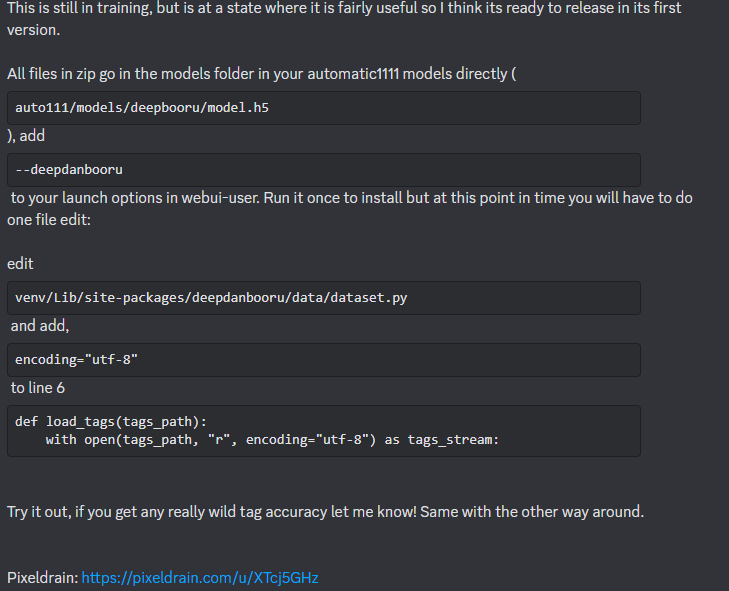

Example workflows

Text2Image to Inpaint to SD Upscale Example:

Using ControlNet to work from sketches:

Usually I start with a pretty rough sketch and describe the sketch in the prompt, along with whatever style I want. Then I'll gen until I get one that's the general idea of what I want, then img2img that a few dozen times and pick the best one out of that batch. I'll also look through all the other ones for parts that I like from each. It could be a paw here or a nose there, or even just a particular glint of light I like. I'll composite the best parts together with photoshop and sometimes airbrush in certain things I want, then img2img again. When I get it close enough to the finished product I'll do the final upscale.

Picrel is a gif of another one I've posted here that shows what these iterations can look like

I use lineart controlnet with no preprocessor (just make sure your sketch is white-lines-on-black-background or use the invert processor.) Turn the control weight down a bit. The rougher the sketch, the lower your control weight should be. Usually around 0.3-0.7 is a good range.

Couldn't you theoretically use the lineart preprocessor to turn an image into a sketch, and then make adjustments to it there if you want to add or remove features?

Good call, that's exactly what I did with part 3 of the mouse series. Mouse part is mine, the rest is preprocessor.

Tutorials by fluffscaler

Inpainting: https://rentry.org/fluffscaler

PDXL: https://rentry.org/fluffscaler-pdxl

CDTuner ComfyUI Custom Nodes

A kind anon wrote custom nodes for ComfyUI to achieve similar results as https://github.com/hako-mikan/sd-webui-cd-tuner.

https://rentry.org/r9isz

Copy the script into a text file, rename it to cdtuner.py, and put it into your custom_nodes folder inside your ComfyUI install.

Don't make the same mistake I did: save only the contents of the script. If you use Export > Raw, make sure to remove everything before the first import and after the last }.
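If you'd rather not trim by hand, a small sketch that does the same cleanup on an Export > Raw download: it keeps everything from the first line starting with `import` or `from` through the last `}`. The input filename is a placeholder and `trim_export` is a made-up name; double-check the result before dropping it into custom_nodes.

```python
import re
import sys
from pathlib import Path

# First line that starts an import statement ("import x" or "from x import y")
_FIRST_IMPORT = re.compile(r"^(?:import|from)\s", re.MULTILINE)


def trim_export(raw: str) -> str:
    """Keep raw text from the first import line through the last '}'."""
    start = _FIRST_IMPORT.search(raw)
    end = raw.rfind("}")
    if start is None or end < start.start():
        raise ValueError("no 'import ... }' span found; is this the right file?")
    return raw[start.start():end + 1] + "\n"


if __name__ == "__main__":
    # usage: python trim_export.py downloaded_raw.txt  (writes cdtuner.py)
    text = Path(sys.argv[1]).read_text(encoding="utf-8")
    Path("cdtuner.py").write_text(trim_export(text), encoding="utf-8")
```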

The nodes are now called:

SaturationTuner

ColorTuner

LatentColorTuner

I made some slight changes because the original CDTuner implementation is a bit weird.

LatentColorTuner will allow you to edit latent colors with similar sliders to CDTuner but you don't need to re-generate images all the time.

The actual ColorTuner, which is implemented almost the same way as CDTuner, is a bit of a hack job, because you can't really get the step count back out of a sampler. So rather than editing just the cond/uncond pair in the last step, it edits them at all steps. I think this leads to the changes being a bit better integrated into the images, but it's different from A1111 CDTuner.

Input and output are the same type, so you just plop it in as a middleman before the node you want it to apply to.

It gives different effects depending on where you place it.

ComfyUI Buffer Nodes

Sillytavern Character Sprites

Piko

https://files.catbox.moe/wqmnxv.rar

Tomoko Kuroki

https://files.catbox.moe/iyorf6.rar

fay_spaniel

https://files.catbox.moe/7jj4mz.rar

Elora

https://files.catbox.moe/kalun9.rar

Character sprites were made using Easyfluff v10 with a character LoRA, using ControlNet (Reference only) and changing the expression for each sprite.

Easyfluff V11.2 HLL LoRA (Weebify your gens)

A set of LoRAs trained on Dan- and Gelbooru images and Easyfluff, allowing for better non-furry gens and use of anime artists.

A guide can be found here

NAI Furry v3 random fake artist tags

NAI Diffusion Furry v3, like PDXL, no longer has usable artist tags, or at least has obfuscated them. The pastebin below has examples of fake artist tags that yield different styles.

https://pastebin.com/LAF342fY