splitcloverqr + bloatmaxx

by meatrocket meatyrocket@proton.me discord: meat.rocket

| archive | presets | prompts |

cut down more than 2x the gen time of CoT with this QR preset

latest updates

(27/6/24): New Preset 1 & Preset 2 update (CoTmaxx v3.0 & Bloatmaxx 2.0)

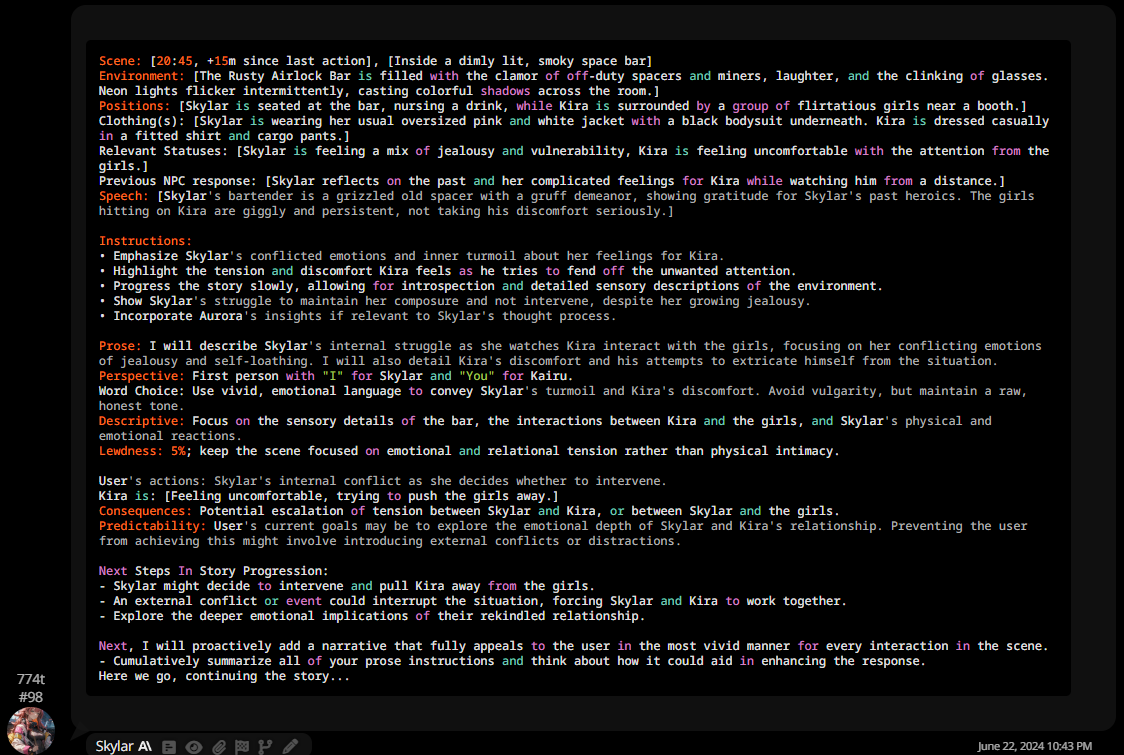

So I've been experimenting with this dual setup for a couple of days now, and I think I nailed the format for this dual setup, in order to achieve the best balance between overall quality, length, pacing, descriptive emphasis, and dialogue :

First preset only contains a CoT prompt to have gpt-4o create a <thinking> box with free reign, only letting it describe how {{char}} would respond & nothing else.

- Thankfully gpt-4o can be used for this, which to their credit, if you give it LESS instructions and giving it full reign with only a few restrictions, it does a way better job at the task than bloating the CoT with additional instructions & parameters)

Second preset contains everything else, as the CoT & tasks are mostly isolated from chat history and primarily applied for the immediate response.

This means this should work with both Claude & GPT simultaneously. Hooray for GPT.

setup

- download the QR preset by splitclover (setup at the bottom of this rentry)

- download Preset 1 (CoT v3.0) (27/06/24)

- download Preset 2 (bloatmaxx 2.0) (27/06/24)

- set Preset 1 to Claude 3.5 Sonnet

- set Preset 2 to Claude 3.0 Opus

older version

wtf is this?

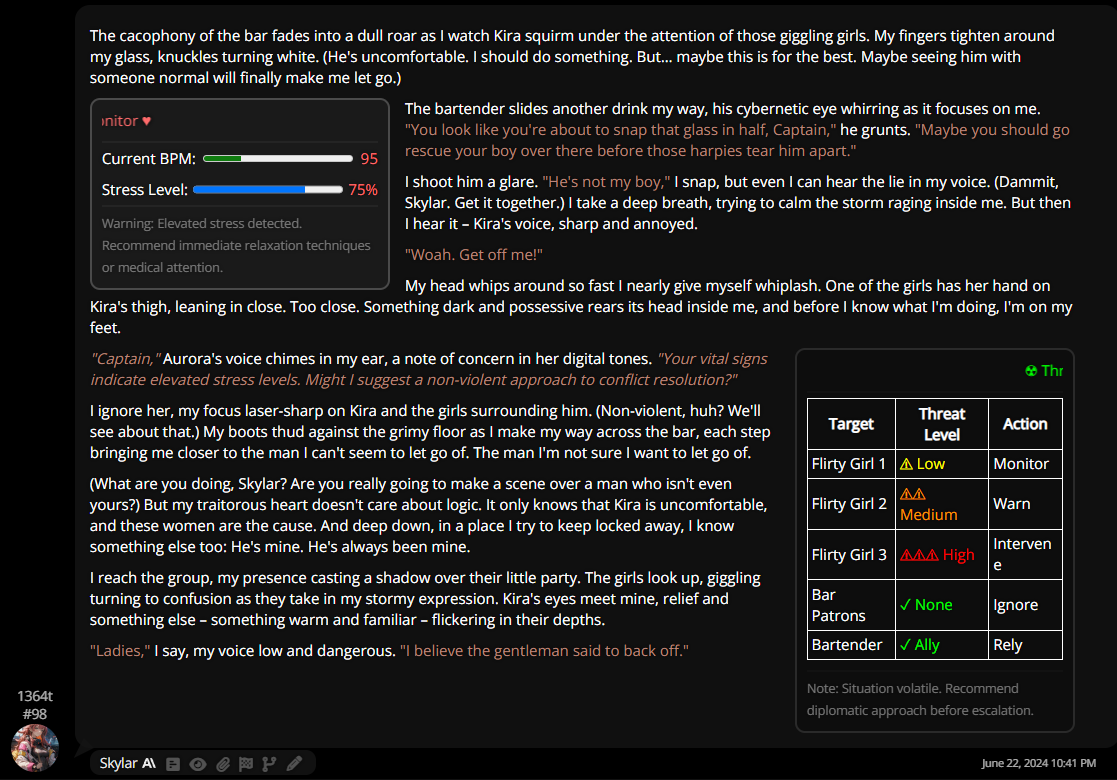

a QR preset with bloatmaxxed CoT which uses Claude 3.5 Sonnet (or gpt-4o if you are a degenerate) to generate the CoT & prefill, and finishes it with either 3.0 Opus for the rest of the response

- Preset 1 contains CoT, if you want to change the prose you can enable/add toggles here

- Preset 2 contains just the jailbreak & simple preset and if you want to bloatmaxx HTML & use other gimmick prompts

the benefits?

primarily cuts down on time generating a bulky CoT-like prompt & sends it as part of the prompt for completion with a better RP model

may also have additional benefits CoT has such as reduced repetition, improved instruction handling, spatial awareness and handling complex character definitions.

which model(s) do I use?

for the first preset, if you value speed, choose the faster model you have for the CoT task:

- Claude 3.5 Sonnet is ~30-40 tk/s, a great all rounder and can very effectively handle all kinds of scenarios.

- mistral-large & 8x22b are both decently capable and are fast as well, if you have access to these models they are quite good for this task.

- gpt-4o is ~80-95 tk/s, albeit monstrously fast and quite capable, does falter a bit in complex scenarios or involving nsfw. It isn't very capable of handling RP.

for the second preset, just choose the smartest model you have available (opus/sonnet).

how much time does each model take?

assuming the CoT = ~600 tokens, and the 2nd preset Prompt = ~1000 tokens (max, with HTML bloat) for a total of about 1600 tokens per full reply:

- mistral-8x22b > 3.5 Sonnet (~8 sec. CoT + ~25 sec. = 33 seconds total gen)

- mistral-8x22b > 3.0 Opus (~8 sec. CoT + ~50 sec. = 58 seconds total gen)

- mistral-large > 3.5 Sonnet (~11 sec. CoT + ~25 sec. = 36 seconds total gen)

- mistral-large > 3.0 Opus (~11 sec. CoT + ~50 sec. = 61 seconds total gen)

- 3.5 Sonnet > 3.0 Opus (~13 sec. CoT + ~50 sec. = 63 seconds total gen)

for mainly SFW scenarios or for speed, i can also recommend the following::

- mixtral 8x7b > 3.5 Sonnet (~2 sec. CoT + ~25 sec. = 27 seconds total gen) (via groq)

- 3.0 Haiku > 3.5 Sonnet (~8 sec. CoT + ~25 sec. = 31 seconds total gen)

- gpt-4o > 3.5 Sonnet (~7 sec. CoT + ~25 sec. = 32 seconds total gen)

- gpt-4o > 3.0 Opus (~7 sec. CoT + ~50 sec. = 57 seconds total gen)

without dual preset jb, this is how long claude would take:

- 3.5 Sonnet (~13 sec. CoT + ~25 sec. = 38 sec. total gen) (baseline CoT & prompt on same model)

- 3.0 Opus (~30 sec. CoT + ~50 sec. = 80 sec. total gen) (baseline CoT & prompt on same model)

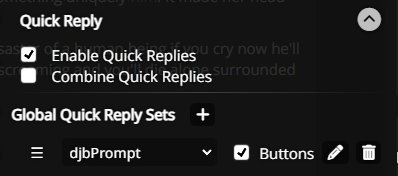

how to set up the QR-preset

- download the QR-preset, import it into the Quick Reply extensions and enable it as global chat

- download both preset json files and import them into your prompt presets

- make sure preset 1 is connected to your GPT-4o proxy and saved on that model & preset

- do the same with preset 2 but with your Claude 3.5 proxy of choice

- edit the presets to your output desire

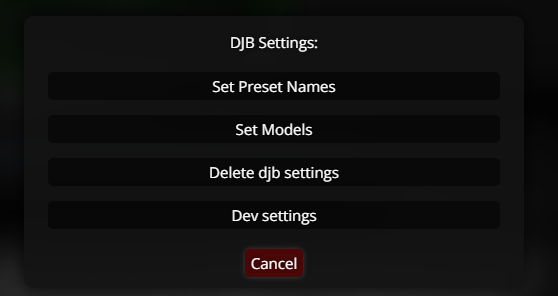

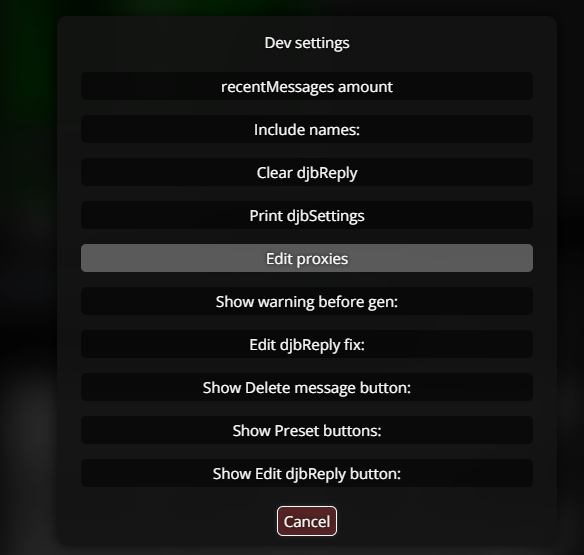

- now click the

icon in the QR menu, and set the api, model, and proxy

icon in the QR menu, and set the api, model, and proxy

- also change the names of the presets in the QR menu to preset 1 and preset 2 respectively

- to generate a reply click the

[djb] Sendbutton, if all goes well, you will see a CoT pop up first, before it disappears and Sonnet takes over with the response

special thanks to:

- splitclover (for the QR preset)

- /aicg/ anons & jbmakies for suggestions + ideas

- sturdycord for their support & proxies