HOW TO TRAIN LORAS FOR CASCADE - WITH THE RESONANCE PROTOTYPE

(Using Lora Easy Training Scripts - Custom fork, Kohya Scripts or Onetrainer)

An Unofficial Guide :: Prototype installation how-to - https://rentry.org/ehuvmf34

Remember to donate if you like the prototype; donations will help get the final product baked much faster!

Resonance Cascade Info Rentry - https://rentry.org/resonance_cascade (by TheOneAndOnlyGod from FG discord)

Resonance Model: https://rentry.org/resonancemodel

Additional Cascade resources: https://huggingface.co/stabilityai/stable-cascade/tree/main

New - Collaborative sheet for lora setting tests. Add to it freely, no sign up required: https://cryptpad.fr/sheet/#/2/sheet/edit/GPXYLlQOH+31qzlFkm4sU-cJ/

[Thanks to Dumdumfun11 from the FG discord for suggesting it!]

If you find what you consider 'optimal' lora training settings for Cascade, please share them with @b- on the Fusion Generative Collective discord https://discord.gg/pBwH4AwYzP

Easy Training Scripts

Note: This will likely be the easiest & most efficient option for most users!

UPDATE - Machina has added a toggle option to switch between lora training for the 1B and 3.6B models

Machina on the FG discord has made a branch of Easy Training Scripts that supports Cascade: https://github.com/67372a/LoRA_Easy_Training_Scripts

Install and usage instructions are available on the GitHub page

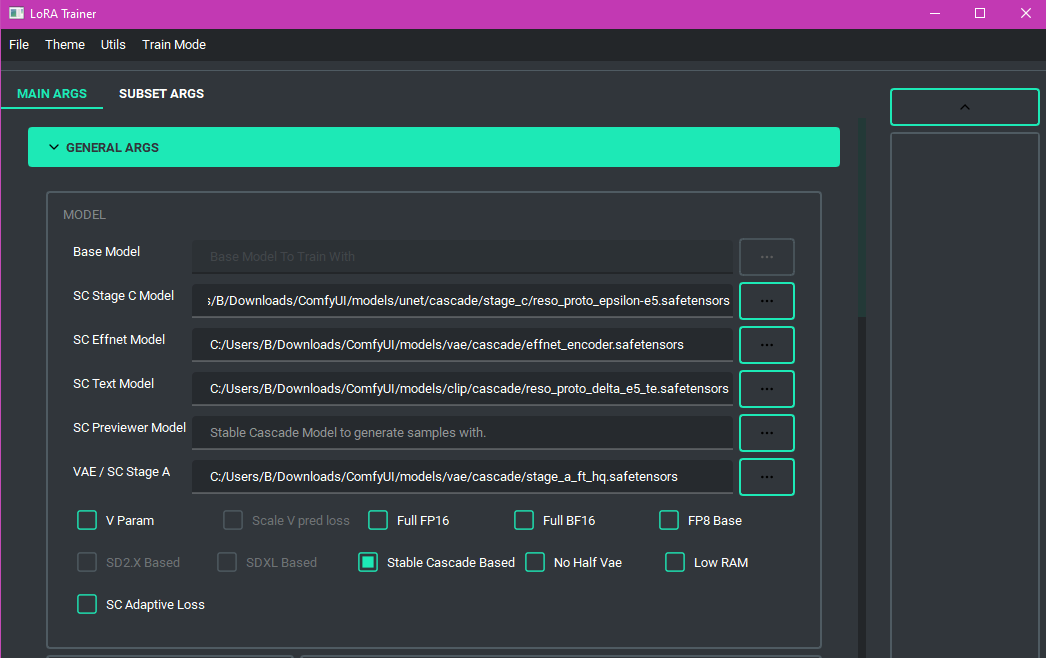

- You will need to check the 'Stable Cascade Based' checkbox to be able to fill in the necessary models, see my screenshot below if you're not sure which files to use

- You can queue training with Easy, however, if you OOM/error it will not continue down the queue. If you have limited vram or are trying new settings, I recommend testing that they will make it to the training stage successfully before leaving your queue unattended

Quote from Machina:

here is LoRA_Easy_Training_Scripts forked with support for Stable Cascade (stable-cascade branch, which I made the default). This should be considered provided as is, I cannot promise testing everything, fixing every issue encountered, or porting new features from main to stable-cascade. Some settings will not work with Stable Cascade due to it having it's own implementation, I don't believe Lycoris will work at all. Edit: Lycoris may work, might have been a local issue I was having. People can add issues to the repo or let me know via Discord and I'll try to look into them

Using Kohya Scripts

UPDATE FOR 1B TRAINING:

The 1B model/training will have the same requirements as SD 1.5, so is very accessible for low vram

To train on the 1B Cascade model, replace stable_cascade_utils.py (C:\Users\B\Downloads\kohya_ss\library\stable_cascade_utils.py) (fix courtesy of Jordach)

with:

https://files.catbox.moe/9ml16j.py

RENAME 9ml16j.py to stable_cascade_utils.py

(this replaces https://github.com/kohya-ss/sd-scripts/blob/stable-cascade/library/stable_cascade_utils.py#L99-L112 with Jordach's fix: https://files.catbox.moe/x1aw8t.png)

Note - This will remove the ability to train on 3.6B, you will need to switch it back/make a copy if you want to move between them
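If you expect to move between 1B and 3.6B training, the file swap above can be scripted so the stock file is never lost. This is only a minimal Python sketch: the function names and the .bak backup filename are made up for illustration and are not part of Kohya Scripts, and it assumes you have already downloaded the fix file.

```python
import shutil
from pathlib import Path

# Hypothetical backup name, chosen for this sketch only.
BACKUP_NAME = "stable_cascade_utils.py.3_6B.bak"


def install_1b_fix(kohya_dir, downloaded_fix):
    """Back up the stock stable_cascade_utils.py, then copy the 1B fix over it."""
    target = Path(kohya_dir) / "library" / "stable_cascade_utils.py"
    backup = target.with_name(BACKUP_NAME)
    if not backup.exists():  # never overwrite an earlier backup of the stock file
        shutil.copy2(target, backup)
    # Copying to the target path also takes care of the rename step.
    shutil.copy2(downloaded_fix, target)
    return backup


def restore_3_6b(kohya_dir):
    """Put the backed-up stock file back so 3.6B training works again."""
    target = Path(kohya_dir) / "library" / "stable_cascade_utils.py"
    shutil.copy2(target.with_name(BACKUP_NAME), target)
```

For example, install_1b_fix(r"C:\Users\B\Downloads\kohya_ss", r"C:\Users\B\Downloads\9ml16j.py") would swap in the fix, and restore_3_6b on the same folder would switch back.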

If you are already familiar with the bmaltais GUI, this process should be fairly simple. We need to run it as command lines instead of within the Gradio GUI, but the Gradio GUI can still help write 98% of those lines for you. Though you can use Kohya Scripts on its own without bmaltais, this guide will be focusing on using bmaltais for the sake of user simplicity. Overall, the results/method should be the same.

Step 1:

- Install and set up the bmaltais GUI. Instructions are available on github, but if you're still having trouble, this youtuber's video may assist: https://youtu.be/iCr9CTpV8b4?si=VOGI1xhpZMPZ92Eg&t=92

https://github.com/bmaltais/kohya_ss/

https://github.com/bmaltais/kohya_ss/tree/stable-cascade

Step 2:

- Once installed (or if already installed), make sure you switch to and pull the 'stable-cascade' branch from GitHub. If you are not familiar with this process, here is one way to do so:

- Download and install the GitHub Desktop APP (account not required)

https://docs.github.com/en/desktop/installing-and-authenticating-to-github-desktop/installing-github-desktop

- Open the GitHub Desktop APP. In the top left-hand corner, select 'File > Add local repository > Choose...'

- Select the filepath to your Kohya installation (For example, mine is: C:\Users\B\Downloads\kohya_ss), and click 'Add Repository'

- In the GITHUB Desktop APP, 'Current Repository' should say 'kohya_ss'. Beside it, for 'Current Branch', click on the drop down and select 'Other Branches > stable-cascade'

- Press the 'fetch origin' button

- If for some reason this doesn't work, up near where the 'File' button is at the top, try 'Repository > Pull'


If this step was a success, when you open your Kohya ss folder, it should now show python files such as 'stable_cascade_train_c_network' (the one we're going to use), 'stable_cascade_train_stage_c', 'stable_cascade_gen_img'
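A quick way to confirm the branch switch worked is to check for those files from Python. This is a rough sketch: the helper name is hypothetical, and the .py extension on the second and third filenames is an assumption (the guide only spells out the extension for stable_cascade_train_c_network.py).

```python
from pathlib import Path

# Script names mentioned in the guide; the .py suffix is assumed for all three.
EXPECTED_SCRIPTS = [
    "stable_cascade_train_c_network.py",
    "stable_cascade_train_stage_c.py",
    "stable_cascade_gen_img.py",
]


def missing_cascade_scripts(kohya_dir):
    """Return the expected Cascade scripts that are NOT in the kohya_ss folder."""
    root = Path(kohya_dir)
    return [name for name in EXPECTED_SCRIPTS if not (root / name).is_file()]
```

An empty list from missing_cascade_scripts(r"C:\Users\B\Downloads\kohya_ss") would suggest you are on the right branch; any names returned mean the pull probably did not go through.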

Step 3:

- Open the Kohya ss bmaltais GUI using the GUI batch file in your kohya_ss folder. The cmd.exe window that pops up should include 'Running on local URL: http://IP address:port number'. Go to that URL in your browser. (Defaults are usually one of the following - http://127.0.0.1:7860/ or http://localhost:7860/ )

Step 4:

- With the GUI gradio interface open, click on the 'Lora' tab. Pick a random source model such as xl-base (we'll be re-writing that part). Under 'folders'>Image folder, input your dataset

(Note: Kohya's formatting is such that you want this to be _base folder_, with another folder inside it being _desired repeats_folder_. Example: My dataset > 1_my dataset, would be used for a dataset with 1 repeat. Your images will be in the 1_my dataset subfolder, with My dataset being the folder path you select for 'image folder')

For the output folder, select the folder you want your lora to save to

- Here is a visual example: https://files.catbox.moe/vedifc.png
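If you are unsure whether your dataset folder follows that layout, a small Python check can list the subfolders that match the repeats_name pattern. This is just a sketch with a made-up helper name; it only looks at folder names and does not call anything from Kohya.

```python
import re
from pathlib import Path


def list_repeat_folders(image_folder):
    """Return {subfolder name: repeats} for subfolders named '<repeats>_<name>'."""
    found = {}
    for sub in Path(image_folder).iterdir():
        match = re.match(r"^(\d+)_(.+)$", sub.name)
        if sub.is_dir() and match:
            found[sub.name] = int(match.group(1))
    return found
```

With the example above, pointing it at the My dataset folder should report the 1_my dataset subfolder with 1 repeat. An empty result means the folder you picked for 'image folder' has no correctly named subfolder inside it.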

Step 5:

- Head on over to the 'Parameters' tab. Here, you can fill in the LORA training settings you want to try*.

(Suitable settings for CASCADE prototype loras are still pending - however, a Civitai user did an in-depth exploration of base Cascade settings using Onetrainer. These may prove a useful starting point: https://civitai.com/articles/4253/stable-cascade-lora-training-with-onetrainer )

* Note: Some setting params will cause an error when attempting to bake. The stable-cascade branch on GitHub, in particular, makes note that certain noise-related params will not work or will throw errors. I found:

- I had to remove ARGS=block dims[ ] in my settings

- I had to remove the "[ ]" brackets from betas=[0.xx,0.xx] in my settings

- Certain param terms may not directly translate, especially if you forget to click on the 'Lora' tab in Kohya GUI (For example: you may see '--learning_rate_te', this must be changed to '--text_encoder_lr'. Likewise, Cascade lora training only uses one text encoder, so if you see 'learning_rate_te2', remove it)

- Sometimes, especially if you forget to click on the 'Lora' tab in the Kohya GUI, the command print will come out without '--network_module=networks.lora' '--network_alpha=""' '--network_dim=""', and you will need to add them

This is uncharted territory, so you may need to experiment
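Some of the fixes listed above are plain text substitutions, so they can be sketched in Python. The helper names here are hypothetical, the flag names are taken from this guide as written, and the sketch assumes the printed command uses the --flag=value form. Treat it as a starting point rather than a complete cleaner; it does not touch the block dims setting.

```python
import re


def patch_command(cmd):
    """Apply the text-encoder and betas fixes from the list above to a command string."""
    # Cascade lora training uses one text encoder, so drop the second LR flag.
    cmd = re.sub(r"\s*--learning_rate_te2(=\S+)?", "", cmd)
    # Rename the remaining text-encoder learning rate flag.
    cmd = re.sub(r"--learning_rate_te(?=[=\s]|$)", "--text_encoder_lr", cmd)
    # betas=[0.9,0.99] -> betas=0.9,0.99
    cmd = re.sub(r"betas=\[([^\]]*)\]", r"betas=\1", cmd)
    return cmd


def missing_network_args(cmd):
    """List the network flags that still need to be added by hand."""
    needed = ["--network_module", "--network_alpha", "--network_dim"]
    return [flag for flag in needed if flag not in cmd]
```

The second helper only reports what is missing, since the alpha and dim values are yours to choose.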

Step 6:

- Once you have filled out the 'Parameters' tab, scroll to the bottom and click the 'Print training command' button. In the Kohya_ss cmd.exe window, it will poop out all your settings in a big block that starts with something like 'accelerate launch'. Highlight this giant block, copy it, and paste it into a notepad for you to work on

- Here is a visual example: https://files.catbox.moe/euf78v.png

Step 7:

- Now that you have put the copied parameters into notepad, go through it and:

- Change

./sdxl_train_network.py

to

stable_cascade_train_c_network.py

(you can remove the ./ if using cmd.exe in later steps)

- Change

--pretrained_model_name_or_path="stabilityai/stable-diffusion-xl-base-1.0"

to

--stage_c_checkpoint_path="the location of your reso_proto_epsilon-e5.safetensors"

For example: --stage_c_checkpoint_path="C:\Users\B\Downloads\new_ComfyUI_windows_portable_nvidia_cu121_or_cpu\ComfyUI_windows_portable\ComfyUI\models\checkpoints\reso_proto_epsilon-e5.safetensors"

- After your '--output""' line in the block, add

--effnet_checkpoint_path="the location of your effnet_encoder.safetensors"

For example: --effnet_checkpoint_path="C:\Users\B\Downloads\new_ComfyUI_windows_portable_nvidia_cu121_or_cpu\ComfyUI_windows_portable\ComfyUI\models\vae\cascade\effnet_encoder.safetensors"

- Add

--text_model_checkpoint_path="the location of your reso_proto_delta_e5_te.safetensors"

For example: --text_model_checkpoint_path="C:\Users\B\Downloads\new_ComfyUI_windows_portable_nvidia_cu121_or_cpu\ComfyUI_windows_portable\ComfyUI\models\clip\cascade\reso_proto_delta_e5_te.safetensors"
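The Step 7 edits can also be done with a few lines of Python instead of by hand in notepad. This is a sketch under assumptions: the function name is invented, it expects the model path argument in --flag=value form, and it appends the two new arguments at the end of the command rather than after the output line (argument order should not matter to the script's parser).

```python
import re


def to_cascade_command(cmd, stage_c, effnet, text_model):
    """Rewrite a printed SDXL lora command into a Cascade lora command."""
    # Swap the training script.
    cmd = cmd.replace("sdxl_train_network.py", "stable_cascade_train_c_network.py")
    # Swap the base model argument. A function is used as the replacement so
    # backslashes in Windows paths are inserted literally, not read as escapes.
    cmd = re.sub(
        r'--pretrained_model_name_or_path=("[^"]*"|\S+)',
        lambda m: f'--stage_c_checkpoint_path="{stage_c}"',
        cmd,
    )
    # Add the effnet encoder and text model paths.
    cmd += f' --effnet_checkpoint_path="{effnet}"'
    cmd += f' --text_model_checkpoint_path="{text_model}"'
    return cmd
```

Pass in your own three file locations; print the result and read it over before pasting it into the command prompt.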

Step 8:

You may now close your kohya_ss GUI, if desired. From your start menu, open a fresh command prompt (cmd.exe) or Windows PowerShell window.

(Note - If using Windows command prompt, you do not need ./ in front of your commands, whereas you will want to add ./ when using PowerShell. Both will have the same result. I will be using cmd.exe for the rest of the tutorial, for simplicity's sake)

Step 9:

- In the command prompt window, type

cd the location of your kohya_ss installation

and hit Enter

For example: cd C:\Users\B\Downloads\kohya_ss

- Now, in the command prompt window, type

the location of your kohya_ss installation\venv\Scripts\activate

and hit Enter

For example: C:\Users\B\Downloads\kohya_ss\venv\Scripts\activate

- Copy+paste your big 'accelerate launch' block of text from the notepad you edited earlier (note - you may need to right mouse click to paste instead of CTRL+V), now hit Enter

- Here is a visual example: https://files.catbox.moe/fz96dc.png

[Thanks anon from /hdg/ for the venv activation change suggestion on this step]


If successful, your lora should begin to bake. If it throws an error (such as "tensor x is not equal to tensor y"), you may need to fiddle with the settings used and try removing/changing some of them to see what it's mad about. You can keep copy+pasting your newly edited settings into the same window; you don't need to start from scratch.

If you get an error like 'cannot find stable_cascade_train_c_network.py', double check that:

a) you are on the stable-cascade branch of kohya_ss and that file exists in your kohya_ss folder

b) in command prompt, you are in the main kohya_ss folder and not a subfolder

Step 10:

- Once baking is complete, add your loras to your comfyui > models > lora folder and try them in the CAPGUI or with control prompt by inputting <lora:name of your lora here:1.0> into your prompt, yey

Using Onetrainer

Warning: There have been some reports that Onetrainer is ignoring the 'Prior model', meaning in its current state it may not be able to train loras successfully for the finetune. More experimentation is needed.

Note: I have personally opted to continue my testing using Kohya_ss instead of Onetrainer, as Onetrainer has a 75 token caption limit and seems to have some differences in how results are handled/limited input options. I am uncertain whether it will train accurately on the prototype or not - however, others have trained regular Cascade loras using it with success

Step 1:

- Download and install Onetrainer. Installation instructions are available on the Github page

https://github.com/Nerogar/OneTrainer

Step 2:

- Create a subfolder within the Onetrainer folder called 'Models-Cascade'

- Move a copy of your effnet_encoder.safetensors and a copy of your reso_proto_epsilon-e5.safetensors to this folder

Note: Onetrainer is not capable of parsing filepaths that are outside of its main folder, hence the reason for this

You may also create a 'Models' folder if you would like, which is the default place Onetrainer saves the full epoch loras
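The folder setup in this step can be sketched in Python as well. The helper name is hypothetical and the file locations are whatever your own install uses; it copies rather than moves, so your ComfyUI copies stay where they are.

```python
import shutil
from pathlib import Path


def stage_models(onetrainer_dir, model_files):
    """Copy model files into a 'Models-Cascade' subfolder of the Onetrainer folder."""
    dest = Path(onetrainer_dir) / "Models-Cascade"
    dest.mkdir(exist_ok=True)
    copied = []
    for model_file in model_files:
        # shutil.copy2 returns the path of the new copy inside dest.
        copied.append(Path(shutil.copy2(model_file, dest)))
    return copied
```

You would call it with your Onetrainer folder and a list holding the paths to effnet_encoder.safetensors and reso_proto_epsilon-e5.safetensors.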

Step 3:

- Open Onetrainer using the start-ui.bat file in the Onetrainer folder. It should automatically open up the GUI window

Step 4:

- In the top left hand corner, select '#Stable Cascade' and in the top right hand corner 'Stable Cascade' 'Lora'

- Alternatively, a Civitai user used Onetrainer to successfully train loras for base Cascade. You can use his .json for training params (or a starting point) by adding it to your OneTrainer > training_presets folder

https://civitai.com/articles/4253/stable-cascade-lora-training-with-onetrainer

Step 5:

- Under the 'Model' tab, your settings should look like this -

Model: stabilityai/stable-cascade-prior

Prior Model: your filepath to reso_proto_epsilon-e5.safetensors in the Models-Cascade folder you made within Onetrainer

For example: C:\Users\B\Downloads\OneTrainer\models-cascade\reso_proto_epsilon-e5.safetensors

Effnet Encoder: your filepath to effnet_encoder.safetensors in the Models-Cascade folder you made within Onetrainer

For example: C:\Users\B\Downloads\OneTrainer\models-cascade\effnet_encoder.safetensors

Decoder Model: stabilityai/stable-cascade

Model Output Destination: models/yourloranamehere.safetensors

- Here is a visual example: https://files.catbox.moe/8y48lt.png

Any settings in the 'Model tab' outside of the above gave me an error when attempting to train
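Since Onetrainer reportedly cannot parse filepaths outside its main folder, it may save a failed run to check the two local paths before pressing start. A minimal Python sketch, with an invented helper name, that only inspects the paths and does not talk to Onetrainer:

```python
from pathlib import Path


def check_model_tab(onetrainer_dir, prior_model, effnet_encoder):
    """Return a list of problems with the two local file paths (empty list = OK)."""
    root = Path(onetrainer_dir).resolve()
    problems = []
    for label, value in (("Prior Model", prior_model), ("Effnet Encoder", effnet_encoder)):
        path = Path(value).resolve()
        if root not in path.parents:
            problems.append(f"{label}: not inside the OneTrainer folder")
        elif not path.is_file():
            problems.append(f"{label}: file not found")
    return problems
```

An empty list means both files exist somewhere under the Onetrainer folder; it says nothing about whether the other Model tab fields are correct.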


Step 6:

- Input your dataset/captions folder in the 'Concepts' tab. Double click on it to edit the settings for repeats, variations, etc

- Change training factors in the 'Training' tab as desired (Note the [...] button beside the optimizer for further settings related to it)

- In the backup tab, you can choose to save by Epoch (or whatever other option you desire)*. If you do not select this, then Onetrainer will only give you the final end-result file in the models folder

* If you save by epoch or other option, it will spit these out in the Onetrainer > Workspace > Run > Save folder

Step 7:

- You may edit the 'Lora tab' settings, but leave the 'Lora Base Model' blank

- Try any additional adjustments you want outside of these

Step 8:

- Press 'Start Training' and let it work its magic

Step 9:

- Once baking is complete, add your loras to your comfyui > models > lora folder and try them in the GUI or with control prompt by inputting <lora:name of your lora here:1.0> in your prompt, yey