Cosmia's guide to AI art

This guide does not aim for completeness. They merely describe what I have learned from making my mixed-media project. This is a companion piece to the project files: https://drive.google.com/drive/folders/1llP3YQUT-Dx74H6PQNjbMxk4QziXmf-E?usp=sharing

I suggest that you download the project files, and look for the styles folder. You can then drag an image there to AUTOMATIC1111 webui and see the metadata showing how it was made.

Art neural networks

What neural networks do

A neural network is a machine that intakes in some numbers and outputs some numbers. They can do many tasks, as long as the task is translated into the format of numbers in, numbers out. Furthermore, since a neural network is a machine made of software, with many moving parts, you can rewire the inputs and outputs, to get one neural network to do different things.

To make a neural network, do these steps:

- Describe the shape ("architecture") of the network.

- Provide example input-output pairs ("dataset").

- Train the network (this step takes a long time).

Chinese people like to say that you don't "make" a neural network, you "cook" it (炼丹), like a Taoist alchemist would cook a pill of immortality. This is a good metaphor.

After a neural network is cooked, you can finetune it ("twice-cooked neural network"?) by giving more input-output pairs and cooking it again. Finetuning is useful when you want to take a network that does a task A, and cook it further to do task A' which is a bit different from A. Overcooking it could be bad, as the twice-cooked network would forget what it learned in the first cooking period, but undercooking is bad too, as the network would not learn the new data. Cooking is still mostly alchemy.

Finetuning is much cheaper than the original training. For example, a typical finetuning of Stable Diffusion 1.5 costs about 100 USD, while the training of SD1.5 itself cost about 600,000 USD.

A brief history

An early example of neural network for art was DeepDream of 2014. It was actually a neural network not specifically trained to generate art, but trained to classify images from ImageNet. By plugging the neural network in a different way, a Google engineer created DeepDream, which stylizes images. This shows that a neural network is much more than what it is trained to do.

Later, Generative Adversarial Networks came to dominate during 2014--2018 period. The most successful one was Nvidia's StyleGAN2. This gave us things like This Person Does Not Exist, This Pony Does Not Exist, etc.

GANs are fast. They take one go to generate images, unlike diffusion networks that take many iterations. They were overtaken by diffusion models mostly in 2020, for unclear reasons. The typical story is that they were abandoned because they were hard to train, but Gwern argued that they were simply displaced by diffusion models by bad luck.

While GANs are no longer used for text2img generation, they are still used for many img2img tasks, such as style transfer, upscaling, coloring. Though diffusion models can do all of those, GANs are faster, so if a GAN can work, use a GAN.

Diffusion models

A diffusion network ("base model") is a neural network that is trained to take in a noisy image, plus some "conditioning", and output a less noisy image that is closer to the conditioning. By rewiring such a network, it can be used to perform many tasks. The most common way to use it is to run it repeatedly over a single image, with English text as conditioning, to get a less and less noisy version, until a finished image is got. This is the standard text2img method. However, a single diffusion model can be used to do many tasks in many ways, each way is a "workflow".

For example, if the conditioning uses both text and image, we end up with the img2img method (generate an image that resembles an image, as described in text). ControlNets are basically extra neural networks that can be plugged into a base model to allow more complex conditionings. As a historical note, the earliest diffusion models could not accept any conditioning, and would generate images unconditionally. For example, a diffusion model trained on cars would generate a random car from the space of possible cars, etc. Conditioning was added a bit later.

Given a base model $X$, you can finetune it to a model $X + D$. Now you can just upload the $D$ for the world to see. This $D$ is a LoRA. A LoRA is like an equipment, and must be applied to the particular base model it is made for.

There are currently the following base models:

- Open source

- Stable Diffusion (SD) series: Originally trained as a research project (SD1.1 to SD 1.4), it was then trained by the company Stability AI and released for free. The most notable versions are SD1.5, SDXL, and SD3.

- Pony Diffusion (PD) series: Extensively finetuned from SD series. It is so finetuned that most of the extras (LoRA, plugins, etc) made for SD series would fail for the PD series.

- Many more...

- Closed source:

- Midjourney. Its selling point seems to be that it is very easy to use on mobile, via Discord bots.

- Dalle: made by OpenAI. Dalle 3 is the image generator behind Bing Image Generation and ChatGPT.

- Ideogram. V1 is roughly as powerful as Dalle 3, but with much looser safety controls.

- Many more...

Other notable models

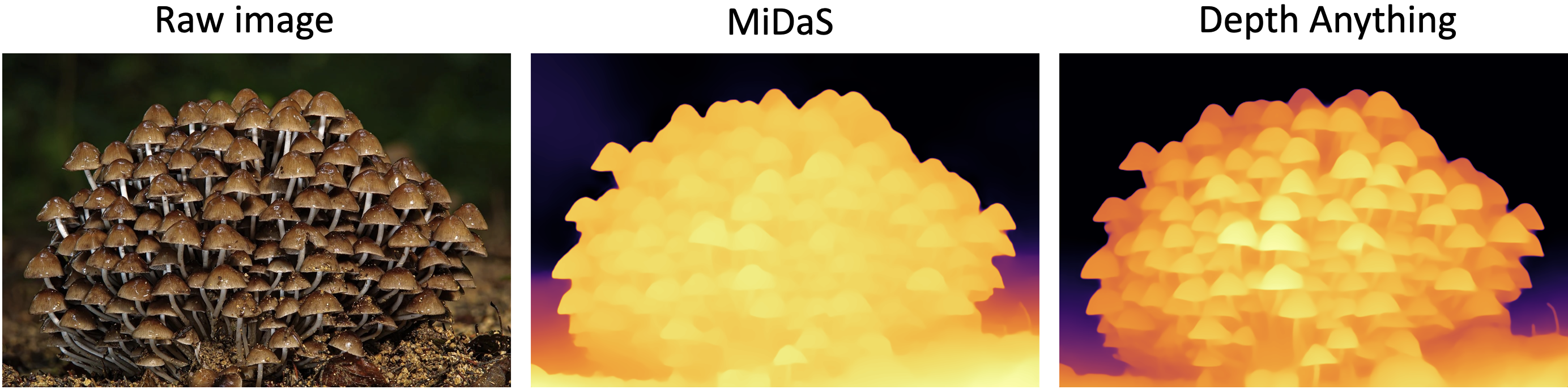

The recent Segment Anything neural network was trained to generate object masks for input images, but it has been finetuned and rewired into doing many other computer vision tasks, such as for estimating depth map from a single image.

Some art softwares are not based on neural networks. There used to be more of those, but most have been obsoleted by neural networks.

EbSynth does style transfer but it uses purely classical computer vision, with no neural networks. As for frame interpolation (tweening), it is a task that still has a place for classical computer vision, though neural networks do very well in it too.

How I made art with Pony Diffusion

I did most of the art with Pony Diffusion V6 XL (PDXL). It is simply the best from the whole series. For some infinite zoom/scroll backgrounds, I used Juggernaut XL - V9+RDPhoto2-Lightning_4S, which has more art styles, and is guaranteed to not randomly put ponies into a scenery (a constant danger when you try to use PDXL for pony-less art).

Controlling styles

Typically, there are the following methods for modifying styles, from most to least powerful:

- Use a different model.

- Use style LoRA

- Describe the style in the prompt; use a reference image in a Reference ControlNet.

- Change the software (e.g. ComfyUI instead of AUTOMATIC1111).

- Change the scheduler (e.g. SDE Karras instead of Euler A).

Unless you are doing something like a MAP [Multi AI Project], you would probably want style consistency, which means that you should stick mostly to a single model in a single software with a single scheduler and a single set of LoRAs. For me, I mostly sticked to Pony Diffusion V6 XL in AUTOMATIC1111 with Euler A scheduler. I only modified the prompt and the weights on the style LoRAs.

See #style-research-group in PurpleSmart.ai Discord server for more information on how to control the style.

By the way, many models have a "default style", but the default style is not to be relied on. For example, if you just ask for "a cat", you would probably get a wide scatter of cat styles vaguely centered around the default style. For style consistency, specify the style like "a cat, watercolor, <lora:Rainbow Style SDXL_LoRA_Pony Diffusion V6 XL:1>, ...".

What works and what doesn't work

It is hard to predict what works and what doesn't work. My advice is to try everything several times, and if it doesn't work, ask around the PurpleSmart.ai Discord server.

Things that just work:

- Most kinds of scenery

- Single character, facing any direction (front view, side view, 3/4 view, facing away from the camera).

- Hands that don't touch other things

- Hands that are holding or touching something with a straight edge, such as a stick, a handle.

- Two characters that are not touching, or only touching a little.

- Controlling most poses with OpenPose ControlNet + Depth ControlNet

- Typical perspectives (parallel, one-point, two-point, three-point, fish-eye, worm's eye view, bird's eye view).

- Infinite zooms with deforum

- Consistent style using Loras, or certain phrases.

Things that are possible but hard:

- Holding hands

- Large contact area between 2 characters (hugging, sleeping together, etc)

- 3 characters touching

- Rare objects, such as a bag of frosting, the handle-bar-thing that you see on the side of a bathtub.

- Contorted poses (such as in yoga)

- Consistent styles across multiple AI models. You are better off sticking to a single diffusion model, preferably with a reference style image.

Things that don't work:

- Wiping tears with the back of the hand.

- Rotoscoping character with deforum.

- Rotoscoping anything whatsoever with PDXL.

- Diverse artistic styles (van Gogh, cubism, pop art, etc) with PDXL. Because PDXL is finetuned a long distance away from SDXL, most of the styles available in SDXL are no longer available in PDXL.

- AnimatedDiff with PDXL.

- Train tracks that go left-to-right. Every AI artist I have tried insists on making a one-point perspective.

- Asking Dalle 3 (in ChatGPT) to explain what it can and cannot do. It confabulates a lot, probably because it is required to not leak the internal system prompt.

Censorships:

- Dalle 3 always refused to draw teeth-brushing for being "unsafe". Ideogram could do it, but it doesn't draw Pinkamena well. PDXL tried, and failed to generate any toothbrushing. So I painted it myself.

- Dalle 3 refused to draw phone UI or ponies, when accessed through ChatGPT, but it drew it without problems when accessed through Bing Image Creator.

- Dalle 3 refused to do img2img, style transfer, etc. It can still use images as rough inspirations.

- Dalle 3 and Ideogram have no negative prompting. Asking it to "don't do X" would end up putting "not X" into the positive prompt, and that would really suck because it has about the same effect as saying to someone "don't think of a white bear". The X ends up appearing prominently in the final image.

Image workflow

If I have a mental image of the picture I want, I would have to cook up the very first draft by blocking in the colors and then do img2img. I cringe when I look at the first draft, but surprisingly it does work! Here is a complete example:

First, I did a quick drawing by hand:

I imported it into img2img and in order to avoid mixing Twilight and Pinkamena's clothes, I used inpainting to get Twilight, then get Pinkamena, then the middle-edge of these two halves. After that, I generated a few variants with img2img:

I generated about 10 of them, picked my favorites, and photobashed with the generated pictures, as well as adding in a previously generated background.

Then I repeated the impaint-Krita cycle, fixing this and that, until it's done. Typically this takes about 5--10 cycles. Then I removed the background, combined it with a previously generated background painting, then relit the foreground in Krita.

The final result is:

A few more before-and-afters:

Sometimes I need to "titrate" the weights in the prompt. This can be done easily with X/Y/Z plot with "Prompt S/R". Here is an example that modifies the weights on (smiling:0.0) and (annoyed:0.0).

ControlNet

Very few ControlNets work with PDXL. I can confirm that these do work:

Referencedepth_anythingwithcontrollllite_v01032064e_sdxl_depth_500-1000 [b35a4fd8]dw_openpose_fullwithcontrol-lora-openposeXL2-rank256 [72a4faf9]controllllite_v01032064e_sdxl_canny_anime [8eef53e1]controllllite_v01032064e_sdxl_canny [3fe2dbce]

Typically, I use these settings:

- control weight

1.2 -- 1.3; ControlNet is more important;- Default settings otherwise (starting control step

= 0, ending control step= 1, preprocessor resolution= 512).

Multiple ControlNets work. I can recommend using both OpenPose ControlNets and Depth ControlNets, if you want to perfectly enforce pose. Even then, some poses are impossible to do with PDXL V6, and must await the next version.

For example, here is an example of using Depth ControlNet to create an image with the same pose and setting. For some poses, using the Depth ControlNet is not enough, in which cane combining it with OpenPose ControlNet works better.

Rotoscoping

I have tried to do rotoscoping in several ways, mostly failed. The tools available for base SDXL are just not available for PDXL.

Deforum almost completely fails at rotoscoping. I ended up doing it half-manually with a lot of editing and frame-by-frame animation.

If you want to study deeply how it works, look here: FizzleDorf's Animation Guide. However, if I'm being honest, I think you should not look too deeply into this, as these are skills likely to be obsolete in a year.

Scribble effect

This is like an AI-powered fade-in. It is easy to do. First, make a base-image, then put it into img2img, and mess with the "denoising strength" and "sampling steps". I suggest about 10 -- 20 sampling steps, and 0.1 -- 0.8 denoising strength.

Another idea is to take a base image and do img2img inpainting on all of the image, except a region:

Deforum

Deforum is useful for infinite zooms, infinite scrolls, and a very small amount of animation.

The simplest is infinite scroll, where you just let it move in the X- or Y-direction every frame.

Also easy is infinite zoom. I suggest that, to keep the zoom visually consistent, use a reference image as a depth-map with ControlNet. For example, I used this as reference:

Which ends up with this video: https://i.imgur.com/kSJhNj9.mp4.

The hardest to do is character animation. Deforum is pretty close to useless for this. I ended up with mostly frame-by-frame animation. First I would create a single keyframe, then break it into components, then create more keyframes by moving the components around. Then I would use impainting to smooth over the rough edges, and do a bit of editing-impainting cycle to get it done. It is basically frame-by-frame animation. Very manual and very time-consuming.

Post-processing

Sometimes I need to remove the background, or upscale them. There are plugins inside AUTOMATIC1111 for this.

For upscaling, I found Real-ESRGAN good enough. The slight smoothing artifacts don't bother me, as they fit the aesthetic. If the artifacts bother you, try the other upscaler neural networks.

For removing backgrounds, I used danielgatis/rembg. I have found that the networks are pretty similar in performance, with silueta and isnet-general-use being the best. You need to fiddle with the settings for best effect. Run rembg s in the command line after installation, then open the URL that appears in the command line interface.

Sometimes the rembg fails to do its job, so I do it manually in Krita.

I used struffel/simple-deflicker for deflickering timelapse image sequences. I also used darktable's color mapping to fix color inconsistencies (Samsung camera is so smart as to adjust the damn color profile in the middle of a photoshooting session).