LLaMa 4bit w/ Kobold

Important update

Everything below is now essentially outdated as Kobold should support LLaMa out of the box with the git version of transformers.

Acquire models on your own.

Note

According to the Kobold Discord, there are at least three factors I'm currently aware of that can hamper results.

- The 4bit models distributed via a torrent magnet have not had their SHA256 hashes reproduced and are apparently producing worse results. Quantize them yourself or wait for somebody else to share them (a 4bit 30B model does not seem to be publicly available as of this writing). Quantizing yourself may actually be required given the conflicting hashes and output quality, but this is currently speculation.

- Kobold also apparently does not have the new samplers for LLaMa implemented. Results should be better when these samplers are implemented (if they aren't already?). You may also want to play around with sampler settings a bit, per https://twitter.com/theshawwn/status/1632569215348531201

- The current huggingface transformers implementation for LLaMa handles the BOS token incorrectly with Kobold. This causes prompts to be cut off and also greatly reduces output quality. There is a series of posts from Henk explaining this (https://boards.4channel.org/vg/thread/421635798#p421725571). It is expected to be fixed eventually; you can follow the PR here for updates: https://github.com/huggingface/transformers/pull/21955
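Regarding the first point, if you do end up comparing hashes with other people, here is a minimal sketch of computing a file's SHA256 in Python. The function names and the chunk size are my own choices for illustration, and you need to get the reference hash from a source you trust.

```python
import hashlib


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the hex SHA256 digest of a file, reading it in chunks.

    Reading in chunks avoids loading a multi-gigabyte model file into RAM.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # iter() with a sentinel stops once read() returns empty bytes (EOF)
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_expected(path, expected_hex):
    """Compare a file's digest against a published hash, ignoring case and whitespace."""
    return sha256_of_file(path) == expected_hex.strip().lower()
```

Call matches_expected with the path to your weights file and the published hash; a False result means your file differs from the one that hash was taken from.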

The three factors above don't even account for the fact that there is currently no finetune, and many more optimizations can be made (some of which are now starting to crop up).

tl;dr: If you don't know what you're doing, it's better to wait until those who know better can properly share and update everything in due time.

This was my very janky setup to get 4bit LLaMa models loaded into Kobold. Special thanks to the anon who helped solve the final step of this.

I've never used Kobold before, and installed Kobold from scratch. I could not get anything working with Anaconda so I opted to just use my normal Python install with a venv. If you know what you're doing you can adapt this guide as necessary.

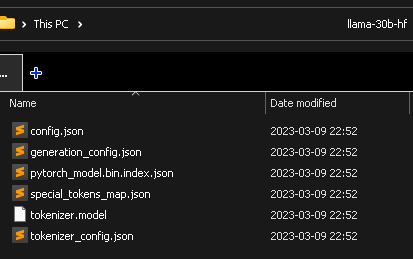

You will need Python, the CUDA Toolkit, VS Build Tools (only 2017 or 2019 work), git, the 4bit LLaMa weights (or quantize them yourself), and model configs (these can be acquired from huggingface, from a torrent, or by converting the model yourself). Note that as of 2023-03-13, the tokenizer has changed, making all previously distributed configs obsolete. You will likely need to build them yourself with the script provided in the huggingface PR/repo.
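As a quick sanity check before going further, here is a rough sketch that reports which command-line tools are missing from your PATH. The command names "git" and "nvcc" (the compiler shipped with the CUDA Toolkit) are my assumptions about what to look for. It does not check VS Build Tools, since those are usually not on PATH outside a developer prompt.

```python
import shutil
import sys


def find_missing_tools(tools):
    """Return the command names from `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    print("Python version:", sys.version.split()[0])
    # Assumed command names: git, and nvcc from the CUDA Toolkit
    missing = find_missing_tools(["git", "nvcc"])
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("git and nvcc were both found on PATH.")
```

A tool showing up as missing may still be installed but simply not added to PATH, so treat the output as a hint rather than a verdict.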

At this point you will need to make one small modification to the GPTQ repo.

Open gptq.py and change from quant import * to from .quant import * (add the missing period).
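If you'd rather not edit the file by hand, the same change can be scripted. This is only a sketch: the function name is made up, and it assumes the import sits on a line of its own exactly as written above.

```python
from pathlib import Path

OLD_IMPORT = "from quant import *"
NEW_IMPORT = "from .quant import *"


def patch_relative_import(path):
    """Turn the absolute quant import into a relative one.

    Returns True if the file was modified, False if nothing needed changing.
    """
    lines = Path(path).read_text(encoding="utf-8").splitlines(keepends=True)
    changed = False
    for index, line in enumerate(lines):
        # Match the whole line so an already patched file is left alone
        if line.strip() == OLD_IMPORT:
            lines[index] = line.replace(OLD_IMPORT, NEW_IMPORT)
            changed = True
    if changed:
        Path(path).write_text("".join(lines), encoding="utf-8")
    return changed
```

Running it twice is harmless: the second call finds nothing to change and returns False.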

If there's a simpler way to do this without modifying the repo let me know and I'll make the necessary changes to my fork and/or guide.

Drop the LLaMa configs into the models folder in their own subfolder, like so:

In the web interface, you can then load the model by picking the folder you created in the models folder, via AI > Load model from its directory > the directory
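If your folder does not show up or fails to load, it may be worth checking the layout first. The sketch below lists the subfolders of a models directory that contain a config.json. That filename is an assumption based on the usual huggingface layout, and the function name is made up for this example.

```python
from pathlib import Path


def find_model_folders(models_dir):
    """Return the names of subfolders of models_dir that contain a config.json."""
    root = Path(models_dir)
    return sorted(
        child.name
        for child in root.iterdir()
        # config.json is assumed to be the config filename (huggingface convention)
        if child.is_dir() and (child / "config.json").is_file()
    )
```

Pass it the path to your Kobold models folder. If the subfolder you created is not in the returned list, the configs are probably in the wrong place or nested one level too deep.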

The initial model load may take several minutes, especially if you're using the 30B or 65B variant.