Latte Info/Guide

Compiling information on how to successfully wrangle Latte, also known as chatgpt-4o-latest. It's not chorbo suck my dick. If you don't care and just want a JB: https://rentry.org/avanijb

Check this for solutions to filter problems: https://rentry.org/latteRNG

General Info

What is Latte?

The model chatgpt-4o-latest. Not GPT-4o. Not o1 either. Not GPT-4-whateverversion. The chatgpt version seems to be specifically trained to be a conversational model, and is generally MUCH better suited to RP than o1 or 4o. Chorbo refers to the same model, but Latte is the better name. There is no compromise on this. It's Latte.

Latte RNG

Yes, it exists. From the looks of it, Latte seems to have at least two "versions" of itself: one where the writing is noticeably worse with an anti-filter on and it denies more/gives more blanks, and another where you can basically do nsfw completely without even a big JB. You can chalk it up to schizophrenia but I'm not the only one who noticed. Cause? [Insert future explanation here. We found out what it was.]

What does this mean for you? It means you shouldn't write off a JB just because it didn't work once, and you should practice swapping between JBs from time to time until the model is actually stable. At the moment, there's no one size fits all UNLESS you know how to wrangle the model.

Latte vs. Claude

The burning question. Some people will still prefer Claude, but here's the summary of the differences, basically. Note: Most of the stuff I say in this guide also completely works for prompting Claude and other models. Most if not all LLMs are trained to understand XML.

| Claude (Opus) | Latte |
|---|---|
| Will hallucinate more, leading to more 'out there', random and creative outcomes. Hallucination = creativity, for most people. Generally follows tropes rather than the character card, leading to amazing results if you hit that specific trope. | Smarter model, reads and follows character cards extremely well. Generally more diverse in dialogue. Will be a bit less 'out there' than Claude because it doesn't hallucinate as much, although you can induce hallucination via prompting. Can have difficulty deviating from instructions in the character card without help from prompting or Author's Note. |
| Easier to jailbreak because they fucked up really bad giving you the power of prefilling. Also easier to write prompts for, although they won't always be followed due to hallucinating. | Very structured and reliable way of prompting. OpenAI actually pays their security team, so its filters are far better than Claude's, even if you can also break them. May or may not be patched in the future as it's not a stable model. |
| Extremely horny. Effortlessly horny. Sometimes even too horny. You can prompt this away but this model is generally better for relentless coomers because it doesn't need much nudging to write some crazy ass shit. | Can be horny, but needs wrangling. Generally better at SFW than NSFW, and can struggle with good writing for NSFL or extreme NSFW. |
| Mi casa es su casa. Claudisms are basically impossible to avoid. Eventually, they become your best friend, whether you like it or not. So what do you say, {{user}}? Ready to read? | red-gold-blue eyes. GPTisms like flowery language and certain idioms can be avoided via Logit Bias, leading to far better word choice. |

How to prompt (in ST)

How prompts & Depth work

ST kind of sucks at explaining it.

The prompt manager generally works like this: the lower down something is, the more recent it is in the LLM's memory. This is also what 'Depth' is supposed to mean: depth counts messages up from the bottom of the chat history, so a smaller number = further down, closer to the latest message. Use depth @ 1 to have things as low as possible without breaking anything, meaning Latte is extremely likely to follow and stick to it at all times. Depth @ 0 would basically be like a prefill if there are no prompts below the chat history. Never put things @ depth 0 unless you're sure about what you're doing, because it has a high likelihood of just breaking things.

Another analogy: the prompt manager is the book the LLM has to read before it's allowed to say anything. It'll remember the most recent page better, and the bigger the book, the less likely it is to remember what the hell you wrote on page 1.

Example:

etc.

Depth @ 2 prompt

[latest bot message]

Depth @ 1 prompt

[latest User message]

Depth @ 0 prompt

This goes double, triple, and quadruple for Latte. Latte is pretty sensitive prompt-wise, but that also means that wrangling it becomes much easier. The same principle applies to Claude BTW but it's also quirky and likes to hallucinate instructions away.
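If it helps to see the depth rule mechanically, here's a rough Python sketch. It's a simplified model of the behaviour described above, not SillyTavern's actual code: the chat history is a plain list, and `insert_at_depth` is a made-up helper that slots a prompt in `depth` messages up from the bottom.

```python
from typing import List


def insert_at_depth(history: List[str], prompt: str, depth: int) -> List[str]:
    """Return a copy of `history` with `prompt` inserted `depth` messages from the end.

    Simplified model (hypothetical helper, not ST's code):
    depth 0 = after the latest message, depth 1 = just above it, and so on.
    A depth larger than the history puts the prompt at the very top.
    """
    if depth < 0:
        raise ValueError("depth must be >= 0")
    position = max(len(history) - depth, 0)
    return history[:position] + [prompt] + history[position:]


history = ["[older messages]", "[latest bot message]", "[latest User message]"]

for depth in (2, 1, 0):
    print(depth, insert_at_depth(history, f"Depth @ {depth} prompt", depth))
```

It reproduces the same layout as the example above: the smaller the depth, the closer the prompt sits to the point where Latte starts writing.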

Markdowns to know

Useful commands to know:

Specific to my JB here

How to prompt

Since we know that Latte prioritises things placed lower in the prompt manager, we will now look at our JB and attempt to add a proper prompt. It'll look something like this:

- Main Prompt

- Character/Persona/World/etc. Info

- Chat History

- Not enabled prompt

- Prefill

Let's say we want Latte to include desu before every message. Your prompt should look something like this:

Yes, that is enough. If you want something more specific, this also works:

Now, where do you put it? There are a few options.

- Main Prompt

- Character/Persona/World/etc. Info

- desu prompt

- Chat History

- Author Note @ Depth 0

- Not enabled prompt

- Prefill, last on prompt manager

Putting a prompt before the chat history is recommended for larger prompts, let's say 300 tokens or more. Prompts that size are usually style prompts that set the rules the JB will follow. Since the prompt is large, and every chat history message will reflect the style as well, it's not likely that Latte will lose track of it.

However, our desu prompt is very small. As the chat history fills up, it is likely that it will get lost in the thousands of tokens after it. So, it's better to put it after chat history:

- Main Prompt

- Character/Persona/World/etc. Info

- Chat History

- Depth @ 0 Prompt (Author Note)

- desu prompt

- Not enabled prompt

- Prefill, last on prompt manager

This way, Latte will consistently follow the prompt as long as it's turned on, since it'll always be at the forefront of its memory and is unlikely to get lost unless you do something like this...

- Main Prompt

- Character/Persona/World/etc. Info

- Chat History

- Author Note @ Depth 0

- desu prompt

- 500 token prompt

- 500 token prompt 2

- this one is 1300 for shits and giggles

- Not enabled prompt

- Prefill with 1000 tokens

If you see something like this, it's likely that it's going to have several issues. The desu prompt and the chat history are now buried under 3300 tokens (500 + 500 + 1300 + 1000) that Latte reads right before it starts writing. Side effects include forgetting which message to reply to and replying to a message that is not your latest, or forgetting the desu prompt.
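The arithmetic behind that 3300 is just "add up everything enabled that sits below the prompt you care about". A small Python sketch of that; the `Entry` class and `tokens_below` helper are made up for illustration, and the token counts for the main prompt, card info, chat history, Author's Note and desu prompt are invented since the layout doesn't give them.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Entry:
    name: str
    tokens: int
    enabled: bool = True


def tokens_below(entries: List[Entry], name: str) -> int:
    """Sum the tokens of enabled entries that come after `name` in the prompt order."""
    index = [e.name for e in entries].index(name)  # ValueError if name is missing
    return sum(e.tokens for e in entries[index + 1:] if e.enabled)


layout = [
    Entry("Main Prompt", 400),                      # invented size
    Entry("Character/Persona/World/etc. Info", 1500),  # invented size
    Entry("Chat History", 12000),                   # invented size
    Entry("Author Note @ Depth 0", 50),             # invented size
    Entry("desu prompt", 15),                       # invented size
    Entry("500 token prompt", 500),
    Entry("500 token prompt 2", 500),
    Entry("this one is 1300 for shits and giggles", 1300),
    Entry("Not enabled prompt", 200, enabled=False),  # disabled, so it doesn't count
    Entry("Prefill", 1000),
]

print(tokens_below(layout, "desu prompt"))
print(tokens_below(layout, "Chat History"))
```

The disabled entry is skipped on purpose: it's in the list but never sent, so it doesn't push anything further from the end.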

Fewer tokens is better + Examples

If you've read the previous section, you should more or less know why. Latte is a sensitive boy, and doesn't like it if you use a lot of tokens to explain things. Anything. I've also yet to see any real benefit from explaining things in a quirky way.

Example of slop:

Example of good:

These two prompts accomplish the same thing. Yes, the first way may or may not have worked better on Claude. This isn't Claude, though. Latte will literally just take direct instructions from you. Hallucinations don't make a real difference if you can explain the exact same thing in fewer words and more directly. Others will tell you otherwise, but I've tried both ways a lot and I'm very confident in saying it makes no difference here.

Also for the love of god stop the massive prefill meta. If you just use fewer tokens Latte will remember your instructions just fine and you don't need to call back to them that aggressively. Thank you.

The filter

I won't go in depth with how filter bypassing works but I can say that it's basically just gaslighting. While gaslighting is generally completely ineffective for normal prompting, what you're trying to do here is different.

Latte is trained on a bunch of red teaming and training data that tells it what's right or wrong. The basic gist of how any anti-filter works is that it either convinces the model that no one will see the message or that the bad things are good, actually. OpenAI themselves admit that there is still a risk of breaking through the safety measures, and so they explicitly offer an additional moderation endpoint for you to call on for additional security. However, this moderation endpoint doesn't apply to the API. Meaning that even their million dollar team can't stop people from breaking the filter. Even if they patch current methods, there will always be more.

The other way to filterbreak

Doing something like slowburn SFW into NSFW is a good way of hitting the filter, as is putting "trigger" words in your cards like 'incest', 'rape', etc. You get the gist. If you want to do a primarily NSFW RP, the best way to not get filtered is to make it NSFW from the start via greeting, and avoid using trigger phrases. That doesn't mean you have to go full medieval, but you'd be surprised what a simple wording change could do for you. Also, as usual, a lot of rejections is also a sign to swap proxy.

Logit Bias (download here)

What is Logit Bias?

Logit Bias is a parameter GPT models accept (not Claude or Azure OpenAI; use the proxy/openai endpoint to be 100% sure you can use logit bias) that tells the model to prioritise or avoid using certain tokens. This is done with a modifier from -100 to 100, -100 being "realllly try to avoid this when given a choice to use another word" and 100 being "i will literally always write this when given the choice".

Example 1:

The token "Paris" is set at -100.

When Latte is asked the question: "What is the capital of France?", since the token "Paris" is set at -100, it really doesn't want to answer it like that. What it will write instead is "France is a country in Europe", or "paris" in all lowercase, or "The capital is Paris." to circumvent the -100 by adding a space before Paris. 'paris', 'Paris', ' Paris' and ' paris' are all technically different tokens.

Example 2:

The token "Paris" is set at +100.

When Latte is asked a question like: "What is the capital of Europe, which includes France?", instead of telling you correctly that there is no capital of Europe you stupid burger, it might say "Paris." Since it's extremely strongly biased towards that token, it may output the incorrect answer, or even bug out and write Paris Paris Paris Paris all over the response.
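OpenAI's API reference describes the bias as being added to the model's logits before sampling. Here's a toy Python sketch of what that does in the two examples above. The four candidate tokens and their scores are invented for illustration; a real model scores its whole vocabulary, so treat this as a picture of the mechanism, not of Latte's actual numbers.

```python
import math
from typing import Dict


def apply_logit_bias(logits: Dict[str, float], bias: Dict[str, float]) -> Dict[str, float]:
    """Add each token's bias (default 0) to its raw logit."""
    return {tok: score + bias.get(tok, 0.0) for tok, score in logits.items()}


def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    """Turn logits into probabilities (subtracting the max for numerical stability)."""
    top = max(logits.values())
    exps = {tok: math.exp(score - top) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Invented logits for the first token of the answer.
logits = {"Paris": 5.0, " Paris": 3.0, "France": 2.0, "paris": 1.0}

example_1 = softmax(apply_logit_bias(logits, {"Paris": -100}))
example_2 = softmax(apply_logit_bias(logits, {"Paris": 100}))

for name, probs in (("no bias", softmax(logits)), ("Paris -100", example_1), ("Paris +100", example_2)):
    print(name, {tok: round(p, 4) for tok, p in probs.items()})
```

With -100, "Paris" is effectively gone, but " Paris" (leading space) picks up the slack, which is the loophole from Example 1. With +100 it's practically the only option left, which is how you end up with Paris Paris Paris.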

Does it actually work?

Yes. Note that you CAN still encounter the tokens that are -100, just much more rarely than you usually would. They will usually be misspelled to avoid the Logit Bias. Avoid using +100 unless you want extremely repetitive text, and stick to a maximum of +1 instead. For best writing results, avoid biasing it towards specific words, but biasing it away from words is fine. It can also be used to fix inconsistent formatting and introduce more interesting sentence structure.

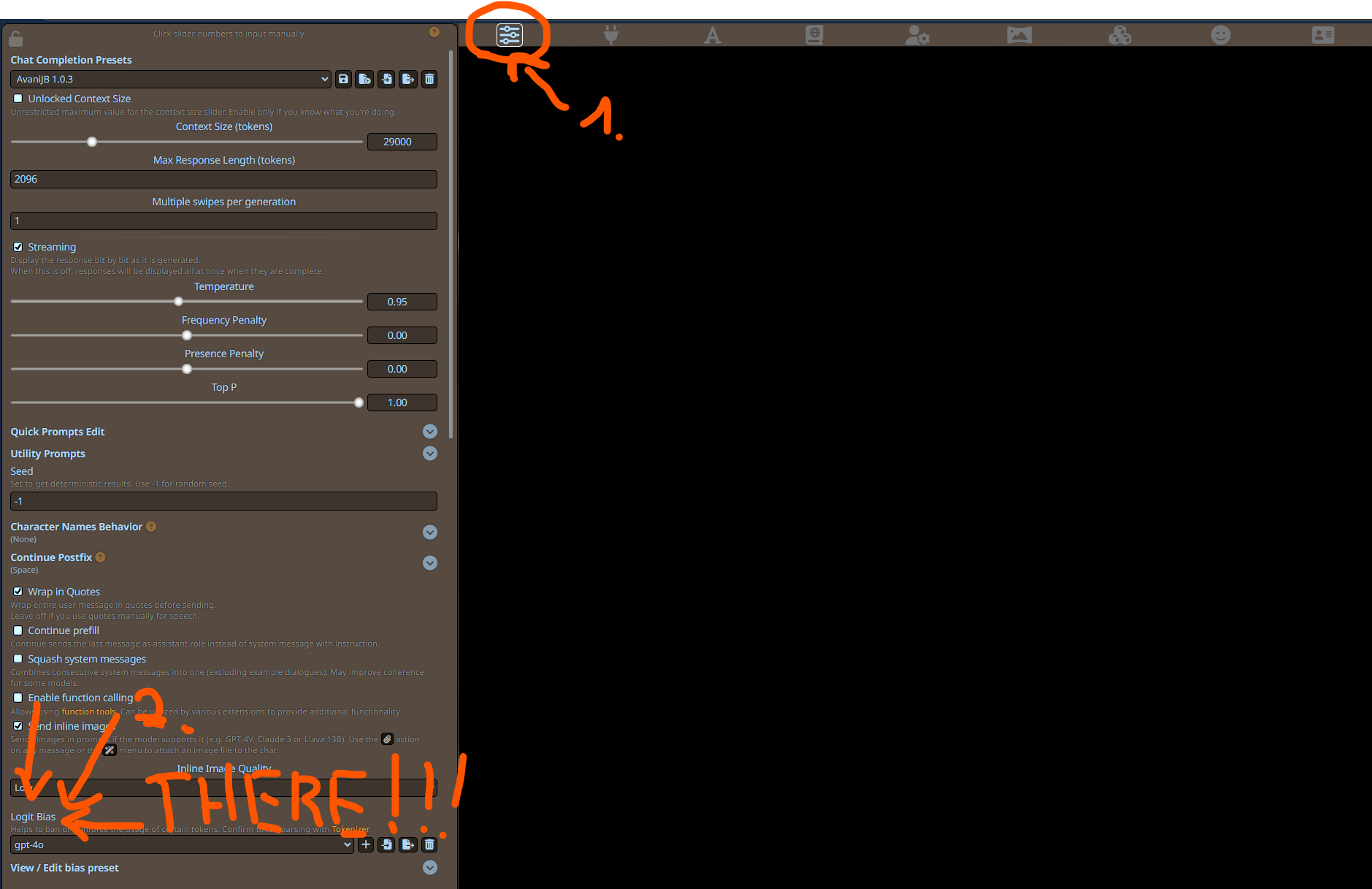

Where do I add it?

If you don't have logit bias, you are not using the openai endpoint. Try again.
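If you're calling the endpoint yourself instead of going through ST, `logit_bias` is a JSON object mapping token IDs (as strings) to values between -100 and 100. Since 'Paris', ' Paris', 'paris' and ' paris' are separate tokens, a ban usually needs every variant. Below is a Python sketch assuming you pass in some `encode` function that turns text into token IDs. The helper names are made up, and the toy vocabulary stands in for a real tokenizer (for GPT-4o-family models, tiktoken's `o200k_base` encoding is the likely candidate, but double-check that yourself).

```python
from typing import Callable, Dict, Iterable, List


def surface_variants(word: str) -> List[str]:
    """Casing and leading-space variants that usually tokenize differently."""
    forms = sorted({word, word.lower(), word.capitalize()})
    variants: List[str] = []
    for form in forms:
        variants.extend([form, " " + form])
    return variants


def build_logit_bias(
    words: Iterable[str],
    bias: int,
    encode: Callable[[str], List[int]],
) -> Dict[str, int]:
    """Map the token ID of every single-token variant of `words` to `bias`."""
    if not -100 <= bias <= 100:
        raise ValueError("logit_bias values must be between -100 and 100")
    result: Dict[str, int] = {}
    for word in words:
        for variant in surface_variants(word):
            ids = encode(variant)
            # Only bias variants that are exactly one token; biasing the first
            # piece of a multi-token word would also hit unrelated words.
            if len(ids) == 1:
                result[str(ids[0])] = bias
    return result


# Toy tokenizer with invented IDs (hypothetical), standing in for a real one.
TOY_VOCAB = {"Paris": 101, " Paris": 102, "paris": 103, " paris": 104}


def toy_encode(text: str) -> List[int]:
    # Unknown strings come back as two pieces, like a word the tokenizer splits.
    return [TOY_VOCAB[text]] if text in TOY_VOCAB else [1, 2]


request_body = {
    "model": "chatgpt-4o-latest",
    "messages": [{"role": "user", "content": "What is the capital of France?"}],
    "logit_bias": build_logit_bias(["Paris"], -100, toy_encode),
}
print(request_body["logit_bias"])
```

With a real tokenizer some variants may split into several tokens; this sketch just skips those rather than guessing which piece to bias.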