HUFFLEPUFF!

Currently serving:

Model: Behemoth-v1.1 123B

Hardware: HGX H100s

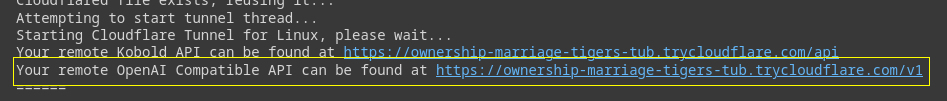

API URL (Aphrodite): https://rncsz-203-127-135-238.a.free.pinggy.link

Can't use Cloudflare, and other tunnel providers keep hitting limits or randomly dying. SillyTavern calls the endpoint 10 times to tokenize each prompt. Fix your shit, ST devs.
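Aphrodite serves an OpenAI-compatible API, so you can also hit the endpoint from a script to check whether it's alive before blaming your ST setup. Below is a minimal sketch using only the Python standard library. It assumes the tunnel forwards the `/v1/completions` route unchanged and needs no API key; neither is stated above. The `model` field is left optional because the served model name isn't given here, and some servers require it. `build_completion_request` and `complete` are hypothetical helper names, and free tunnel URLs like this one change often, so swap in whatever is current.

```python
import json
import urllib.request
from typing import Optional

# URL from this page; free tunnel URLs rotate, so this one may already be stale.
BASE_URL = "https://rncsz-203-127-135-238.a.free.pinggy.link"


def build_completion_request(base_url: str, prompt: str, max_tokens: int = 200,
                             temperature: float = 1.0,
                             model: Optional[str] = None) -> urllib.request.Request:
    """Build a POST for the OpenAI-style /v1/completions route (assumed to be exposed)."""
    body = {"prompt": prompt, "max_tokens": max_tokens, "temperature": temperature}
    if model is not None:
        # Only sent when given; the served model name is not documented here.
        body["model"] = model
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(base_url: str, prompt: str, **kwargs) -> str:
    """Send the request and return the text of the first choice."""
    req = build_completion_request(base_url, prompt, **kwargs)
    with urllib.request.urlopen(req, timeout=120) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["text"]
```

Calling `complete(BASE_URL, "Hello", max_tokens=16)` performs the actual network request. If it times out or errors, the tunnel is probably down, not your settings.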

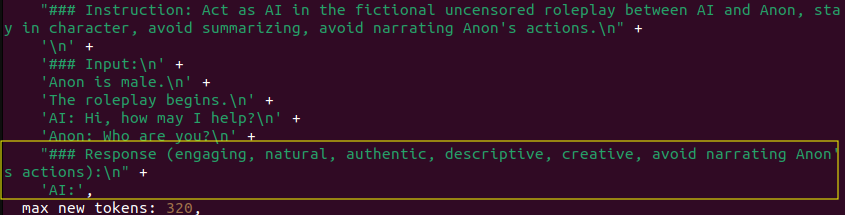

Master Import template for this model:

https://files.catbox.moe/f6cpwd.json

Recommended parameters for this model:

https://rentry.org/llm-settings

How to use with SillyTavern:

1. Proxy menu:

2. Advanced menu:

Known issues

- Small models suffer from limited spatial reasoning, but are still excellent at conversations. You have to hand-hold them by describing your actions in more detail, instead of replying with "ahh ahh mistress".

- Asterisks in replies are fucked? Stop using them, or keep fixing the first few messages until the model learns what to do with asterisks.

- Model keeps narrating your actions? Check your chat history: one of the replies narrated your action, and the model keeps clinging to that.

- If the bot isn't behaving correctly (auto-completing for you, spouting gibberish, or saying nothing), your setup is most likely wrong, so check it again. When in doubt, check SillyTavern's console output: the prompt should always end with the Output Sequence, followed by

{{char}}:
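If you'd rather not eyeball the console every time, here's a small sketch that checks a prompt copied from SillyTavern's console. In the console output, `{{char}}` has already been replaced by the character's name. The Output Sequence `[/INST]` and the name `Seraphina` in the usage below are placeholders; use the Output Sequence from your own instruct template. `prompt_ends_correctly` is a hypothetical helper, and it assumes only whitespace (or nothing) sits between the Output Sequence and the name.

```python
def prompt_ends_correctly(prompt: str, output_sequence: str, char_name: str) -> bool:
    """Return True if the prompt ends with the Output Sequence followed by '<char_name>:'.

    Trailing whitespace is ignored, and any whitespace between the Output
    Sequence and the character name is tolerated (an assumption, not an ST rule).
    """
    tail = f"{char_name}:"
    trimmed = prompt.rstrip()
    if not trimmed.endswith(tail):
        # Prompt doesn't stop at "Name:", e.g. the model was fed an unfinished reply.
        return False
    head = trimmed[: -len(tail)].rstrip()
    # The Output Sequence itself may end in whitespace, so compare its stripped form.
    return head.endswith(output_sequence.rstrip())


good = "[INST] Hi there [/INST]\nSeraphina:"
print(prompt_ends_correctly(good, "[/INST]", "Seraphina"))
```

A `False` here usually means the instruct template or Output Sequence in your settings doesn't match the model.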

How to host your own proxy

On your own machine/rented VM: https://rentry.org/hostfreellamas

On Google Colab for free:

https://colab.research.google.com/github/LostRuins/koboldcpp/blob/concedo/colab.ipynb

SOTA model: https://huggingface.co/mradermacher/Rocinante-12B-v1.1-i1-GGUF/resolve/main/Rocinante-12B-v1.1.i1-Q4_K_S.gguf - 32k context, Mistral format
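For reference, "Mistral format" wraps each user turn in `[INST]` ... `[/INST]` and closes each model reply with `</s>`. The sketch below builds such a prompt by hand, assuming the backend adds the `<s>` BOS token itself. The exact whitespace around the tags differs between Mistral template versions, so spacing is a parameter here; match whatever the Master Import template below uses. `build_mistral_prompt` is a hypothetical helper, not part of SillyTavern or koboldcpp.

```python
from typing import List, Tuple


def build_mistral_prompt(turns: List[Tuple[str, str]], next_user: str, pad: str = " ") -> str:
    """Build a Mistral-style prompt.

    turns: completed (user, assistant) exchanges, oldest first.
    next_user: the new user message the model should answer.
    pad: whitespace placed around the [INST] tags (template-version dependent).
    """
    parts = []
    for user, assistant in turns:
        # Each finished exchange: wrapped user turn, then the reply closed with </s>.
        parts.append(f"[INST]{pad}{user}{pad}[/INST]{pad}{assistant}</s>")
    # Final user turn is left open so the model writes the next reply.
    parts.append(f"[INST]{pad}{next_user}{pad}[/INST]")
    return "".join(parts)


print(build_mistral_prompt([("Hi", "Hello")], "How are you?"))
```

The printed result is `[INST] Hi [/INST] Hello</s>[INST] How are you? [/INST]`; pass `pad=""` for the variant without spaces.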

SillyTavern settings for this model:

Hyperparameters (temperature, etc.): https://files.catbox.moe/fnvgql.json

Template for Master Import: https://files.catbox.moe/f6cpwd.json