Storywriting with Mikupad: A Guide for New and SillyTavern Users - By NG

The following is an overview of using Mikupad. It's written for new users as well as those who are already familiar with SillyTavern or other roleplay frontends. Many of the concepts carry over directly from SillyTavern, but there are a few nuances, as each frontend serves a slightly different use case.

TLDR: Go here and experiment: MIKUPAD!

NOV 2025

Mikupad vs. SillyTavern (ST)

If you're already familiar with SillyTavern, it's important to understand, before diving into setup, that Mikupad and SillyTavern are built for fundamentally different purposes:

- Mikupad is a story-writing interface: It’s designed for continuous narrative generation, long-form writing, and direct prompt control.

- SillyTavern is a roleplaying engine: It’s built around round-based interaction, character personas, and RP-style back-and-forth conversations.

Most of their differences flow naturally from this core distinction.

| Mikupad | SillyTavern |
|---|---|
| Clean, minimal interface focused on Sessions aka stories | Character cards with substantial Character ecosystem (chub.ai, etc.) |
| Direct control over the full prompt. Simple context tools: Memory, Author’s Note, World Info | Round-based roleplay dialogue with multiple control options. Personas, group chats, and extensive RP-focused extensions and customization |
| Limited backend selections, focused on local inference | Numerous backend selections, continuously updated and automated |
| Maintaining story flow with undo/redo and Token Probability visualization | Continue, Re-Roll, Response Editing functions |
| Minimal install: Runs from single HTML file. Server using NodeJS is optional | Requires NodeJS |

Installing Mikupad

Before installing anything, you can run Mikupad by opening the mikupad.html file in your web browser. No additional installation is required; your data is kept by the browser. If the browser can't keep it, Mikupad issues a warning.

For installation, the Mikupad GitHub repository can be found here: https://github.com/lmg-anon/mikupad. Using git automates updating and gives you the option of setting up the Mikupad server. Alternatively, you can download the file(s) directly.

The optional Mikupad server offers the added benefit of storing all your data locally, so you don’t have to worry about your browser wiping your Sessions. To set up the server:

- Clone the repository: `git clone https://github.com/lmg-anon/mikupad.git`

- Install Node.js on your server

- Start Mikupad by running the file at ~/mikupad/server/start.bat

- You can access the server on port 3000:

[server address]:3000

Setting up Mikupad Parameters for first run

Setup of Parameters is similar to setting up SillyTavern's API Connections, though the Mikupad interface is more manual. You'll need:

- Server URL: localhost or API URL

- API type: llama.cpp, KoboldCpp, OpenAI Compatible, or AI Horde

- API Key: Needed for OpenAI Compatible, or if you've set up your local inference with an API key

- Model: For OpenAI Compatible this may self-populate into a dropdown. If not, you'll need to look up the name of the model you're using in your provider's documentation and type it in. Not required for other API types.
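To make the four settings above concrete, here is a minimal Python sketch of how they typically map onto an OpenAI-style text completion request. This is not Mikupad's own code: the `/v1/completions` path, the example port, and the model name are assumptions, so check your backend's documentation for the real values. The request is only built, never sent.

```python
import json
from urllib import request


def build_completion_request(server_url, api_key, model, prompt,
                             max_tokens=256, stream=True):
    """Build (but do not send) an OpenAI-style text completion request."""
    payload = {
        "model": model,            # the "Model" field
        "prompt": prompt,          # the story text so far
        "max_tokens": max_tokens,  # generation limit
        "stream": stream,          # True = tokens arrive as they are written
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # local backends are often run without a key
        headers["Authorization"] = f"Bearer {api_key}"
    return request.Request(
        url=server_url.rstrip("/") + "/v1/completions",  # assumed path
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Hypothetical local server URL and model name, for illustration only.
req = build_completion_request(
    "http://localhost:5001", "", "example-model", "Once upon a time")
print(req.full_url)
print(json.loads(req.data))
```

If a request like this fails against your backend, the same three things are worth checking in Mikupad's Parameters: the URL, the key, and the exact model name.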

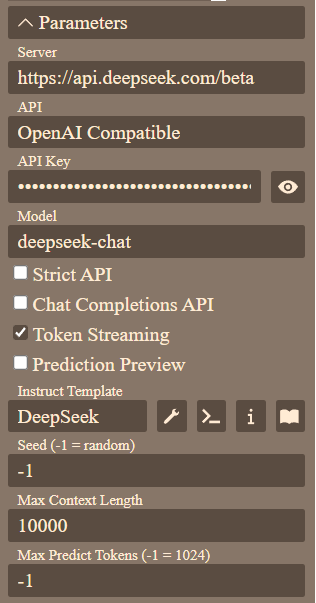

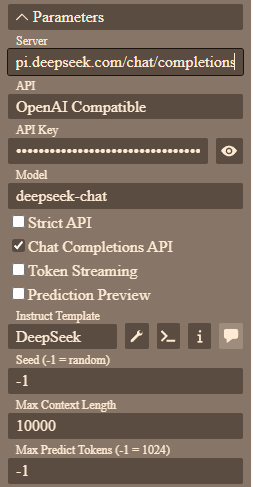

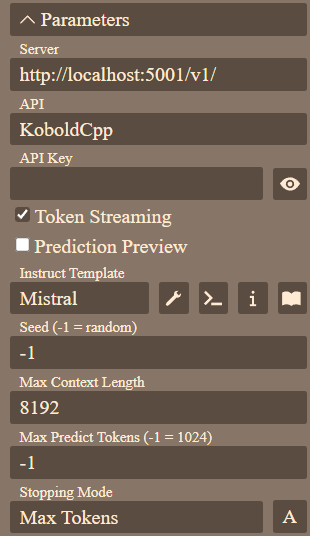

Example Parameters Settings

Below are some example Parameters settings that should get you started. Refer to the API documentation for your inference provider or engine.

| DeepSeek Streaming | DeepSeek Chat Completion | Kobold Local | OpenRouter |
|---|---|---|---|
| See below for DS Instruct Template. Note: beta endpoint only supports deepseek-chat model | Note: FIM not available for Chat Completion | Local Model, set Instruct Template to model in use. | Both Chat Completion and Streaming use same Server URL, just check the appropriate box. Begin typing at Model and Mikupad will show available models with those names. Set Instruct Template to model in use. |

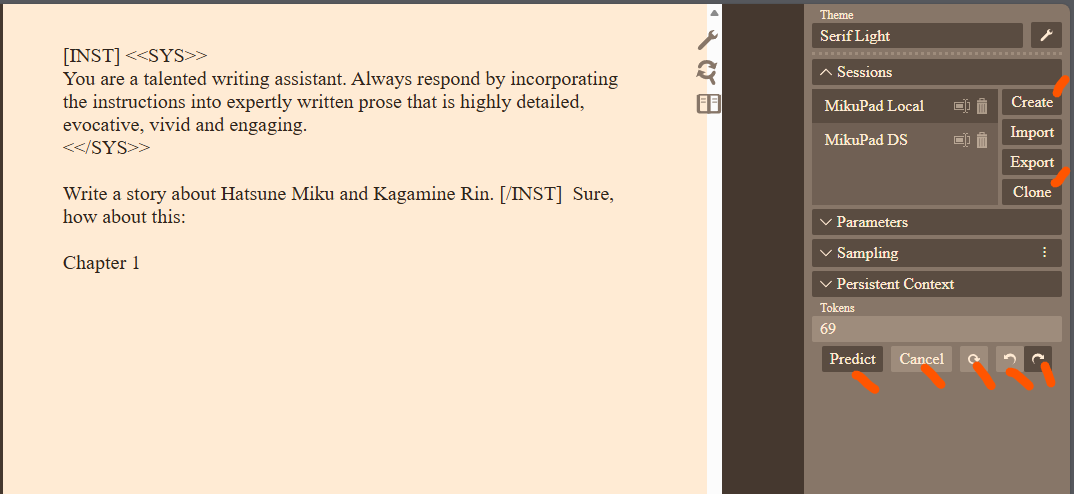

Mikupad Test Run

Once your parameters are set up, try running the basic Miku story provided with Mikupad. Click Predict and it should either start writing (for Streaming) or start flashing (for Chat Completion). For Streaming, click Cancel to force it to stop writing midstream. If nothing happens or you get an error, go back to your Parameters setup and make corrections.

Below is the basic interface. Going from top to bottom of Session:

- Create: Creates an example Miku Session. Really only useful for testing, as it resets all your settings, including Parameters, to their defaults.

- Import/Export: Exports and Imports a Session. THIS EXPORTS YOUR API KEY AS WELL. Handle these files with caution!

- Clone: Creates a local copy of the Session. You will use this most often as it creates a duplicate story and keeps your Parameters settings intact.

API Key Exposure

Export function outputs your API key! Don't share the file with anyone you don't want to be able to access your API funds!
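If you do need to share an exported Session, one option is to blank the key out of the file first. Below is a minimal Python sketch that walks parsed JSON and empties any field whose name looks like an API key. The field names in the example (`endpointAPIKey`, `maxPredictTokens`) are hypothetical; open your own export and check what the key field is actually called before relying on this.

```python
import json


def _looks_like_api_key(name):
    """True for names such as 'apiKey', 'api_key', or 'endpointAPIKey'."""
    normalized = name.lower().replace("_", "").replace("-", "")
    return "apikey" in normalized


def scrub_api_keys(node):
    """Return a copy of parsed JSON with API-key-like fields blanked."""
    if isinstance(node, dict):
        return {
            key: "" if _looks_like_api_key(key) else scrub_api_keys(value)
            for key, value in node.items()
        }
    if isinstance(node, list):
        return [scrub_api_keys(item) for item in node]
    return node  # strings, numbers, booleans, None pass through unchanged


# Hypothetical export contents, for illustration only.
exported = json.loads(
    '{"name": "Dick and Jane", '
    '"settings": {"endpointAPIKey": "sk-secret", "maxPredictTokens": 512}}'
)
print(json.dumps(scrub_api_keys(exported)))
```

Search the scrubbed file for your key afterwards anyway; a name-based filter like this can't catch a key stored under an unexpected field.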

Going from left to right on the bottom bar:

- Predict: This will begin creating a response. If "Streaming" is turned on in Parameters, you'll see it written out in real time. It will run until it decides to stop, hits the token limit set in Parameters, or you hit Cancel.

- Cancel: Stops generating text

- Regenerate: Regenerate the previously created block of text

- Undo: Erase the previously created block of text

- Redo: Un-Erase the previously deleted block of text

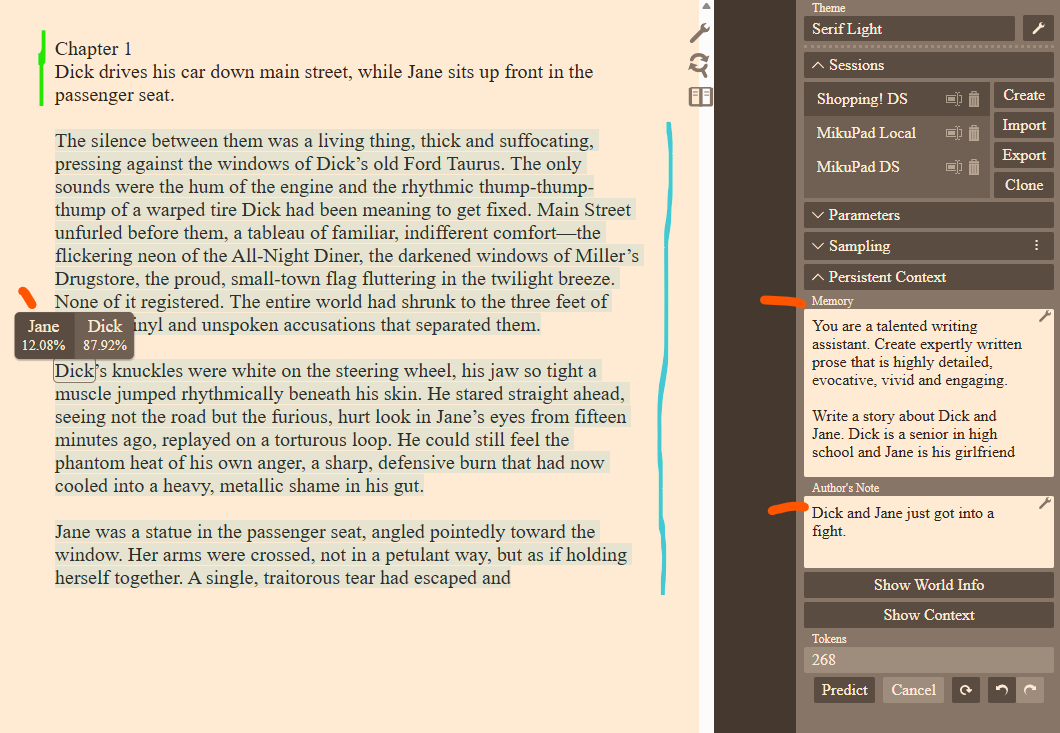

Dick and Jane test run: SOTA API Models

Session Formatting: Using Memory

After playing with Mikupad a bit, I realized much of it focuses on local-inference instruct models. This section is commentary on how to configure Mikupad for large online models via API, which require much less handholding and formatting. The example session is connected to the DeepSeek API.

Some notes on the example, covering format, session setup, and using Memory:

- I'm ignoring Instruct and System prompts and their structure, and just providing DS text. It works fine like this.

- I've moved all of the instruction from top of context to Memory. Memory works akin to ST Character Description and is always inserted at the top of the context. As with ST, this should include everything that's permanent about the prompt. Here, it's writing instructions and descriptions of the protagonists. I like this setup better than including instruction at the top of the story, because the top of the story will, eventually, fall out of context.

- The Memory shown is very short so that it fits this demo. In practice, it will be as long as a typical ST Character Card.

- Note that any Jailbreaks would also be inserted into Memory so that they're always available at the top of context.

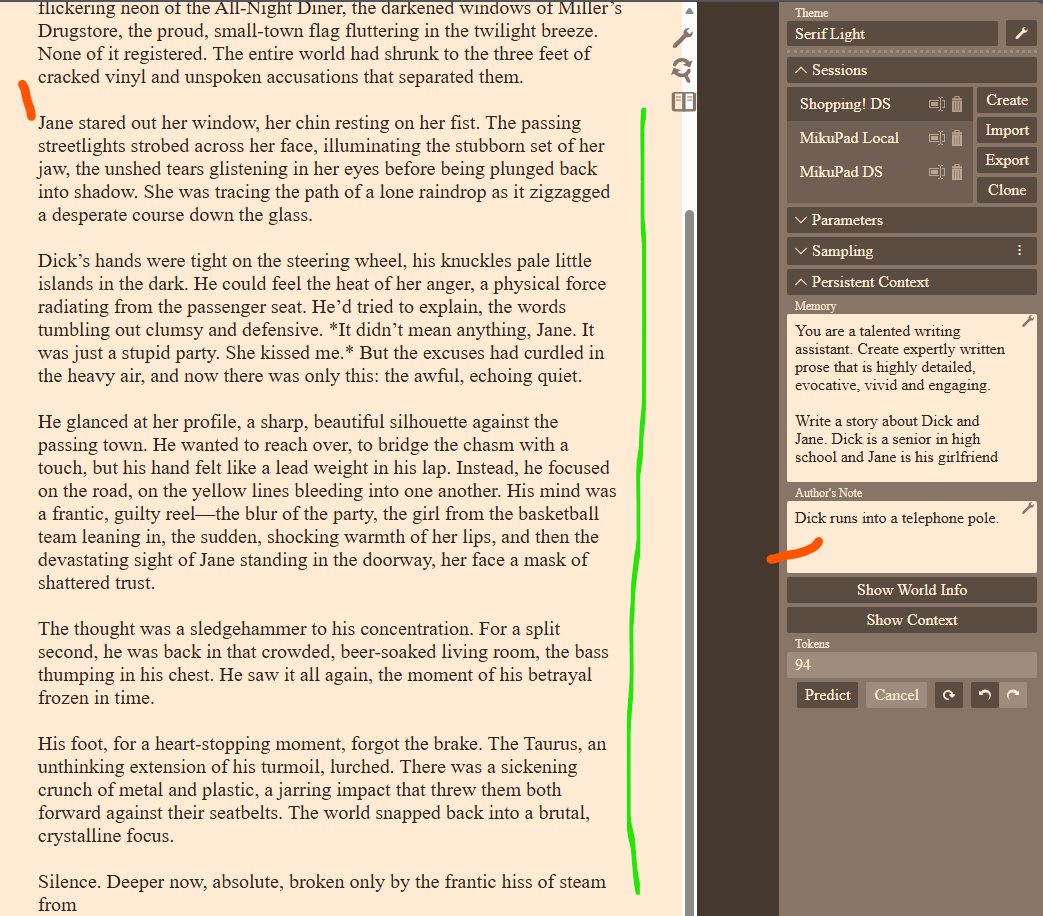

Author's Note

Author's Note is used differently from ST: it's for directing the immediate story, rather than serving as a memory device. In the above example, I'm noting that the two protags are fighting. I could change this before the next round of text generation to have Dick and Jane make up, break up, or get into a car wreck, and the LLM will take that direction and move the story there. The note should be erased once you move past that part of the story.

For ST, Author's Note gets used more like a mid-term memory for a particular chat: it gets erased once the chat is reset, and will usually stay in place for quite a while, persisting over several rounds of roleplay.

LogProbs (Streaming Only)

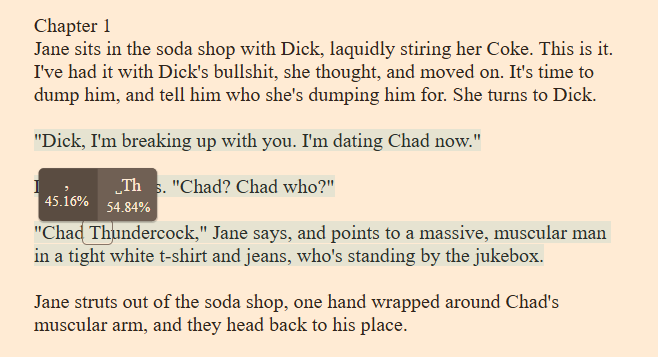

In the above, the green section is what I wrote and the blue is the LLM output. Hovering the cursor over a token shows that paragraph 2 had an 87.92% chance of starting with Dick. If you click on Jane, Mikupad will replace Dick with Jane and you can start the generation again from there. These "token probabilities" are called LogProbs and are pulled in when you activate Streaming on most APIs. YMMV: OpenRouter was missing this functionality in all my testing.
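For the curious, a logprob is just the natural logarithm of a token's probability, so turning one into the percentage shown on hover is a single `exp`. The sketch below uses the 87.92% figure for Dick from the example; the value for Jane is made up for illustration, and the dictionary shape is an assumption rather than any particular API's response format.

```python
import math


def logprob_to_percent(logprob):
    """Convert a natural-log probability into a percentage."""
    return math.exp(logprob) * 100.0


def rank_candidates(top_logprobs):
    """Sort candidate tokens from most to least likely."""
    pairs = ((tok, logprob_to_percent(lp)) for tok, lp in top_logprobs.items())
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


# "Dick" matches the 87.92% from the example; "Jane" is a hypothetical value.
candidates = {"Jane": math.log(0.09), "Dick": math.log(0.8792)}

for token, percent in rank_candidates(candidates):
    print(f"{token}: {percent:.2f}%")
```

The percentages for the listed candidates rarely sum to 100, since the API only returns the top few tokens out of the whole vocabulary.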

In the below, I took the Dick and Jane story from above, changed the start of paragraph 2 to Jane, added a new Author's Note, then restarted it. You can see the story head off in a slightly different direction.

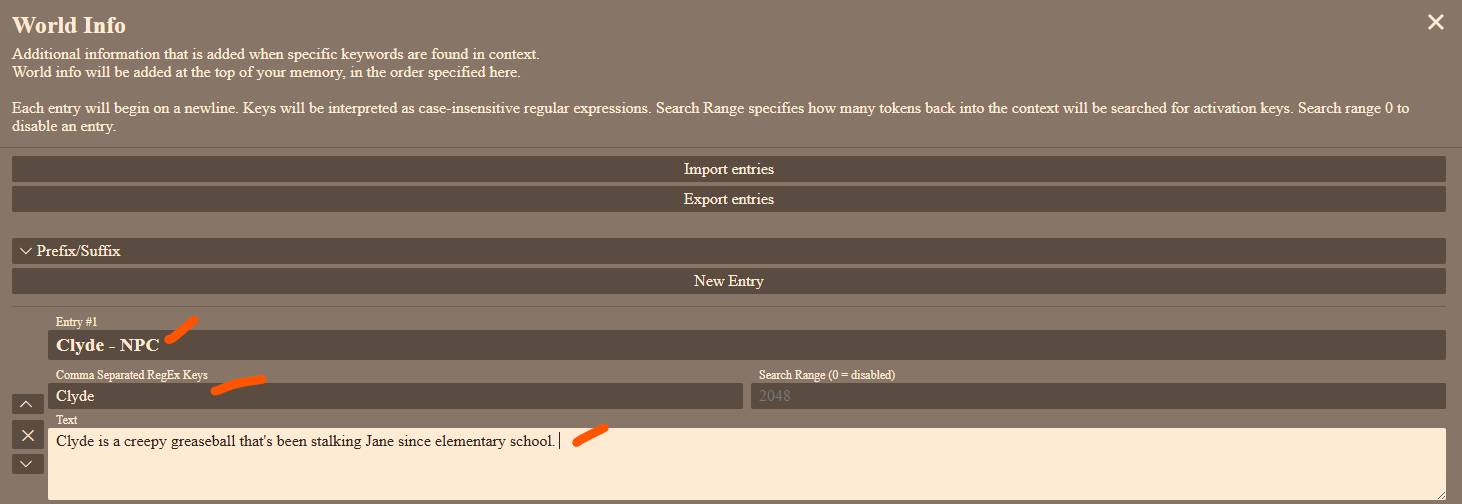

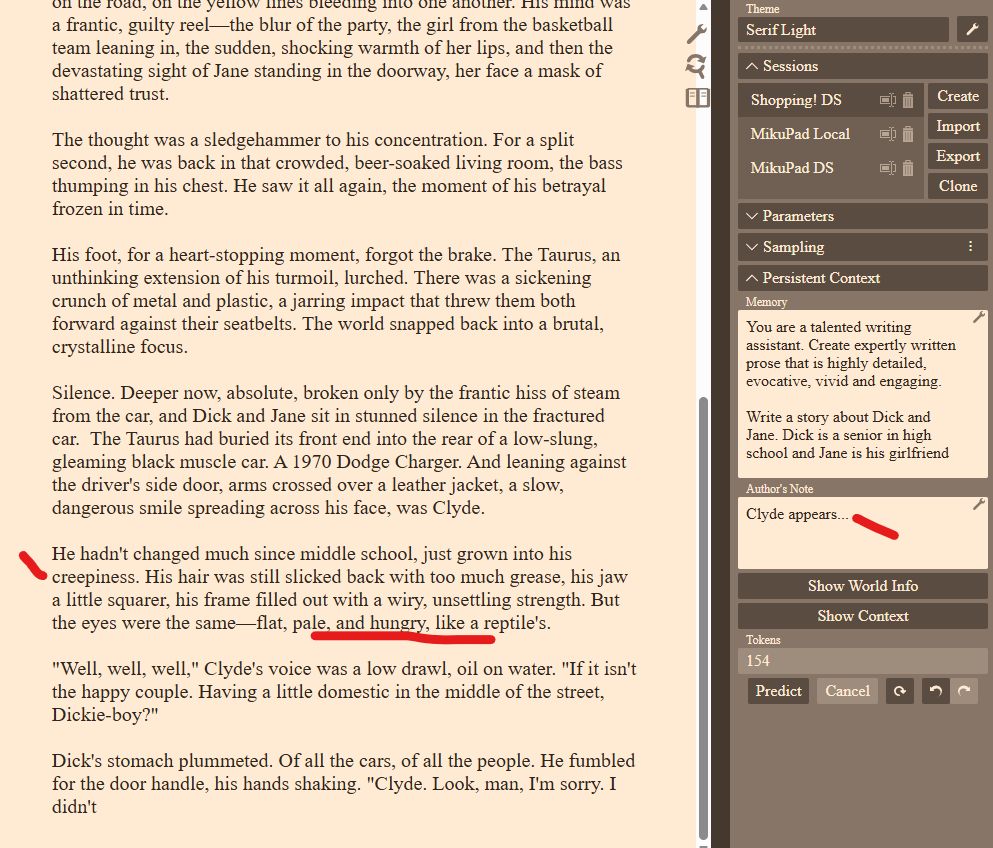

Worldbook

The Worldbook works the same way as in ST, but with more limited functionality. As in ST, Mikupad looks for the trigger word (RegEx Keys) and inserts the matching entry into the context. Here's an entry for Clyde, and him being inserted into the story.
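The trigger mechanism can be sketched in a few lines of Python: each entry pairs a regex key with the text to inject, and an entry fires when its key matches the story text. This is an illustration of the idea only, not Mikupad's implementation; the entry text for Clyde is a placeholder, and case-insensitive matching is an assumption.

```python
import re


def triggered_entries(entries, story_text):
    """Return the text of every entry whose regex key matches the story."""
    return [
        text
        for pattern, text in entries
        if re.search(pattern, story_text, flags=re.IGNORECASE)
    ]


# Hypothetical World Info entries: (regex key, text to insert).
entries = [
    (r"\bClyde\b", "[Clyde: placeholder description of Clyde]"),
    (r"\bcar wreck\b", "[Car wreck: placeholder description of the crash]"),
]

story = "Dick slammed the door just as Clyde pulled into the driveway."
print(triggered_entries(entries, story))
```

The `\b` word boundaries keep "Clyde" from firing on longer words such as "Clydesdale", which is the main reason to write keys as regexes rather than plain substrings.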

Fill-In-Middle

Fill-In-(the)-Middle (FIM) lets the user have Mikupad write the middle of a story, given a beginning and an end. There's no real function like this in ST; it's a concept unique to story writers. Example below. When you add a {fill} tag at the spot to be filled, the LLM fills in the middle to match the endpoint.

| How it started | How it's going |
|---|---|
| | |

Getting FIM set up and working is tricky. The appendix shows a working setup for DeepSeek. Once I've worked out a better way, or have more examples, I'll add them to this guide. In the meantime, the best source of information is to ask on the Mikupad GitHub page.
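Conceptually, the {fill} tag splits the story into a prefix and a suffix, and the model is asked to write whatever belongs between them. The Python sketch below shows that split and a request body in the common completion-API shape, where the text before the gap goes in `prompt` and the text after it in `suffix`. Treat the field names and the `deepseek-chat` default as assumptions to verify against your provider's FIM documentation; this is not Mikupad's internal code.

```python
def split_fill(story, tag="{fill}"):
    """Split a story into (prefix, suffix) around a single fill tag."""
    if story.count(tag) != 1:
        raise ValueError(f"expected exactly one {tag} tag")
    prefix, suffix = story.split(tag)
    return prefix, suffix


def build_fim_payload(story, model="deepseek-chat", max_tokens=256):
    """Build a FIM-style request body (field names are an assumption)."""
    prefix, suffix = split_fill(story)
    return {
        "model": model,
        "prompt": prefix,   # everything before the gap
        "suffix": suffix,   # everything after the gap
        "max_tokens": max_tokens,
    }


story = "Dick stormed out of the house. {fill} Jane smiled and took his hand."
print(build_fim_payload(story))
```

The `max_tokens` value matters more than usual here, since the model has to land on the suffix within that budget.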

Prompt Formatting and Show Context feature

Token Streaming and Chat Completion submit differently formatted prompts to the API. Fortunately, you can view the prompt as it's sent: the option to see the active context is Persistent Context > Show Context. Looking back at the Clyde example above, here's the Streaming and Chat Completion formatting.

Streaming Context Example

Chat Completion Context Example

A few notes on these formats:

- Streaming here is mostly absent any instruct blocks because I haven't used them, but the formatting for Chat Completion is obvious, as Mikupad has blocked together the text to send over with `role: user` information and the context put into a `content` block.

- Looking at the Streaming example, note that you can change the order in which Mikupad places information for both the Streaming and Chat Completion options. Here, the order is World Info, Memory, and last, Prompt. By changing the Advanced Context Ordering you can swap these blocks of information around. (Note that Author's Note is sort of jammed into the bottom... not sure what's going on here.)
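The two formats described above can be sketched in Python as follows. The block order (World Info, Memory, Prompt) and the single `role: user` message come from the examples; the block names, the newline separator, and the sample text are assumptions for illustration, and Author's Note is left out because its placement isn't clear.

```python
def assemble_context(blocks, order):
    """Join the non-empty context blocks in the requested order."""
    return "\n".join(blocks[name] for name in order if blocks.get(name))


def as_chat_messages(context):
    """Wrap the assembled context as a single user message."""
    return [{"role": "user", "content": context}]


# Placeholder block contents, for illustration only.
blocks = {
    "memory": "[Writing instructions and protagonist descriptions]",
    "world_info": "[Clyde: placeholder description of Clyde]",
    "prompt": "Dick slammed the door just as Clyde pulled into the driveway.",
}

# Streaming (text completion): one plain string, in the order shown above.
streaming_context = assemble_context(blocks, ["world_info", "memory", "prompt"])
print(streaming_context)

# Chat Completion: the same text, wrapped in a message list.
print(as_chat_messages(streaming_context))
```

Changing the order list is the equivalent of rearranging blocks in Advanced Context Ordering, and comparing the output against Show Context is a quick way to confirm what your own setup sends.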

Other Mikupad Features

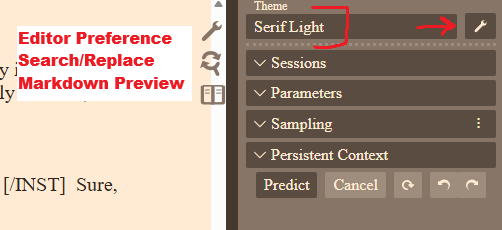

Editor Preferences, Search & Replace, Markdown Preview, Theme

- Editor Preferences

    - Sets text size, spell check, attach sidebar, preserve cursor position, token highlight behavior and color

    - Export of prompt (entire Context) to plain text file, and export/import of entire database (all sessions)

API Key Exposure

Export Full DB function outputs your API key! Don't share the file with anyone you don't want to be able to access your API funds!

- Search/Replace with Plaintext and RegEx modes

- Markdown Preview shows the entire prompt with markdown formats rendered

- Theme allows you to switch to a dark theme, or make your own if you know CSS. You can also import/export themes from here.

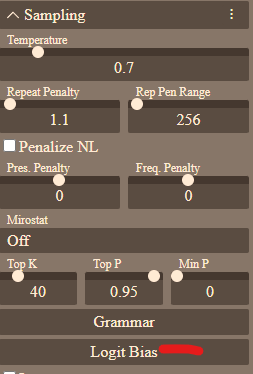

Sampling and Logit Bias

Settings for Temperature, etc. are located here. If you're using Streaming (text completion), you can eliminate words from the LLM vocabulary using Logit Bias. I'll leave the Grammar function as an exercise for the reader...
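As a rough illustration of what Logit Bias does under the hood: many completion APIs accept a map from token ID to a bias value, where a strongly negative bias effectively removes that token from the vocabulary. The Python sketch below builds such a map in the OpenAI-style shape (string token IDs, bias between -100 and 100). The token IDs are hypothetical, since real IDs depend on the model's tokenizer, and other backends may expect a different shape.

```python
def ban_tokens(token_ids, bias=-100):
    """Map each token ID to a strongly negative bias (OpenAI-style shape)."""
    if not -100 <= bias <= 100:
        raise ValueError("bias must be between -100 and 100")
    return {str(token_id): bias for token_id in token_ids}


def sampling_params(temperature, banned_token_ids):
    """Combine temperature with a logit-bias map into request parameters."""
    return {
        "temperature": temperature,
        "logit_bias": ban_tokens(banned_token_ids),
    }


# Hypothetical token IDs; look up real ones with your model's tokenizer.
print(sampling_params(0.8, [1234, 5678]))
```

Keep in mind that a single word may be split into several tokens, or have separate tokens with and without a leading space, so banning one ID often isn't enough to remove the word.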

Story Writing Techniques with Mikupad

Writing stories with Mikupad differs quite a bit from role play frontends. The rest of this section is a light overview of some techniques to consider, most (all?) of which will also apply to other story writing platforms.

At the core: Roleplay is Reactive. Things happen, and {{user}} responds. The LLM is a partner, playing {{char}} and other NPCs.

Story writing is Generative. The writer sets a direction, and the LLM creates the words based on that direction. The LLM is a junior co-author.

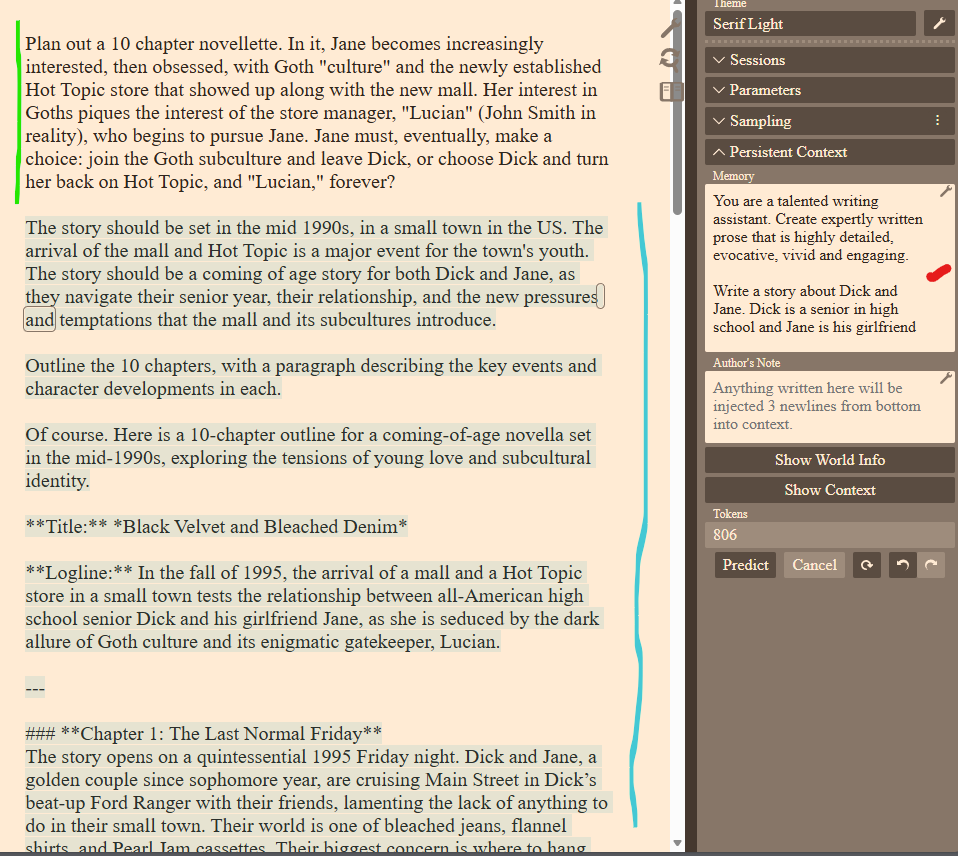

- Planning: Mikupad can be used for more than short stories, especially given the long context of current LLMs. If you plan to write something complex, you can use Mikupad to preplan the novel structure and major events before starting. Below is an example of this sort of exercise. The LLM created a ~2000 token, 10 chapter novelette plan with a textbook narrative arc (exposition, incident, rising action, climax, resolution). You would then edit that plan, then tell the LLM to write Chapter 1, then Chapter 2, etc. My writing is in green, the LLM's in blue. Notably, the LLM didn't feel I gave enough direction and added some of its own... a 1990s Young Adult novel was exactly what I had in mind. If it wasn't, I could correct it here and have it restart.

- Guidance: As stated earlier, elements like Author's Note serve a different function for story writers than for a role play engine. So does Memory, compared with its role play equivalent, the Character Card. They exist to define how the story is written.

- Memory = the always-present story premise

- Author’s Note = controlling tone/style/themes on the fly

- World Info = injecting relevant lore when triggered by keywords; as such, this function is much simpler for story writers than for role play

- Voice: Story writing is a conceptual shift from performance to composition. For this reason, prompts like "Write the novel in the style of Edgar Allan Poe" make sense in a way that they don't for role play.

- Role play frontends focus on persona simulation: Consistent first-person character speech, Emotional reactions, Round-based action

- Story writer frontend focuses on: Third-person narration, Descriptive prose, Pacing, structure, emotional beats, Scene framing instead of dialogue trading

Appendix

Other Links

SillyTavern Writing Guides

Lorebook Starting Guide: https://rentry.org/SillyT_Lorebook

Character cards writing guide: https://rentry.org/NG_CharCard

Where to put information in SillyTavern: https://rentry.org/NG_Context2RAGs

Excellent general guide on setting up SillyTavern for Roleplay: https://rentry.org/sukino-findings

Setting up SillyTavern (and Mikupad) Server

https://rentry.org/SillyTavernOnSBC

Access your models, cards, and sessions from any device with a web browser, anywhere in the world, from a low-power, low-profile SBC server

DeepSeek Instruct Template

This template will enable FIM (Fill-In-Middle) support for DS. Setup for other models should be similar.

Title Image Source

Note to self: top image produced with lora using base model noobvpred from ~60 images from source: https://www.wadachizu.com/painting/