Here is my experience following https://rentry.org/Mikubox-Triple-P40:

I spent $1150 (plus $85 for a new SSD). $500 of this was the GPUs.

- 9.2 t/s on Llama3 70B q5k_m, with `-c 4096 -ngl 99 --split-mode row --flash-attn -t 4`

- 8 t/s on Llama3 70B q6k, same settings

- 7.9 t/s on CommandR+ q4k_m, same settings

- 42 t/s on Llama3 8B q8, with `-c 8192`

- 6.4 t/s on WizardLM2 8x22B q4k_s, with:

  ```shell
  numactl --interleave=0-1 ./bin/server -m wizLM2_Q4_K_S.gguf -c 4096 --split-mode layer --tensor-split 16,17,17 --main-gpu 0 --flash-attn -t 8 -ngl 50 --numa numactl --no-mmap
  ```

- 2.5 t/s on WizardLM2 8x22B q6k, with:

  ```shell
  numactl --interleave=0-1 ~/llama.cpp/bin/server -m ~/llms/wiz2-8x22B_q6k.gguf -c 2048 --split-mode layer --tensor-split 11,12,12 --main-gpu 0 --flash-attn -t 8 -ngl 35 --numa numactl --no-mmap
  ```

(These numbers were measured with the llama.cpp server at commit 2b737caae100cf0ac963206984332e422058f2b9 from May 28, 2024, with the GPUs power-limited to 140W.)
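In both WizardLM2 commands the `--tensor-split` ratios add up exactly to the `-ngl` value (16+17+17 = 50, 11+12+12 = 35), so each ratio can be read as a per-GPU layer count. The sketch below just shows that proportional arithmetic. `layers_per_gpu` is a hypothetical helper, and its largest-remainder rounding is an assumption that may not match exactly how llama.cpp rounds internally.

```python
def layers_per_gpu(tensor_split: list[float], n_gpu_layers: int) -> list[int]:
    """Split n_gpu_layers across GPUs in proportion to tensor_split.

    Hypothetical helper for illustration; llama.cpp's own rounding may differ.
    """
    total = sum(tensor_split)
    # Exact (fractional) share of offloaded layers for each GPU.
    exact = [n_gpu_layers * w / total for w in tensor_split]
    counts = [int(x) for x in exact]
    # Hand any leftover layers to the GPUs with the largest fractional remainders.
    leftover = n_gpu_layers - sum(counts)
    by_remainder = sorted(range(len(exact)), key=lambda i: exact[i] - counts[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    return counts


print(layers_per_gpu([16, 17, 17], 50))  # q4k_s command: [16, 17, 17]
print(layers_per_gpu([11, 12, 12], 35))  # q6k command: [11, 12, 12]
```

When the ratios already sum to `-ngl`, no rounding is needed and each GPU gets exactly its listed number of layers.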

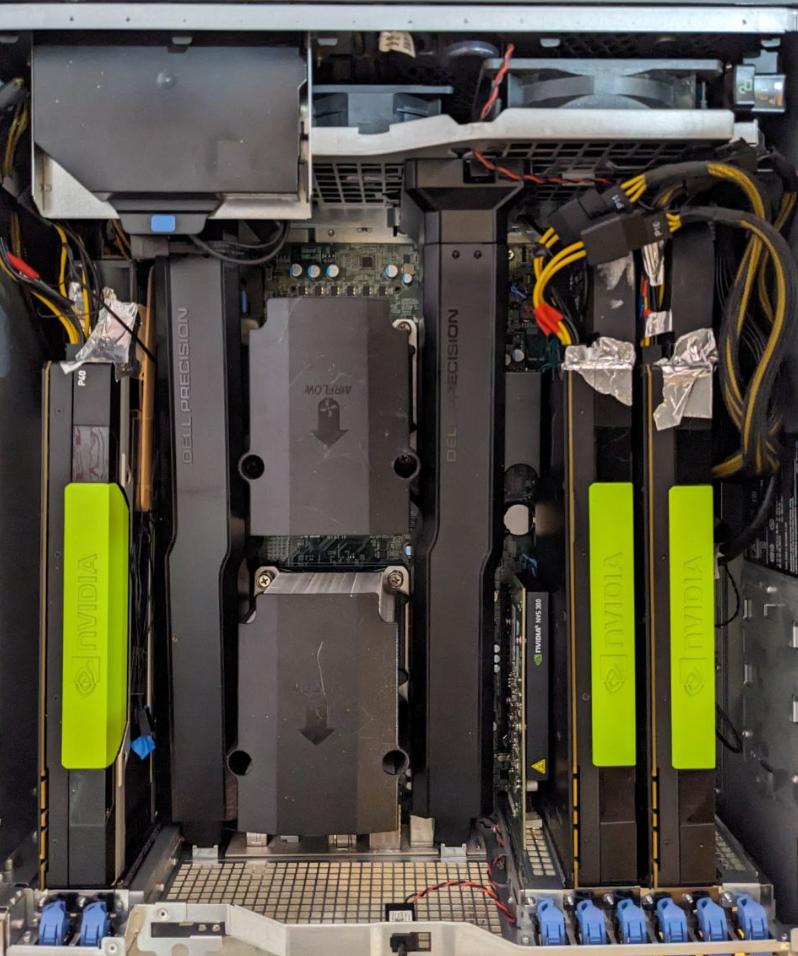

hardware build details

The T7910 has two 6-pin PCIe and one 8-pin PCIe power cable at the bottom of the case, plus one 8-pin at the top. I initially didn't find the top 8-pin plug and thought it might be missing, but it was just tucked away. You will need three adapters, each of which combines two female 8-pin PCIe connectors into one male 8-pin CPU connector (which is what the P40 takes). Each 8-pin cable fills its own adapter (leaving that adapter's second PCIe input empty), and the two 6-pins together fill the third (a 6-pin plug fits right into six of the eight holes of an 8-pin socket). Each 6-pin delivers 75W, each 8-pin delivers 150W, and the PCIe slot itself delivers 75W.
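Adding up those ratings per card shows that every P40 ends up with the same budget, comfortably above the 140W power limit used in the software section. A small sketch of the arithmetic; the card labels and which 8-pin feeds which card are illustrative assumptions, only the wattages come from the text above.

```python
# Rated wattage per power source, as listed above.
WATTS = {"slot": 75, "6-pin": 75, "8-pin": 150}

# Illustrative mapping of cables to cards through the three adapters.
CARDS = {
    "GPU A": ["slot", "8-pin"],           # bottom 8-pin, second adapter input empty
    "GPU B": ["slot", "8-pin"],           # top 8-pin, second adapter input empty
    "GPU C": ["slot", "6-pin", "6-pin"],  # both 6-pins share the third adapter
}

POWER_LIMIT_W = 140  # the nvidia-smi -pl value from the software section


def budget(sources: list[str]) -> int:
    """Total rated watts available to one card."""
    return sum(WATTS[s] for s in sources)


for name, sources in CARDS.items():
    watts = budget(sources)
    print(f"{name}: {watts} W available, {watts - POWER_LIMIT_W} W above the {POWER_LIMIT_W} W limit")
```

Each card comes out at 225W, so the 140W cap leaves 85W of headroom per card.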

It's a little noisy. Even with just two BFB0812HDs running (no third card or fan), I found the rushing air obnoxious to sit next to, though it might be OK with headphones. The machine is in my basement, and I can hear it from a few feet beyond the top of the stairwell. If you could run the fans below 100%, it would probably be fine (they have plenty of cooling headroom to spare).

Speaking of control: if you get the BFB0812HD, you also have to tie the "PM" control wire to the power connector's 12V, or the fan won't spin at all. I just spliced it to the connector's 12V along with the fan's own 12V wire, but you could probably use a dedicated fan controller instead. Look under the sticker in the center of the fan to find the labeling for the 4 wires.

One of my three GPU fans is an ARCTIC S4028-6K, spliced in the same way as the BFB0812HDs (but with the control wire left untouched). I made a duct to the card out of cardboard and foil tape, which was finicky and annoying, so stick with the BFB0812HD unless it costs something like $50. The S4028 also comes in a 15K RPM variant; get that one if you want to be sure of the cooling. The P40 with the 6K fan runs about 10°C hotter than the ones with BFB0812HDs. I haven't seen it go above 60°C, so it's probably fine. The S4028-6K is quieter overall than the BFB0812HD, but it sounds more like a "loud little fan" than "rushing air", which may be more annoying.

I couldn't get the SATA connections on the SAS-fed drive sleds working. In the end I just hooked the drive up to the regular SATA port, which is buried behind the other side panel (the "you're not supposed to remove this one" side).

I tweaked a bunch of things in the BIOS. The infamous "Above 4G Decoding" was one; others might have helped, but that was probably the only mandatory one. I didn't take notes, sorry.

software config details

In root's crontab, add:

```
@reboot nvidia-smi -pm ENABLED && nvidia-smi -pl 140
```

You don't benefit from going above 140W. If you have layers offloaded to the CPU, you can go as low as 125W.
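After a reboot, one way to confirm the cron job took effect is to query `nvidia-smi --query-gpu=index,power.limit --format=csv,noheader,nounits`. Below is a minimal Python sketch of that check. `parse_limits` and `check_power_limits` are hypothetical helpers, and the sample text in the usage note is made up to show the CSV shape, not captured from this machine.

```python
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=index,power.limit", "--format=csv,noheader,nounits"]


def parse_limits(csv_text: str) -> dict[int, float]:
    """Map GPU index -> power limit in watts from nvidia-smi CSV output."""
    limits = {}
    for line in csv_text.strip().splitlines():
        index, limit = (field.strip() for field in line.split(","))
        limits[int(index)] = float(limit)
    return limits


def check_power_limits(expected_w: float = 140.0) -> bool:
    """Run nvidia-smi and report whether every GPU is capped at expected_w."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    limits = parse_limits(out)
    for index, limit in sorted(limits.items()):
        print(f"GPU {index}: {limit:.0f} W")
    return all(abs(limit - expected_w) < 0.5 for limit in limits.values())
```

For example, `parse_limits("0, 140.00\n1, 140.00\n2, 140.00\n")` gives `{0: 140.0, 1: 140.0, 2: 140.0}`; on the actual box you would call `check_power_limits()` (or `check_power_limits(125)` if you went lower).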

If you leave llama.cpp running but idle, your cards will stay in performance state P0 and draw about 50W each despite doing nothing. To fix this, run `pip3 install nvidia_pstate` and then add this patch to your llama.cpp server. This gets idle draw down to about 10W per card.
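Using the figures above (about 50W per card idling in P0 versus about 10W with the patch), the savings for three cards work out as below. A quick sketch; the daily and yearly figures assume the server sits idle around the clock, which is an assumption for illustration.

```python
N_GPUS = 3
IDLE_P0_W = 50       # per card, stuck in P0 while llama.cpp idles
IDLE_PATCHED_W = 10  # per card with the nvidia_pstate patch

saved_w = N_GPUS * (IDLE_P0_W - IDLE_PATCHED_W)
saved_kwh_per_day = saved_w * 24 / 1000   # assumes 24 idle hours per day
saved_kwh_per_year = saved_kwh_per_day * 365

print(f"{saved_w} W saved, {saved_kwh_per_day:.2f} kWh/day, {saved_kwh_per_year:.0f} kWh/year")
```

That prints `120 W saved, 2.88 kWh/day, 1051 kWh/year`; multiply by your local electricity rate to get a cost.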

Build the server with `cmake . -DLLAMA_CUDA=ON && make -j 28 server`.

If you're doing any CPU offloading, run under `numactl` with interleaving, like in my WizardLM2 examples above. This helps because the machine has 2 CPUs, each with its own quad-channel memory, so you want the weights spread evenly across both. See https://github.com/ggerganov/llama.cpp/issues/1437 for way more details.
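A toy model of what `numactl --interleave=0-1` does: memory pages are handed out round-robin across NUMA nodes, so the CPU-resident weights end up split evenly between the two sockets' memory. The sketch below is a simplification (it ignores huge pages, the page cache, and other kernel details), and the 1 GiB / 4 KiB sizes are hypothetical numbers chosen for illustration.

```python
from collections import Counter


def interleave_pages(n_pages: int, nodes: list[int]) -> Counter:
    """Simplified round-robin page placement, as with an interleave memory policy."""
    return Counter(nodes[page % len(nodes)] for page in range(n_pages))


# Hypothetical: 1 GiB of CPU-resident weights in 4 KiB pages, nodes 0 and 1.
pages = 1024**3 // 4096
print(dict(interleave_pages(pages, [0, 1])))
```

Each node ends up with half the pages (131072 each here), so reads during inference draw on both CPUs' memory channels instead of saturating one.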

opinion zone

If you're willing to spend on a dedicated local inference machine but don't want to go crazy, this is the way to go. Large quants of 70B models run quite fast, you get the current most powerful local model (Wiz2 8x22B?) at reasonable speeds, and significantly larger future models (L3 400B) are out of reach anyway without spending $4000+.

The one exception is if you already own a 3090/4090 in a machine with slots for more cards. Spending $1600 for the same VRAM at roughly 3x the memory bandwidth of this $1150 build, or $800 for 48GB, can reasonably be argued to be on the same Pareto front. But if you're starting from scratch, no way.
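A rough cost-per-GB comparison using the post's own prices. This assumes 24GB per P40 and per 3090, reads "$1600 for same VRAM" as buying two more 3090s on top of one you already own (and "$800 for 48GB" as buying one more), and compares a whole-machine price against GPU-only prices, so treat it as a back-of-the-envelope sketch.

```python
# (label, dollars, total VRAM in GB); prices from the post, 24 GB per card assumed.
OPTIONS = [
    ("3x P40 build (whole machine)", 1150, 3 * 24),
    ("owned 3090 + two more (GPUs only)", 1600, 3 * 24),
    ("owned 3090 + one more (GPU only)", 800, 2 * 24),
]


def cost_per_gb(dollars: float, vram_gb: float) -> float:
    """Dollars spent per GB of total VRAM."""
    return dollars / vram_gb


for label, dollars, vram_gb in OPTIONS:
    print(f"{label}: {vram_gb} GB, ${cost_per_gb(dollars, vram_gb):.2f}/GB")
```

This gives about $15.97/GB, $22.22/GB, and $16.67/GB respectively, which is why the 3090 routes only make sense if you already own the first card and a machine to put them in.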

You could also go with 3x P100 for an additional ~$150 and get blazing-fast speeds on 4-bit 70B quants with exllama, but then models like 8x22B or CR+ are totally beyond your reach at any dignified quant. I've also heard of someone running a 22GB-modded 2080 Ti plus 2x P100.