The joy of creation

Generative AI models are getting more powerful and robust every day: there are dozens upon dozens of highly specialized ones, and hundreds of incredibly powerful well-rounded ones.

This tutorial focuses on getting everything you need to run Stable Diffusion on your computer, and on giving you a good understanding of how it all works.

Let's start!

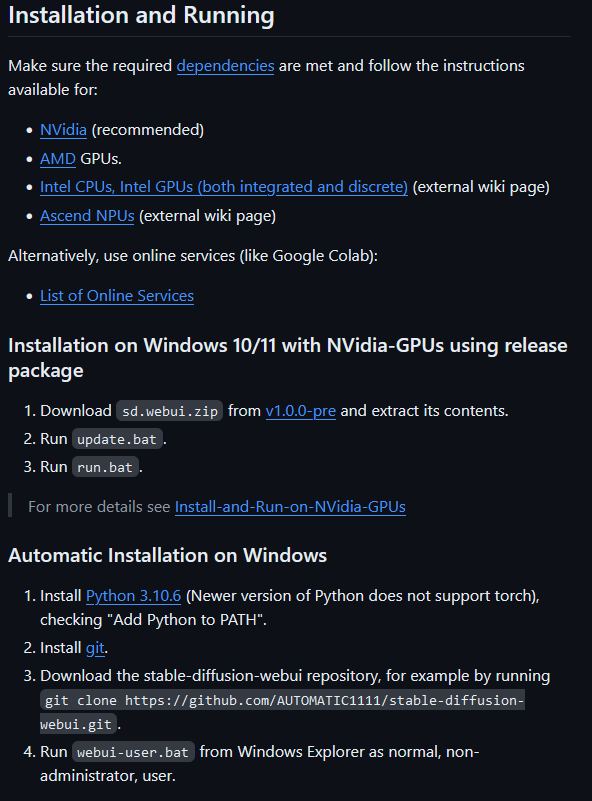

First, we need to download Stable Diffusion.

As everything is explained on the page, I won't go into too much detail for this step: follow the steps described in the 'Installation and Running' section. I recommend the Automatic Installation on Windows (the one I used myself).

Install it somewhere convenient, and remember where you put that folder! We're going to go back and forth to it later!

The installation folder of Stable Diffusion (where webui-user.bat is) will be referred to as 'stable-diffusion-webui' in this tutorial.

Great, you now have Stable Diffusion running locally on your PC! :D

(Even though it opens in your web browser, the application runs without an internet connection.)

But that's not all we need!

In this tutorial, we'll see what Checkpoints, VAEs and embeddings are.

The most important element of our render pipeline is the Checkpoint (which I will also often call 'Model' in this tutorial).

What are Checkpoints?

Basically, it's the heart of your generation: it creates what you request based on the images it was trained on. Two models can give GIGANTICALLY different results, even with the exact same prompts and settings.

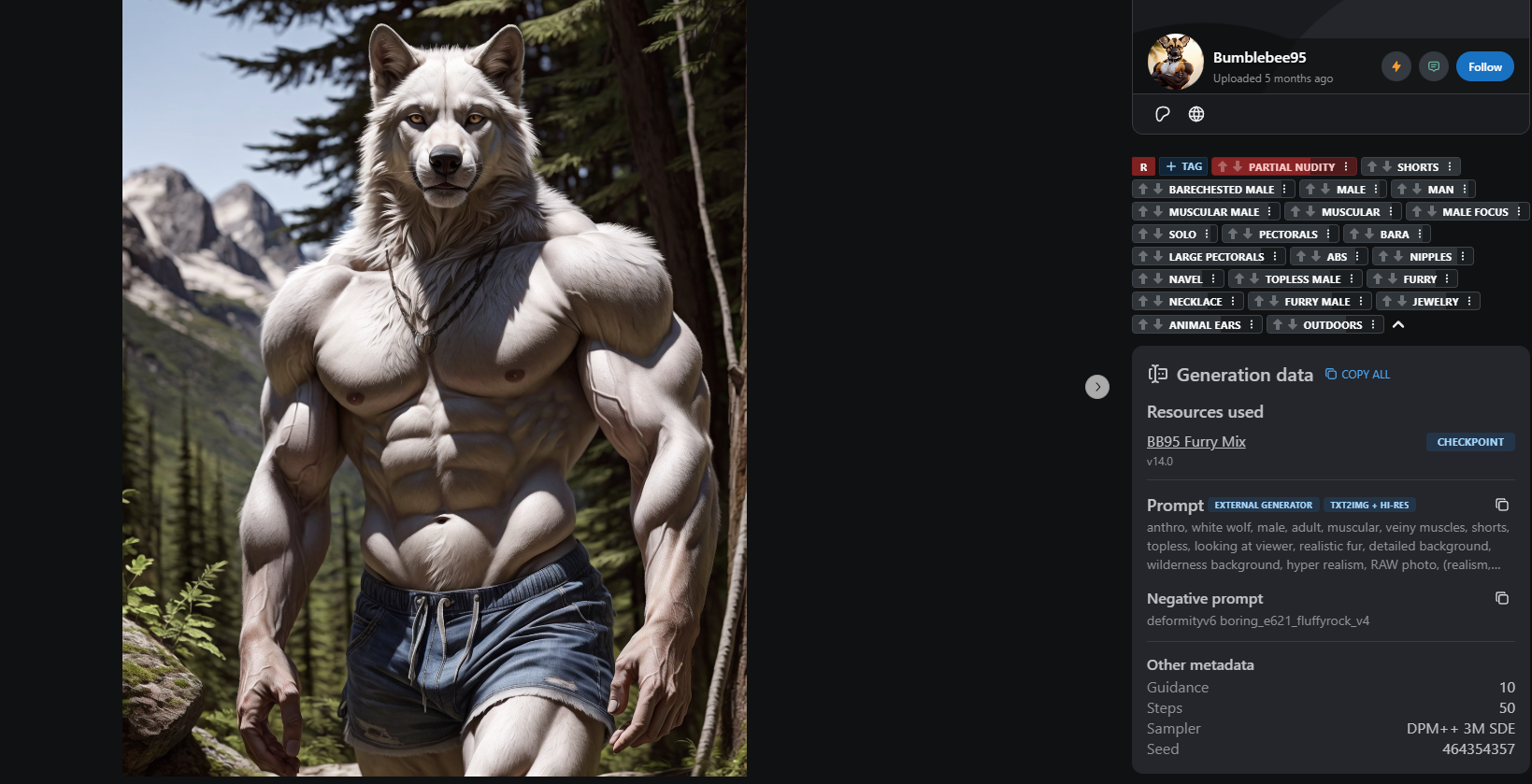

The exact same prompts and settings (and seed), but using:

Yiffymix (left) and BB95 (right)

For this tutorial, ALL the images use the same settings and seed (we'll see them as we go, don't worry). Since I'm using the same seed, all the images will look extremely similar; that's on purpose, and of course they don't reflect the full extent of what the models and Stable Diffusion can do. If you use random seeds, you WILL get an endless variety of results from your prompts.

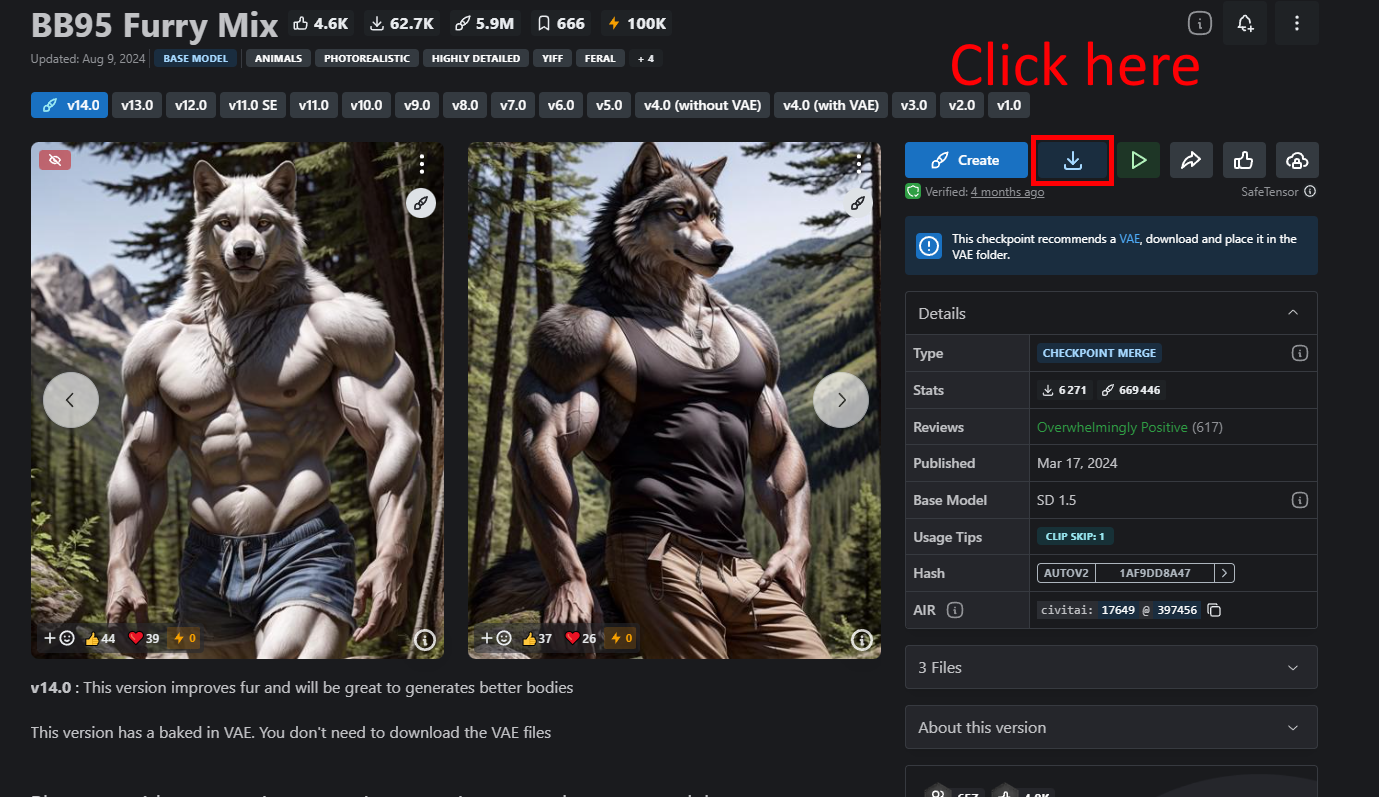

For this tutorial, we'll use the BB95 Furry Mix model, available here:

I highly suggest creating an account on this website and looking around: it contains MANY amazing models of various categories, and tons of beautiful renders.

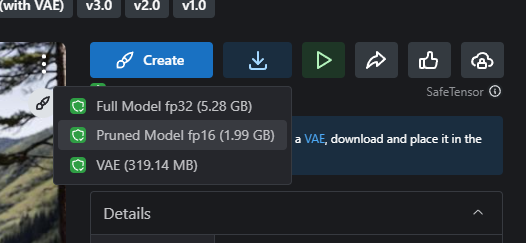

We'll also need to select a version of the model (that's not always required). Here, click on the Pruned Model version.

You must place your checkpoints/models here:

stable-diffusion-webui\models\Stable-diffusion

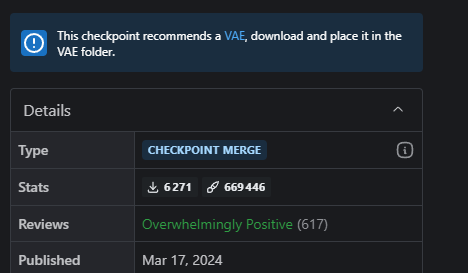

Nice! However, you may have noticed this:

This model's creators recommend a VAE, so let's download it!

To do so, simply click the blue 'VAE' link in the info bubble.

The VAE goes in the folder:

stable-diffusion-webui\models\VAE

While it's downloading...

WHAT is a VAE?

A Variational Autoencoder, or simply VAE, is the part that turns the model's internal (latent) result into the final image you see. A good VAE helps the model make better images: cleaner details on specific elements like eyes, faces, feet and hands, and often better colors overall.

You can use a VAE with any model you want, even if the model's creators didn't recommend it, as long as both are made for the same base (for example SD 1.5 or SDXL).

While everything is still downloading, let's check out the BB95 creations on the page. Click on one of them to display more info.

Checking the prompts and settings used to make nice creations with a model is always useful for later. Here we can see the style used, the sampler and the steps (don't worry, we'll see those in detail soon!), but most importantly for now, we can see that the negative prompt is pretty empty... That's because the creator uses what we call an 'Embedding'!

What is an Embedding?

Embeddings are a somewhat advanced concept, but basically they guide the model by teaching it a concept through a single word it can read in your prompt. They exist for both positive and negative prompts; here we are going to use:

Boring_e621, which is a negative prompt helper.

Embeddings go in the folder:

stable-diffusion-webui\embeddings

With those 3 elements downloaded and in their proper folders:

Models:

stable-diffusion-webui\models\Stable-diffusion

VAE:

stable-diffusion-webui\models\VAE

Embeddings:

stable-diffusion-webui\embeddings
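If you like to double-check before launching, here's a minimal Python sketch that looks for those three folders. The helper name `missing_folders` and the example path `C:\stable-diffusion-webui` are just assumptions for illustration; point it at wherever you installed the web UI.

```python
from pathlib import Path

# The three subfolders used in this tutorial, relative to stable-diffusion-webui.
REQUIRED_FOLDERS = {
    "Models": Path("models") / "Stable-diffusion",
    "VAE": Path("models") / "VAE",
    "Embeddings": Path("embeddings"),
}


def missing_folders(webui_root: Path) -> list[str]:
    """Return the names of the expected folders that don't exist under webui_root."""
    return [
        name
        for name, sub in REQUIRED_FOLDERS.items()
        if not (webui_root / sub).is_dir()
    ]


if __name__ == "__main__":
    # Hypothetical install location: replace it with your own folder.
    root = Path(r"C:\stable-diffusion-webui")
    missing = missing_folders(root)
    if missing:
        print("Missing folders:", ", ".join(missing))
    else:
        print("All folders found!")
```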

We can finally launch Stable Diffusion!

To do so, double-click 'webui-user.bat' in your stable-diffusion-webui folder!

And VOILÀ! SD is ready to generate your first render.

But before that, we need to go over some crucial elements:

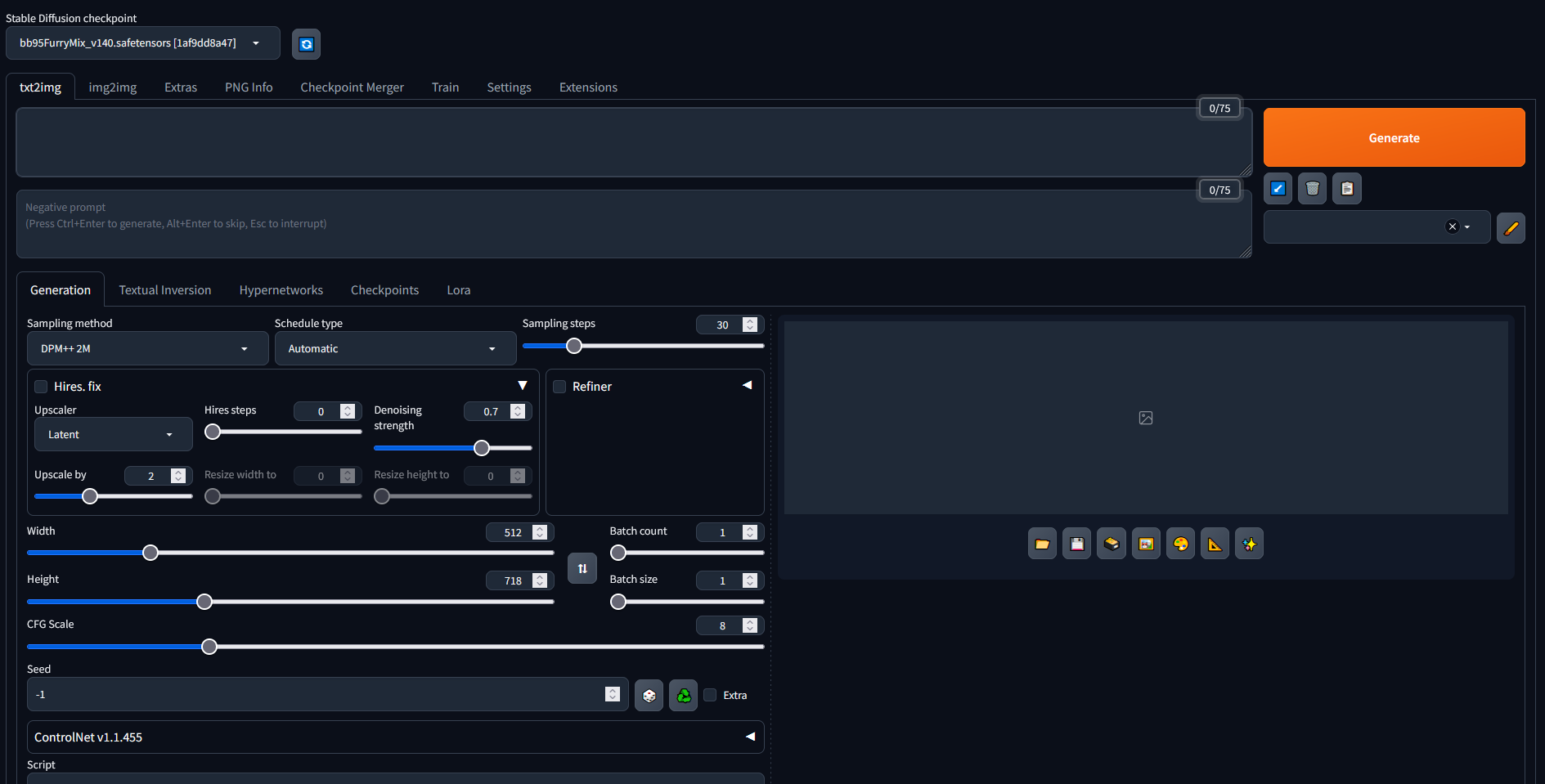

The Stable Diffusion checkpoint:

This is simply the model you want to use. For this tutorial, click on it and select 'bb95furryMix'.

Then we have the main tabs: right now Txt2img is selected, which is exactly what we want for now.

The prompt is what you want to see. For this tutorial, we'll use this prompt:

artwork (Highest Quality, 4k, masterpiece, Amazing Details:1.1) adult, wolf, bare torso, muscular, black fur, grey muzzle.

You can see parentheses () inside: they tell the AI what it MUST take into account during creation, by giving more weight to the words inside (here a weight of 1.1, set by the ':1.1'). Don't overuse them though: they're a useful help when you can't quite get the render you want, but using them too much just cancels out their effect.
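To make the `(words:1.1)` syntax more concrete, here's a minimal Python sketch that splits a prompt into chunks and their weights. It's a simplified illustration with a hypothetical `split_weights` helper, not the web UI's real prompt parser, which also handles nested parentheses, bare `(words)` emphasis and `[words]` de-emphasis.

```python
import re

# Matches "(some words:1.1)" groups. Deliberately simplified: no nesting,
# no bare "(words)" or "[words]" emphasis, no escaped parentheses.
WEIGHTED = re.compile(r"\(([^():]+):\s*([0-9]*\.?[0-9]+)\)")
SEPARATORS = " ,."


def split_weights(prompt: str) -> list[tuple[str, float]]:
    """Split a prompt into (text, weight) chunks; plain text gets weight 1.0."""
    chunks: list[tuple[str, float]] = []
    pos = 0
    for match in WEIGHTED.finditer(prompt):
        before = prompt[pos:match.start()].strip(SEPARATORS)
        if before:
            chunks.append((before, 1.0))
        chunks.append((match.group(1).strip(), float(match.group(2))))
        pos = match.end()
    rest = prompt[pos:].strip(SEPARATORS)
    if rest:
        chunks.append((rest, 1.0))
    return chunks


prompt = ("artwork (Highest Quality, 4k, masterpiece, Amazing Details:1.1) "
          "adult, wolf, bare torso, muscular, black fur, grey muzzle.")
for text, weight in split_weights(prompt):
    print(f"{weight:>4} | {text}")
```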

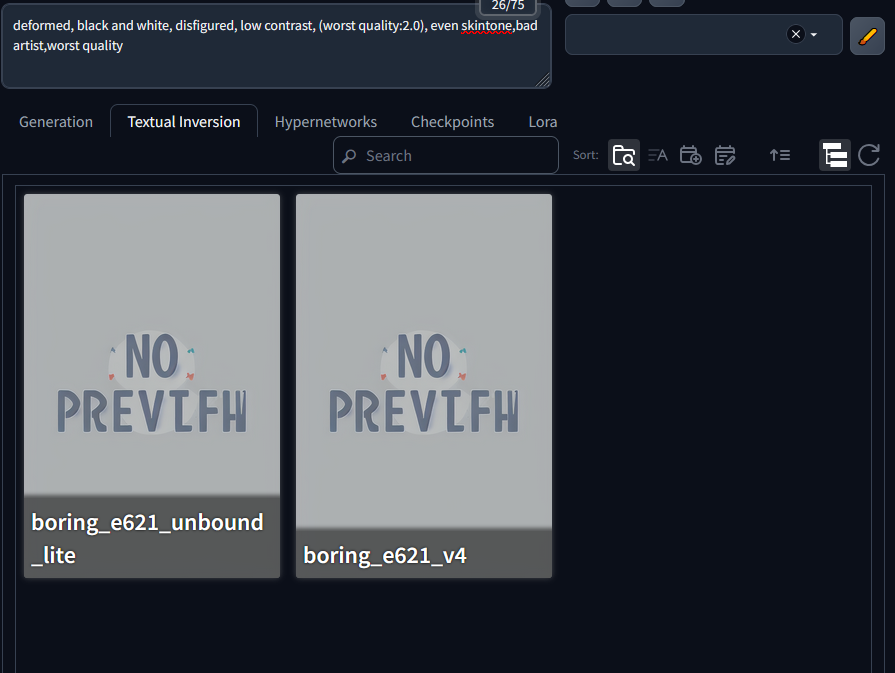

The negative prompt is a list of words and embeddings to keep out of your render. Here we'll use:

deformed, black and white, disfigured, low contrast, (worst quality:2.0), even skintone,bad artist,worst quality,

By nature, the negative prompt is more generic and less directive than the prompt: it's more of a list of concepts.

In my experience, it's best not to put too many elements in it, as it will end up confusing the AI and be counterproductive.

The Generation tab is the most important one and contains many settings to make your creations even more specific:

Sampling Methods:

To generate an image, Stable Diffusion doesn't 'draw' per se: it starts with a completely random image made of noise and removes that noise, step by step, until it ends up with a proper render. The method that drives this denoising is called the sampler, or sampling method. There are a LOT of methods available in Stable Diffusion, and I suggest you try them out to see which one works best for you.
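To picture what a sampler does, here's a toy Python sketch of a simple Euler-style loop. Big assumptions: the 'image' is just three numbers, the noise levels go down in a straight line (a hypothetical, very basic schedule), and the denoiser is a made-up stand-in that already knows the clean result, whereas in real Stable Diffusion a neural network makes that guess on a compressed latent image. It only shows the idea: start from noise and remove a bit of it at each step; the number of steps is simply how many times the loop runs.

```python
import random
from typing import Callable

Denoiser = Callable[[list[float], float], list[float]]


def linear_sigmas(steps: int, sigma_max: float = 10.0) -> list[float]:
    """Noise levels going from sigma_max down to 0, one per step (plus the end)."""
    return [sigma_max * (1 - i / steps) for i in range(steps + 1)]


def toy_euler_sample(denoise: Denoiser, size: int, steps: int, seed: int) -> list[float]:
    """Toy Euler-style sampler: start from pure noise, remove some at each step."""
    rng = random.Random(seed)
    sigmas = linear_sigmas(steps)
    # Starting point: random noise at the highest noise level.
    x = [rng.gauss(0.0, 1.0) * sigmas[0] for _ in range(size)]
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        guess = denoise(x, sigma)  # the "model" guesses the clean image
        # Direction pointing from the clean guess toward the noisy image.
        d = [(xi - gi) / sigma for xi, gi in zip(x, guess)]
        # Euler step down to the next, lower noise level.
        x = [xi + (sigma_next - sigma) * di for xi, di in zip(x, d)]
    return x


# Hypothetical stand-in for the model: it always "knows" the clean picture.
TARGET = [0.2, 0.8, 0.5]
result = toy_euler_sample(lambda x, sigma: TARGET, size=3, steps=20, seed=42)
print([round(v, 3) for v in result])
```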

Schedule Type:

Another part of the denoising process: it decides how much noise is removed at each step. Explaining exactly how they all differ would be a lot of work, and honestly a pretty useless amount of information to drop right here.

I recommend leaving it on Automatic for now, as it works like a charm, but of course you're highly encouraged to mess around and test stuff out!

Sampling Steps:

Now THIS is WAY more interesting and important.

Basically: the higher the number, the better your image will end up, BUT the longer it will take.

(5 steps)

(80 steps)

But sometimes, higher doesn't mean better!

(30 steps, basically the SAME)

So, again, it's more of a trial-and-error process... Some models will take HOURS to make an image at 60 steps while others take seconds; some will be ideal at 45 steps, while others do a perfectly fine job at 20... Try things out, have fun, and don't be afraid to create messed-up renders!

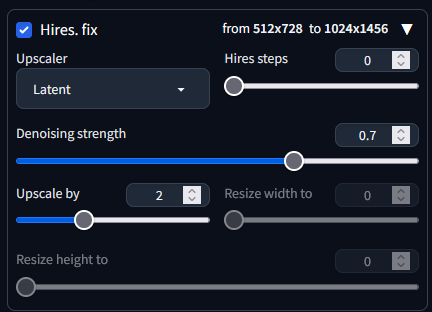

Hires.fix

Another key element of a nice render is its size... Obviously a 1024 * 1436 image will have way more detail than a 64 * 128 one!

(the eldritch horror generated by a 64 * 128 resolution)

However, simply entering a large resolution in our settings doesn't always give a good result, and can cost a lot of time.

So, to get a nice image AND a high resolution, we use the Hires.fix option: it creates the image at a small resolution, then upscales it and runs a second pass to add more detail and enhance it.

This lets us upscale our image with different methods (again, there's NO point explaining all of them right here) and various other settings.

To keep this tutorial under 1,000,000 words and let you make a render before next year, let's focus on 'Upscale by', which is basically how much you want to multiply the size of your image by.

2 means double it, obviously, and as you can guess, the bigger the upscale, the longer it will take.

Our boy got even more detail now, look at those eyes~ <3 (30 steps, resolution: 512 * 728, upscale * 2)
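The 'Upscale by' arithmetic is simple, but it's worth seeing how fast it grows: doubling both sides means 4 times as many pixels to work on, which is a big part of why bigger upscales take longer. A tiny Python sketch (the helper names are hypothetical, and I'm assuming sizes are simply truncated to whole pixels):

```python
def hires_size(width: int, height: int, upscale_by: float) -> tuple[int, int]:
    """Final size after Hires.fix: each side multiplied by 'Upscale by'."""
    return int(width * upscale_by), int(height * upscale_by)


def pixel_ratio(upscale_by: float) -> float:
    """How many times more pixels the final image has than the first pass."""
    return upscale_by ** 2


w, h = hires_size(512, 728, 2)
print(f"{w} * {h}, {pixel_ratio(2):g}x the pixels")  # 1024 * 1456, 4x the pixels
```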

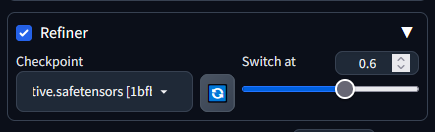

Refiner:

That's kind of a tricky one, which I don't use often. It basically allows you to switch to another model at some point during the rendering... It MAY be cool, but it's often weird in my experience!

The only options here are:

the next checkpoint (model) you want, and WHEN you want to switch to it.

So, if I wanted to switch to MidgardPony at 60%, I would do this:

And it will give me this result (...):

(30 steps, resolution: 512 * 728, upscale * 2)

However, since it switches models during the creation, it adds render time; keep that in mind, as some checkpoints can take a while to load!

For this image, it took A LOT longer for the Midgard part than for the BB95 one.
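To get a feel for the 'Switch at' value, here's a small Python sketch that turns it into a step number. It assumes the value is a fraction of the sampling steps (0.6 for 60%) and ignores the extra Hires.fix pass; `refiner_switch_step` is a hypothetical helper, not part of the web UI.

```python
def refiner_switch_step(steps: int, switch_at: float) -> int:
    """Step after which the refiner checkpoint takes over (hypothetical helper)."""
    if not 0.0 <= switch_at <= 1.0:
        raise ValueError("switch_at must be between 0 and 1")
    return round(steps * switch_at)


steps = 30
switch = refiner_switch_step(steps, 0.6)
print(f"steps 1-{switch}: BB95, steps {switch + 1}-{steps}: MidgardPony")
# steps 1-18: BB95, steps 19-30: MidgardPony
```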

Width & Height

We're getting back to simpler and clearer things!

As you can imagine, these are the dimensions of your render; it's important to set them in a way that suits what you want.

A portrait format (512 * 728, with upscaling):

A landscape format (728 * 512, with upscaling):

Also note how radically the image changes, despite using exactly the same seed and settings apart from the resolution.
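Side note on picking sizes: Stable Diffusion works on a compressed 'latent' version of the image that's 8 times smaller on each side, so widths and heights are normally kept to multiples of 8. Here's a small Python sketch with hypothetical helpers that snaps a size to the nearest multiple of 8 and tells you whether it's a portrait or a landscape format:

```python
def snap_to_8(value: int) -> int:
    """Round a dimension to the nearest multiple of 8 (at least 8).

    Note: Python's round() sends exact halves to the even neighbour.
    """
    return max(8, round(value / 8) * 8)


def orientation(width: int, height: int) -> str:
    """'portrait' if taller than wide, 'landscape' if wider, else 'square'."""
    if height > width:
        return "portrait"
    if width > height:
        return "landscape"
    return "square"


for w, h in [(512, 728), (728, 512), (510, 730)]:
    sw, sh = snap_to_8(w), snap_to_8(h)
    print(f"{w} * {h} -> {sw} * {sh} ({orientation(sw, sh)})")
```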

Batch count:

The number of renders made every time you hit Generate.

It doesn't cost more resources, even if you set it to 150... BUT, of course, in that case it would take 150 times longer to complete the whole process.

It's useful with fast models to quickly try out ideas and see nice results.

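If you want truly random results, keep the seed at -1 with it: with a fixed seed, each new image just uses the next seed in line (seed + 1, seed + 2, and so on), so the results are reproducible rather than random.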

Batch size

The number of renders per batch, meaning that:

Batch count: 1

Batch size: 1

will give you ONE image.

Batch count: 5

Batch size: 10

would make 5 batches of 10 images, so 50 images.

NOTE: the batch SIZE actually uses more resources (GPU memory), since all the images of one batch are made at the same time!
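The batch arithmetic as a small Python sketch (hypothetical helper): the outer list is the batch count, run one after another (more time), and each inner list is one batch whose images are made together (more memory).

```python
def plan_batches(batch_count: int, batch_size: int) -> list[list[int]]:
    """Image numbers grouped by batch: batches run one after another,
    while the images inside one batch are generated together."""
    return [
        list(range(b * batch_size, (b + 1) * batch_size))
        for b in range(batch_count)
    ]


plan = plan_batches(batch_count=5, batch_size=10)
total = sum(len(batch) for batch in plan)
print(f"{len(plan)} batches, {total} images")  # 5 batches, 50 images
```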

CFG Scale:

The 'rigidity' of your prompt versus the AI's 'artistic' freedom. The higher the setting, the more closely the AI follows your prompt, while a low value allows more randomness in the result:

(All of the previous images were made with a CFG of 8)

CFG of 2:

Lower doesn't necessarily mean bad!

CFG of 20:

Likewise, a very strict scale doesn't mean a perfect result.

That part depends a LOT on how your prompt is worded, obviously.
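For the curious, here's roughly what the CFG scale does under the hood. At each sampling step, the model makes two predictions: one with your prompt and one without it (in the web UI, the negative prompt takes that second slot). The CFG scale decides how far to push from the second toward the first. A minimal Python sketch, with made-up numbers standing in for the model's predictions:

```python
def apply_cfg(uncond: list[float], cond: list[float], cfg_scale: float) -> list[float]:
    """Classifier-free guidance: start from the 'without prompt' prediction
    and move cfg_scale times the difference toward the 'with prompt' one."""
    return [u + cfg_scale * (c - u) for u, c in zip(uncond, cond)]


# Made-up values standing in for the model's two predictions.
negative = [0.0, 1.0]
positive = [0.5, 0.5]
for scale in (1, 2, 8, 20):
    print(scale, apply_cfg(negative, positive, scale))
```

With a scale of 1 you get exactly the 'with prompt' prediction; every step above that exaggerates the difference, which is one way to see why very high values can look harsh.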

FINALLY, the last element we need to see here is the Seed:

Simply put, every seed gives a different image. By default it's set to -1, meaning random.

For this tutorial, the seed is 2087480585, meaning the same image is made over and over again, but you may have noticed some variations depending on our settings changes.

Using a fixed seed is very handy to improve a great image, or to test out different settings like sampling methods, schedule types or even checkpoints!
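Here's a minimal Python sketch of why a fixed seed gives the same image: the seed decides the starting noise, so the same seed (with the same settings) means the same starting point. It uses Python's own random generator as a stand-in, so the numbers won't match what the web UI produces; `resolve_seed`, `starting_noise` and the seed range are assumptions for illustration.

```python
import random

MAX_SEED = 2**32  # assumption: a typical 32-bit seed range


def resolve_seed(seed: int) -> int:
    """-1 means 'pick a random seed'; any other value is kept as-is."""
    return random.randrange(MAX_SEED) if seed == -1 else seed


def starting_noise(seed: int, size: int = 4) -> list[float]:
    """Deterministic 'noise' from a seed: same seed, same numbers."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


fixed = resolve_seed(2087480585)
print(starting_noise(fixed) == starting_noise(fixed))  # True
print(resolve_seed(-1))  # a different random seed on each run
```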

Alright! It's DONE, right? We've seen everything and now this tutorial can end, right... RIGHT?!

NO, it is NOT...

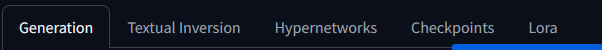

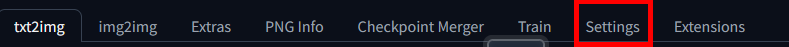

Okay, so we've seen the FIRST tab, but you may have noticed the others:

For your sanity (and mine), we're only going to look at one of them, which is directly linked to what we downloaded earlier:

Textual Inversion

Textual Inversion is where your embeddings are displayed. To use one in your prompts, click in the prompt box you want to add it to, go to the tab, and simply click on the desired embedding!

Example:

If I want to use 'boring_e621_V4' in the negative prompt, I just need to click in the negative prompt box, then go to Textual Inversion and click on the desired embedding!

Result with the new embedding:

The embedding gave us more defined eyes and generally better lighting and body shape, even though we're still using a very basic prompt and a minimal negative prompt.
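As far as I know, clicking an embedding in the Textual Inversion tab simply writes its name into the prompt box, and that name is the file name without its extension, so typing it by hand works too. Here's a small Python sketch, with hypothetical helpers and an assumed list of file extensions, that lists the names found in your embeddings folder and appends one to a prompt:

```python
from pathlib import Path

# Assumption: common file extensions for embeddings.
EMBEDDING_EXTENSIONS = {".pt", ".safetensors", ".bin"}


def embedding_names(embeddings_dir: Path) -> list[str]:
    """Names you'd type in a prompt: each embedding's file name without extension."""
    return sorted(
        p.stem
        for p in embeddings_dir.iterdir()
        if p.is_file() and p.suffix.lower() in EMBEDDING_EXTENSIONS
    )


def add_to_prompt(prompt: str, name: str) -> str:
    """Append an embedding name to a prompt, comma-separated."""
    prompt = prompt.strip().rstrip(",")
    return f"{prompt}, {name}" if prompt else name


print(add_to_prompt("deformed, black and white, disfigured,", "boring_e621_v4"))
```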

And finally:

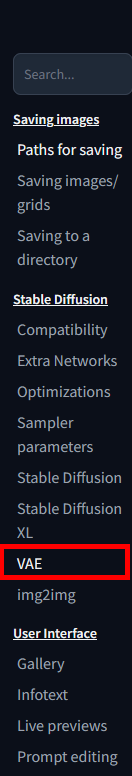

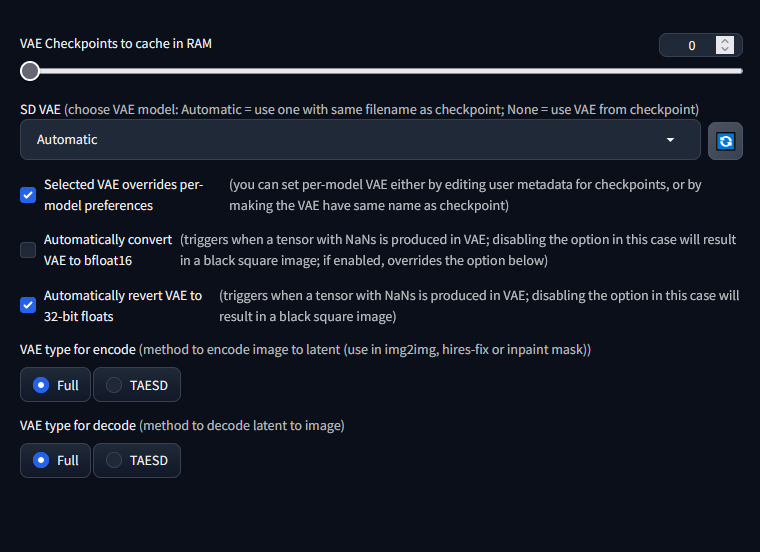

The VAE!

Using it is a bit trickier.

You need to go to Settings:

Then, go to VAE:

And finally, select your VAE (furception_vae_1-0), or leave it on 'Automatic' if you want!

Don't forget to apply the settings!

Alright! Finally, and for real this time: it's time to make our first render!

First, let's reset the seed (either by clicking the dice button, or entering -1 in the field), and take a look at our prompts:

Starting with what we want, we have:

artwork (Highest Quality, 4k, masterpiece, Amazing Details:1.1) adult, wolf, bare torso, muscular, black fur, grey muzzle.

It's alright, but not very complete. Where is the character, what is he doing, what color are his eyes, what about his expression, his build, his muzzle length, does he have a specific haircut, what about the mood of the render, the camera angle, the effects and lighting?
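One way to keep track of all those questions is to write your prompt as small groups of tags (character, details, scene...) and join them at the end. A minimal Python sketch with a hypothetical `build_prompt` helper, which also drops a tag you accidentally typed twice:

```python
def build_prompt(*groups: list[str]) -> str:
    """Join groups of tags into one prompt, skipping empty tags and
    case-insensitive duplicates (the first occurrence is kept)."""
    seen: set[str] = set()
    tags: list[str] = []
    for group in groups:
        for tag in group:
            tag = tag.strip()
            if tag and tag.lower() not in seen:
                seen.add(tag.lower())
                tags.append(tag)
    return ", ".join(tags)


character = ["adult", "wolf", "muscular", "black fur", "grey muzzle"]
details = ["long braided hair", "dynamic pose", "serious look", "dynamic pose"]
scene = ["dusty road in a deep forest"]
print(build_prompt(character, details, scene))
```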

There's an infinite number of questions you could answer in your prompt, but let's stay a bit classic here, with an SFW warrior in a deep forest:

artwork (Highest Quality, 4k, masterpiece, Amazing Details:1.1) adult, wolf, bare torso, muscular, black fur, grey muzzle, red loincloth, long braided hair, dynamic pose, fluffy fur, serious look, green iris eyes, dynamic pose, great anatomy

dusty road in a deep and eery forest.

And here's the result:

Now, let's simply change these settings: Sampling: DPM++ 3M SDE, Schedule: Automatic, Steps: 50, CFG Scale: 8

Let's see the result:

Nice~

And since we're not using a fixed seed anymore, we can make as many renders as we want with this prompt!

But what about a more roguish lion man from outer space?

artwork (Highest Quality, 4k, masterpiece, Amazing Details:1.1) SCifi setting, lion, adult, photo realism, soldier, scifi armor, space, intense battle in background,hyper realism, RAW photo, (realism, photorealistic:1.3), detailed, hi res.

And so on and on! Now it's your turn to make cool stuff! ;)

I hope this tutorial and introduction to the world of AI-generated content helped you. A lot of subtleties and details were left out to keep this as digestible as possible, but I think it's a good start: now you can make your own renders, and look around for more detailed and specific tutorials if you want!

Good luck, and have fun!~

Thank you for reading this! If you see any mistakes or run into problems, please reach me at: amby@tutamail.com! <3